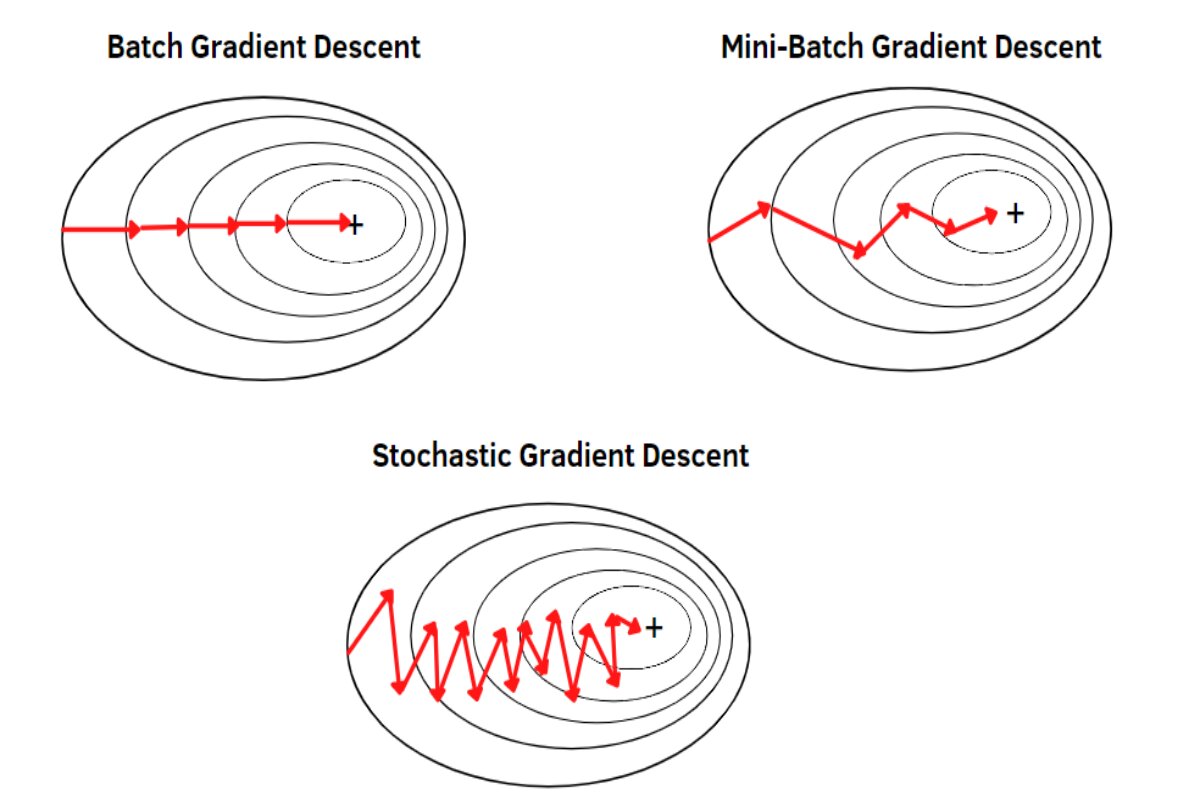

EfficientDL: Mini-batch Gradient Descent Explained

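Mini-batch Gradient Descent is a compromise between Batch Gradient Descent (BGD) and Stochastic Gradient Descent (SGD). It updates the model parameters using small, randomly sampled subsets (mini-batches) of the training data, rather than the full dataset or a single example.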
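To make the idea concrete, here is a minimal sketch, assuming a toy one-feature linear model y ≈ w·x + b trained on mean squared error. The function name `minibatch_gd` and its defaults (`lr`, `batch_size`, `epochs`) are illustrative choices, not taken from the post.

```python
import random

def minibatch_gd(xs, ys, lr=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y ≈ w*x + b by mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # reshuffle so each epoch sees different mini-batches
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Average the MSE gradient over this mini-batch only
            errors = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            grad_w = sum(2 * e * x for e, x in errors) / len(batch)
            grad_b = sum(2 * e for e, _ in errors) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Toy, noiseless data from y = 2x + 1
xs = [i / 10 for i in range(20)]
ys = [2 * x + 1 for x in xs]
w, b = minibatch_gd(xs, ys, batch_size=4)
print(f"w={w:.3f}, b={b:.3f}")
```

The `batch_size` parameter is what makes this a compromise: setting it to 1 turns the loop into SGD, and setting it to `len(xs)` turns it into BGD.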

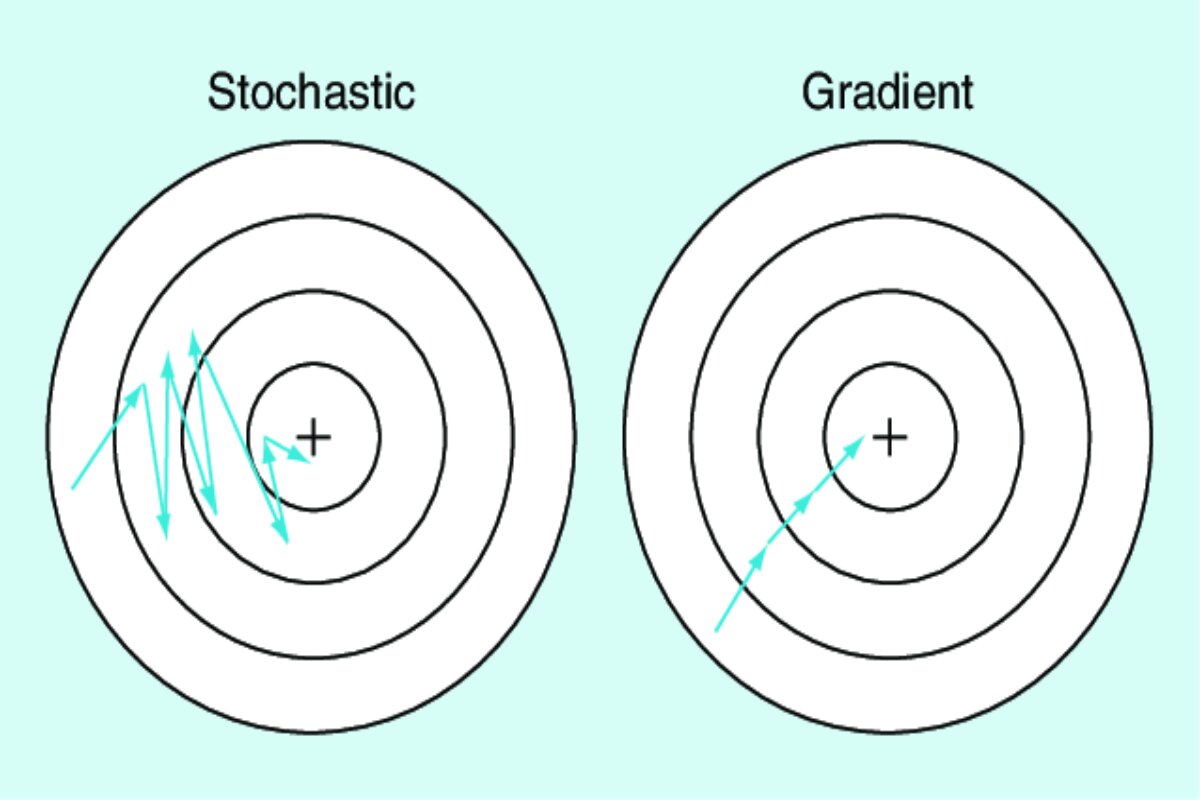

Efficient Opti: Mastering Stochastic Gradient Descent

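Stochastic Gradient Descent (SGD) is a variant of the Gradient Descent optimization algorithm. While regular Gradient Descent computes the gradient over the entire training dataset, SGD estimates it from a single randomly chosen training example at each update.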
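A minimal sketch of that one-example-per-update loop, assuming a toy model y ≈ w·x with squared-error loss; `sgd_fit_slope` and the learning rate are hypothetical names and values chosen for illustration.

```python
import random

def sgd_fit_slope(xs, ys, lr=0.1, epochs=50, seed=0):
    """Fit y ≈ w*x with SGD: one randomly ordered example per update."""
    rng = random.Random(seed)
    data = list(zip(xs, ys))
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the examples in a new random order each epoch
        for x, y in data:
            grad = 2 * (w * x - y) * x  # gradient of (w*x - y)**2 for this single example
            w -= lr * grad
    return w

xs = [0.5, 1.0, 1.5, 2.0]
ys = [3 * x for x in xs]  # toy data from y = 3x
print(round(sgd_fit_slope(xs, ys), 4))
```

Each update is cheap because it touches only one example, at the cost of a noisier path toward the minimum than full-batch Gradient Descent.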

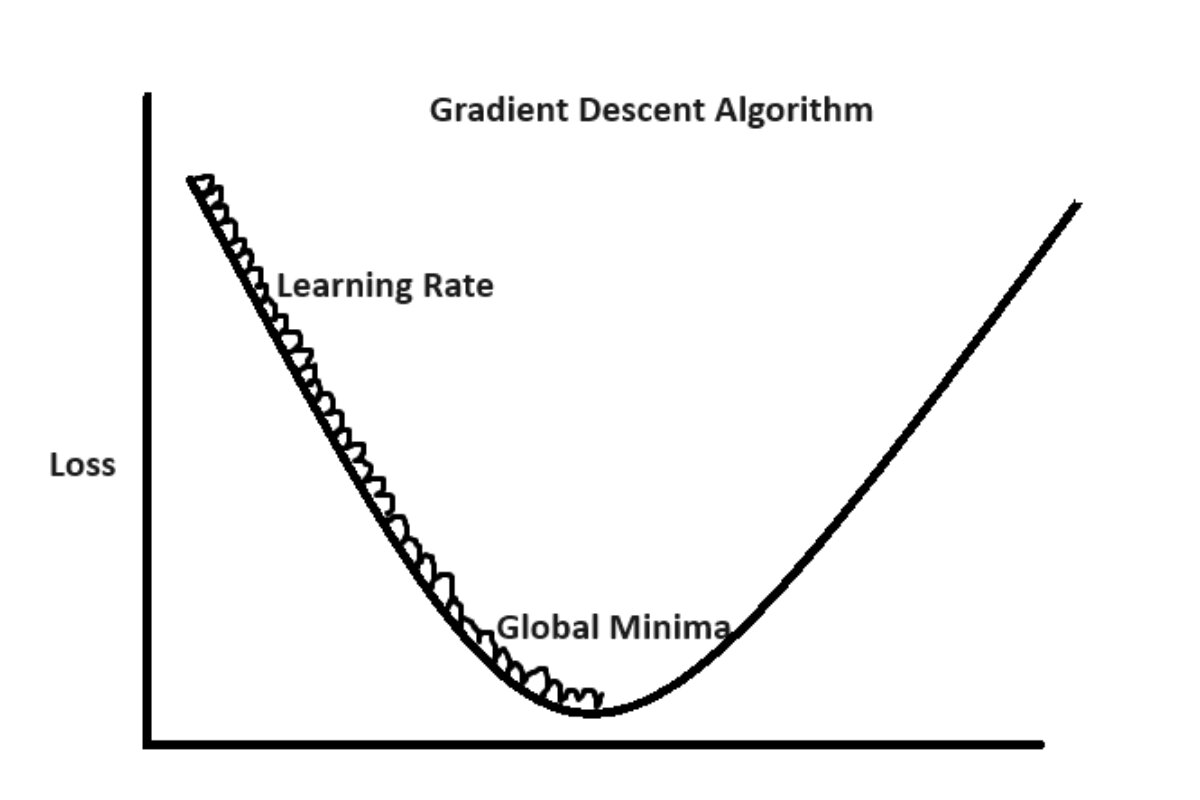

OptiLearn: Mastering Gradient Descent

Gradient Descent is a fundamental optimization algorithm widely used in training deep learning models. It helps the model minimize its loss function by repeatedly adjusting the parameters in the direction of the negative gradient.
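The core update rule fits in a few lines. This sketch assumes a simple one-parameter function f(θ) = (θ − 3)², whose gradient is 2(θ − 3); the helper name `gradient_descent` and the step size are illustrative.

```python
def gradient_descent(grad, theta0, lr=0.1, steps=100):
    """Repeatedly step against the gradient: theta <- theta - lr * grad(theta)."""
    theta = theta0
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

# f(theta) = (theta - 3)**2 has gradient 2 * (theta - 3) and its minimum at theta = 3
theta = gradient_descent(lambda t: 2 * (t - 3), theta0=0.0)
print(round(theta, 4))  # 3.0
```

The learning rate `lr` controls the step size: too small and convergence is slow, too large and the updates can overshoot and diverge.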

Revolutionizing Deep Learning: Types of Optimization Methods

Optimization functions play a pivotal role in training machine learning models, especially in deep learning. Different types of optimization functions update a model's parameters in different ways.

Exploring The Role of Optimization Functions Across Sectors

An optimization function, often referred to as a cost function or fitness function, is a fundamental component in mathematical optimization.

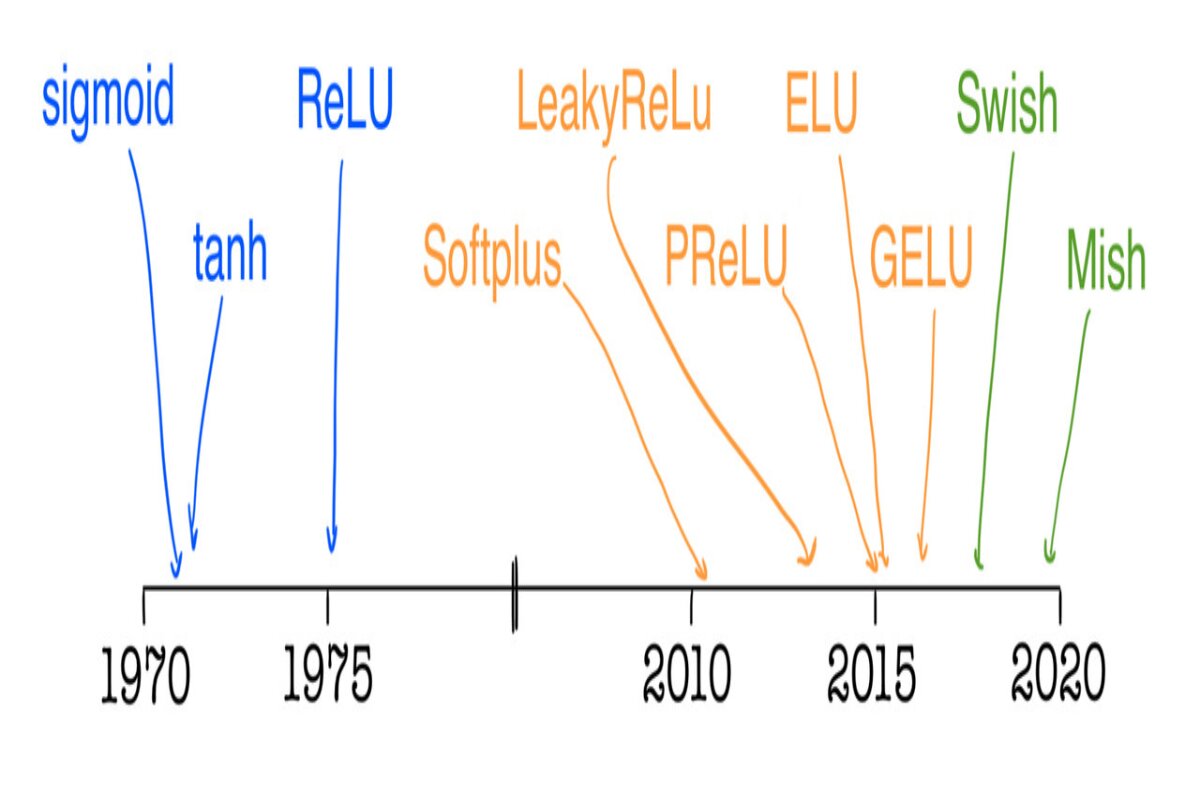

Mastering Activation Functions: Unleashing Neural Power

Activation Function: At the heart of artificial neural networks, an activation function plays a crucial role in introducing non-linearity into the network.
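Here is a small sketch of three common activation functions (sigmoid, ReLU, tanh), plus a quick check of why non-linearity matters. The function names and the toy coefficients `a1, b1, a2, b2` are illustrative assumptions, not from the post.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, squashes z into (0, 1); split by sign to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Rectified linear unit: passes positives through, zeroes out negatives."""
    return max(0.0, z)

def tanh(z):
    """Hyperbolic tangent, squashes z into (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):+.3f}")

# Why non-linearity matters: two linear "layers" with no activation collapse into one,
# since a2*(a1*x + b1) + b2 == (a2*a1)*x + (a2*b1 + b2).
a1, b1, a2, b2 = 3.0, 1.0, -2.0, 0.5
for x in (-1.0, 0.0, 4.0):
    stacked = a2 * (a1 * x + b1) + b2
    single = (a2 * a1) * x + (a2 * b1 + b2)
    assert math.isclose(stacked, single)
```

Without an activation between them, stacking more linear layers adds no expressive power, which is exactly the gap activation functions fill.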

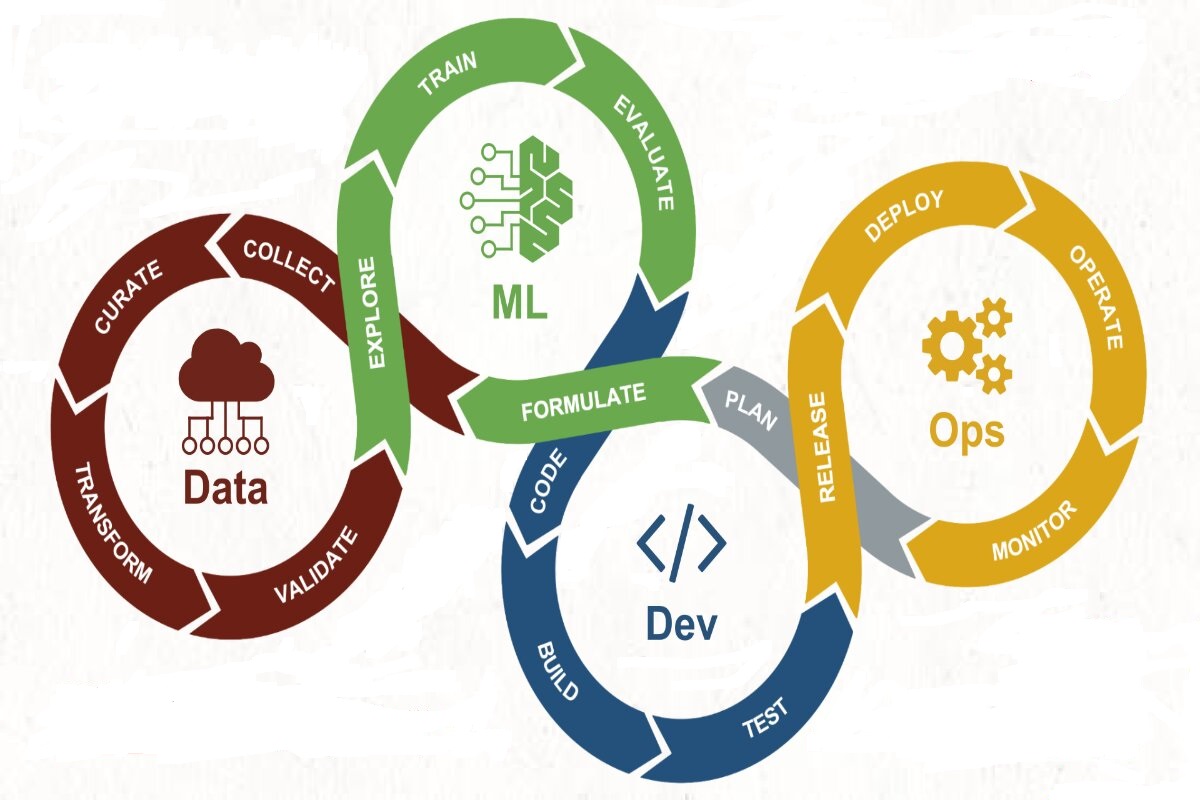

Elevating ML Model Performance: The Power of MLOps

What is MLOps? MLOps, short for "Machine Learning Operations," is a set of practices and principles that combine machine learning, data engineering, and DevOps to deploy and maintain models in production reliably.

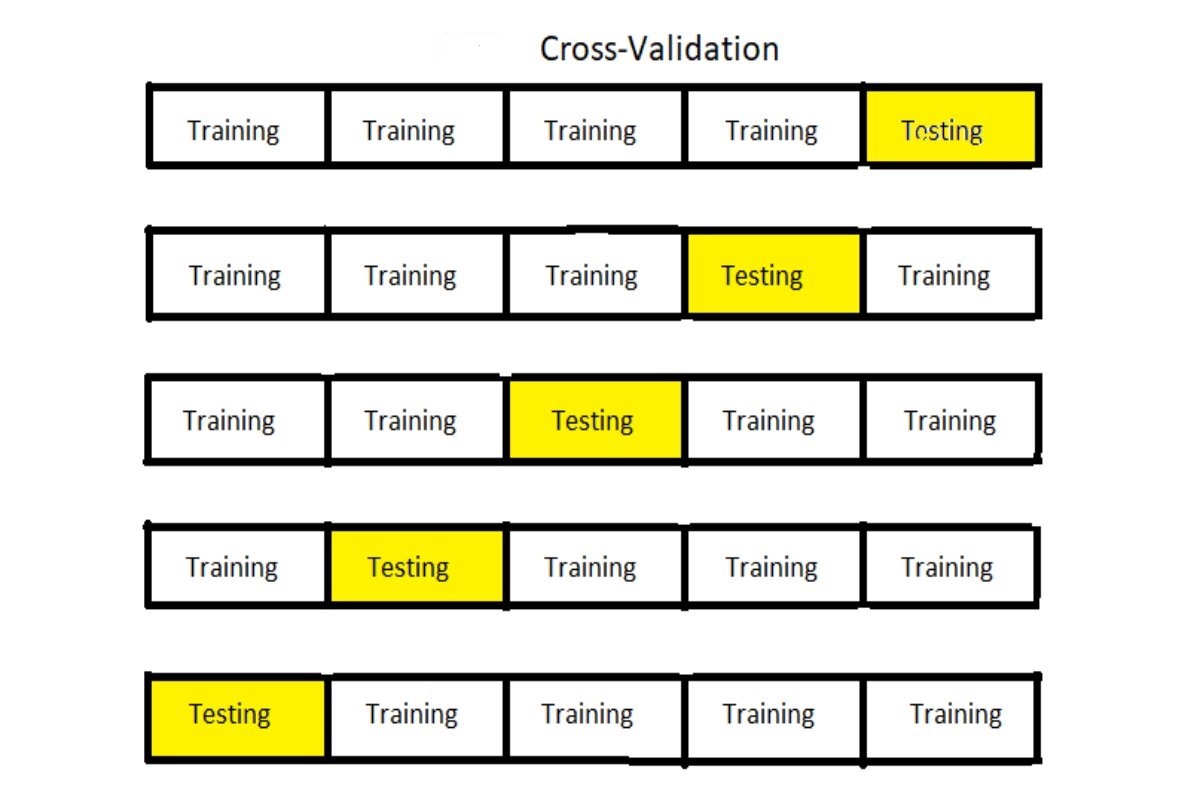

Cross-Validation: Unveiling Model Performance

Cross-validation is a fundamental technique used in machine learning and statistics to assess the performance of a predictive model and estimate how well it generalizes to unseen data.
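A minimal k-fold cross-validation sketch in plain Python, assuming a one-feature least-squares line as the model and mean squared error as the score. The helpers `k_fold_indices`, `fit_line`, and `cross_validate` are hypothetical names; in practice a library such as scikit-learn provides ready-made splitters.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle 0..n-1 and deal them into k disjoint, roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_line(xs, ys):
    """Ordinary least squares for y ≈ w*x + b (closed form, one feature)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return w, my - w * mx

def cross_validate(xs, ys, k=5):
    """Return the held-out MSE of each fold in k-fold cross-validation."""
    scores = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        # Train on the other k-1 folds
        w, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        # Score only on the fold the model never saw
        mse = sum((w * xs[i] + b - ys[i]) ** 2 for i in fold) / len(fold)
        scores.append(mse)
    return scores

xs = list(range(10))
ys = [x * x for x in xs]  # toy data: a straight line cannot fit it perfectly
scores = cross_validate(xs, ys, k=5)
print([round(s, 2) for s in scores], "mean:", round(sum(scores) / len(scores), 2))
```

Every example is held out exactly once, so the mean of the fold scores uses all the data for evaluation without ever scoring the model on points it was trained on.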

Navigating Outliers for Accurate Data Analysis & Decisions

In this blog, we look at outliers in machine learning: the definition of outliers, the types of outliers, and more.
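One common way to flag outliers is the interquartile-range (IQR) rule, also known as Tukey's fences. This is a sketch of that general technique, not necessarily the method the post uses; the name `find_outliers_iqr`, the 1.5 factor, and the sample readings are illustrative assumptions.

```python
import statistics

def find_outliers_iqr(values, factor=1.5):
    """Flag values outside [Q1 - factor*IQR, Q3 + factor*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]

readings = [10, 12, 11, 13, 12, 11, 95, 12, 10, 11]  # toy data with one obvious outlier
print(find_outliers_iqr(readings))  # [95]
```

Because quartiles are based on rank rather than on the mean, a single extreme value like 95 barely shifts the fences, which makes this rule more robust than a plain mean-and-standard-deviation cutoff.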

Navigating the Landscape of Natural Language Processing

Natural Language Processing (NLP) is a comprehensive discipline that merges linguistics, computer science, and artificial intelligence.