Mastering Git Commands: From Workspace to Repository

The git command is used to transfer files from the project folder, which is also known as a workspace

Celebrating a New Chapter: Lavkesh Garg Joins StatusNeo as Delivery and Customer Success Leader

We are delighted to welcome Lavkesh Garg to the StatusNeo family as Vice President, Delivery and Customer Success Leader. Lavkesh will

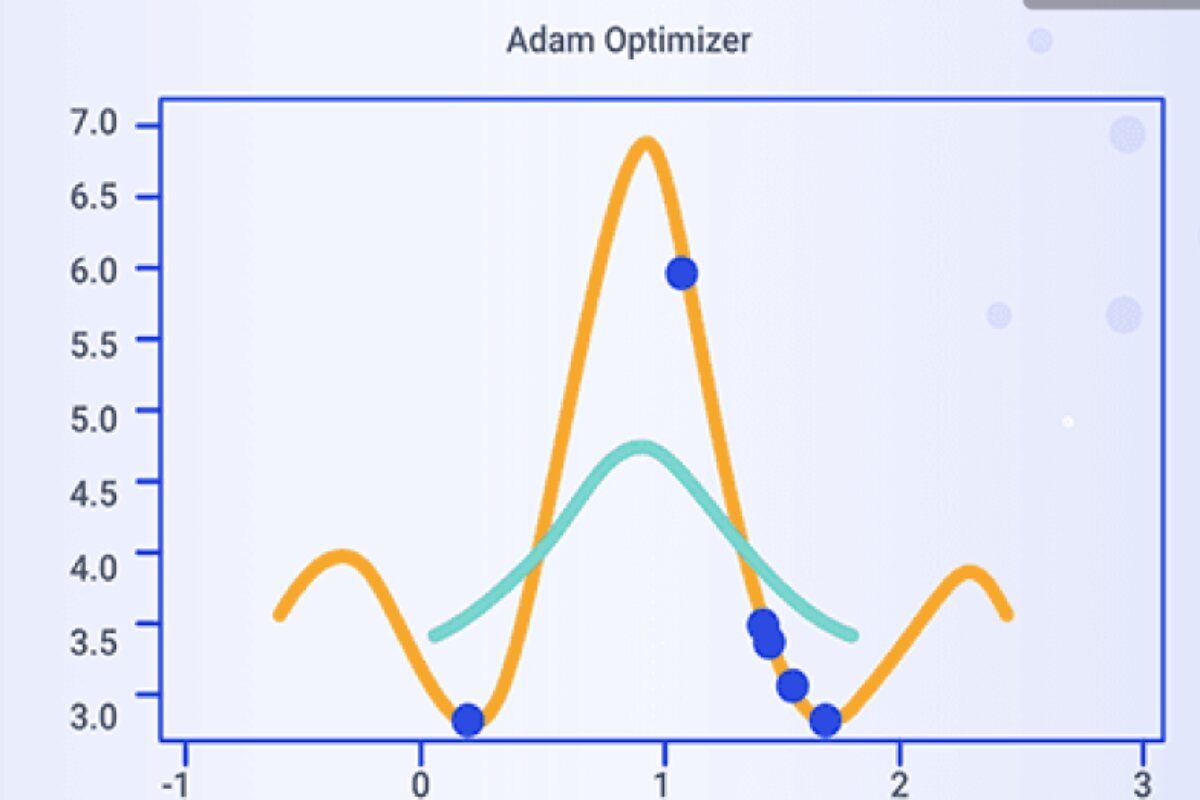

Adam: Efficient Deep Learning Optimization

Adam (Adaptive Moment Estimation) is an optimization algorithm commonly used for training machine learning models, particularly deep neural networks. It

Boosting Neural Network: AdaDelta Optimization Explained

AdaDelta is a gradient-based optimization algorithm commonly used in machine learning and deep learning for training neural networks. It was

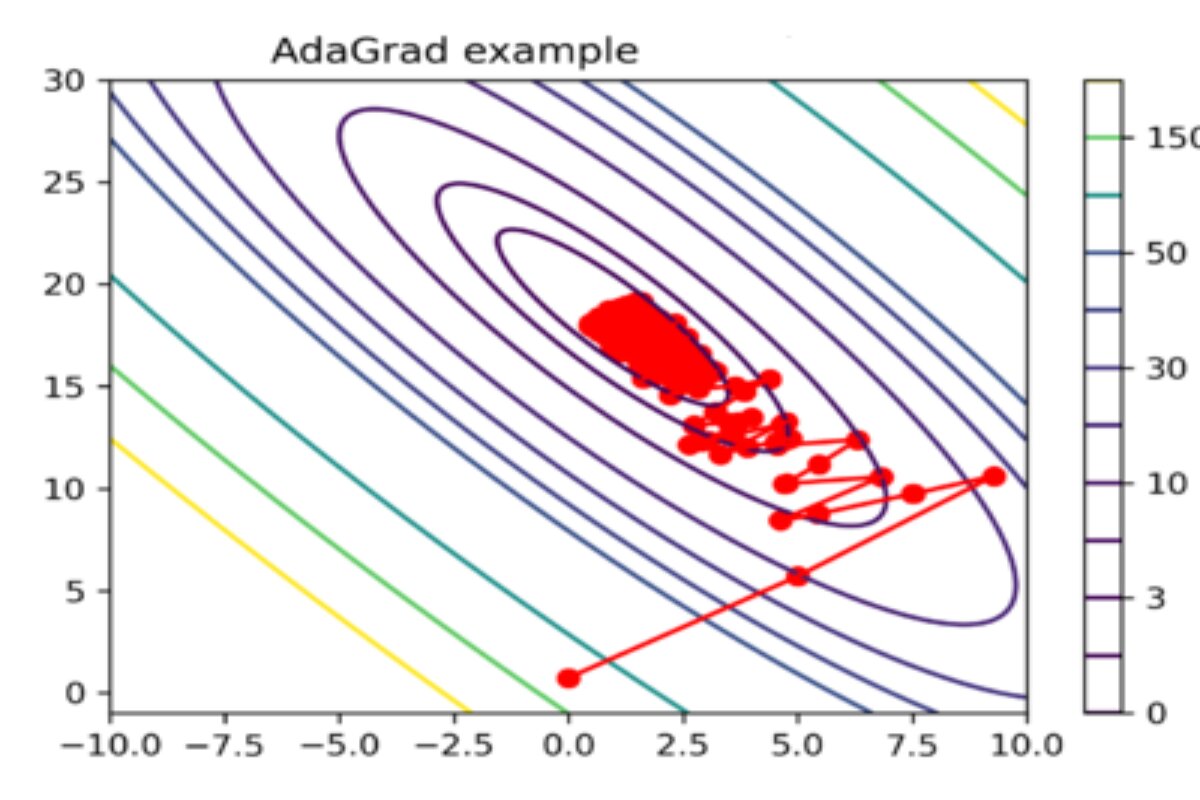

Adaptive Gradient Optimization Explained

AdaGrad stands for Adaptive Gradient Algorithm. It is a popular optimization algorithm used in machine learning and deep learning for

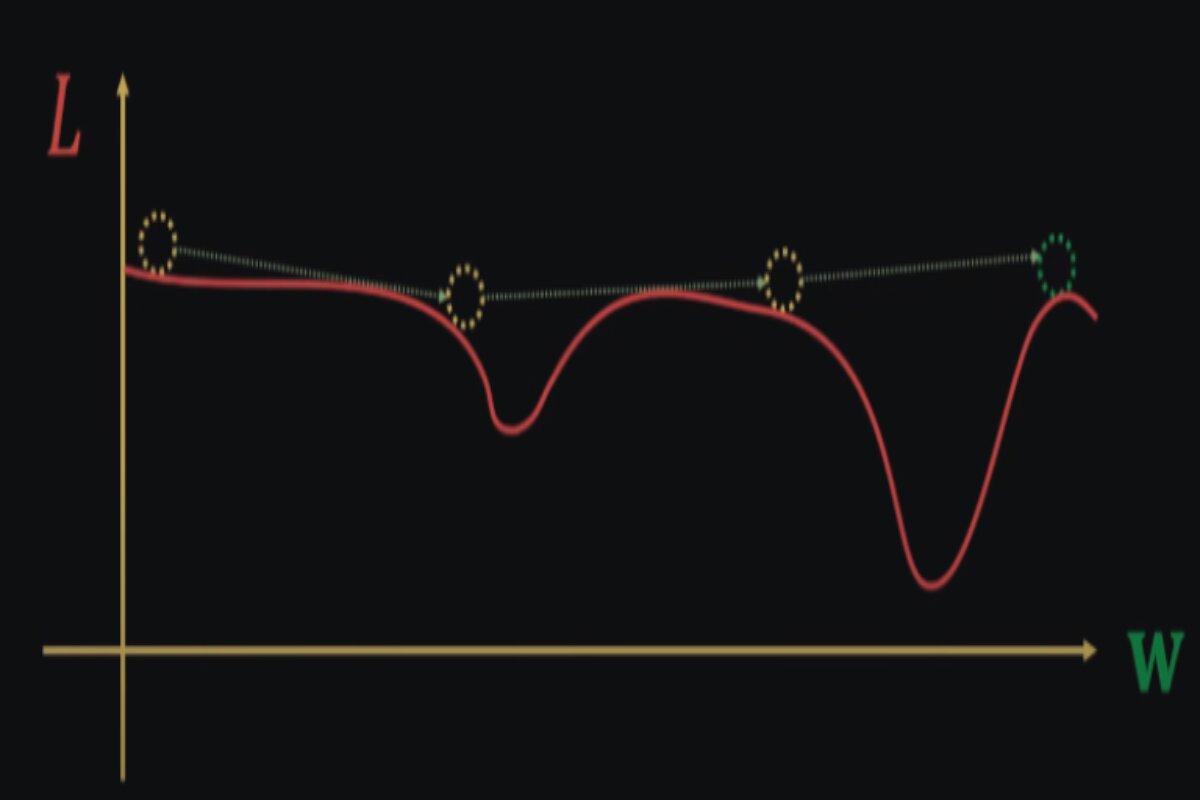

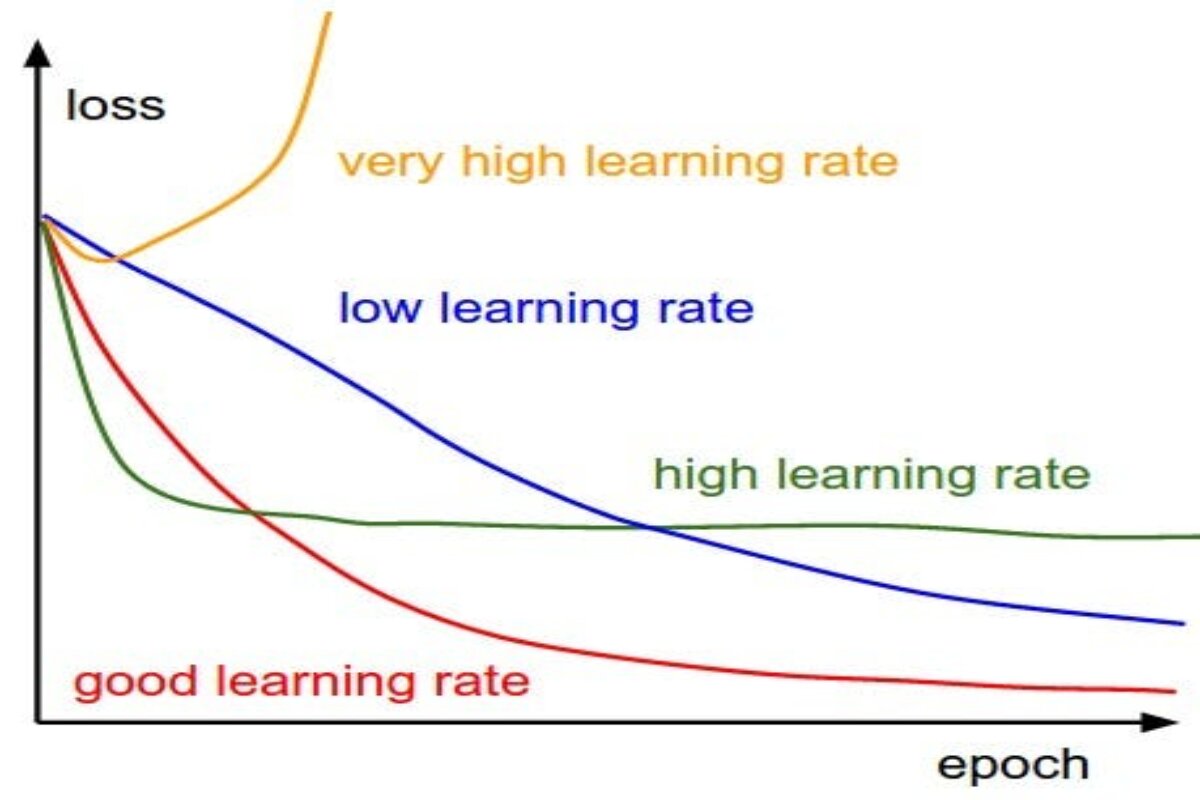

Mastering Optimization: Dynamic Learning Rates Unveiled

Definition of Traditional Optimization Functions: Traditional optimization functions are mathematical algorithms and techniques used in machine learning to fine-tune model

Elevating Your Journey: Personalized Digital Services in Air Travel

Traveling by air has always been an exciting adventure, but it's no secret that navigating airports and airlines can sometimes

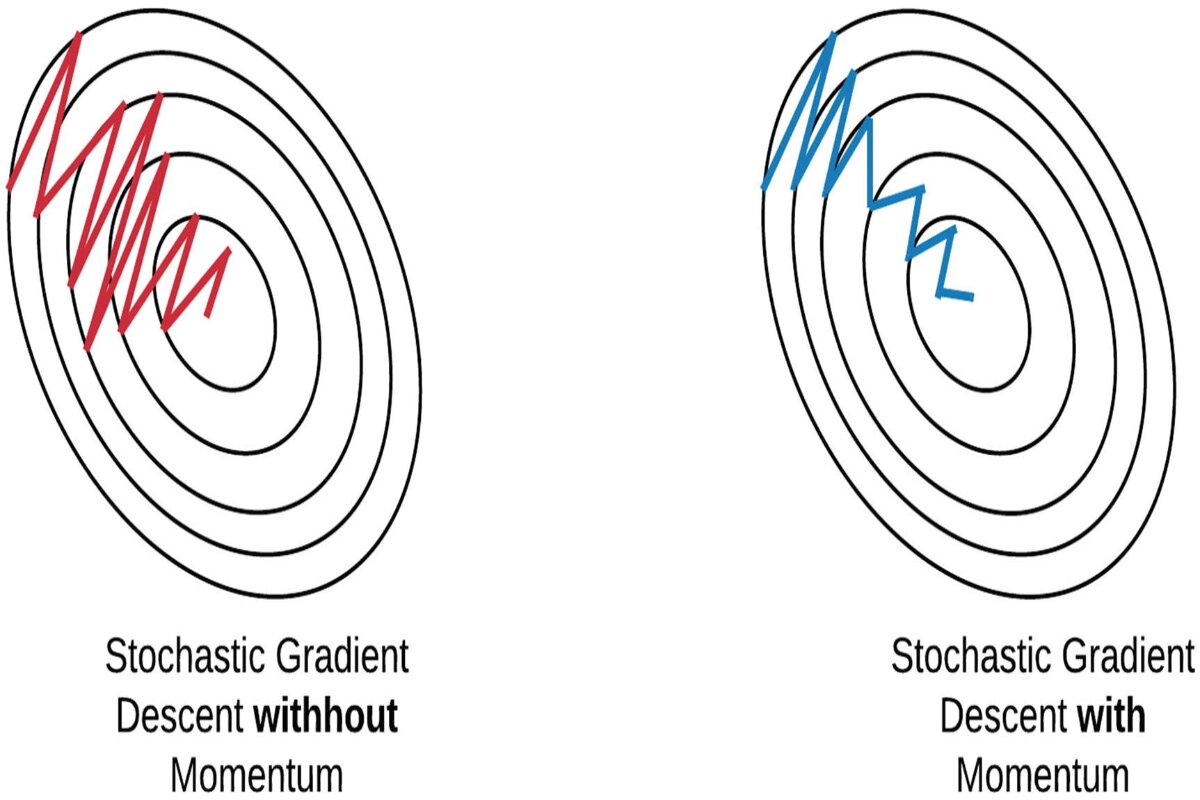

Accelerate Convergence: Mini-batch Momentum in Deep Learning

Imagine you're climbing a hill, and you want to find the quickest way to reach the top. There are different

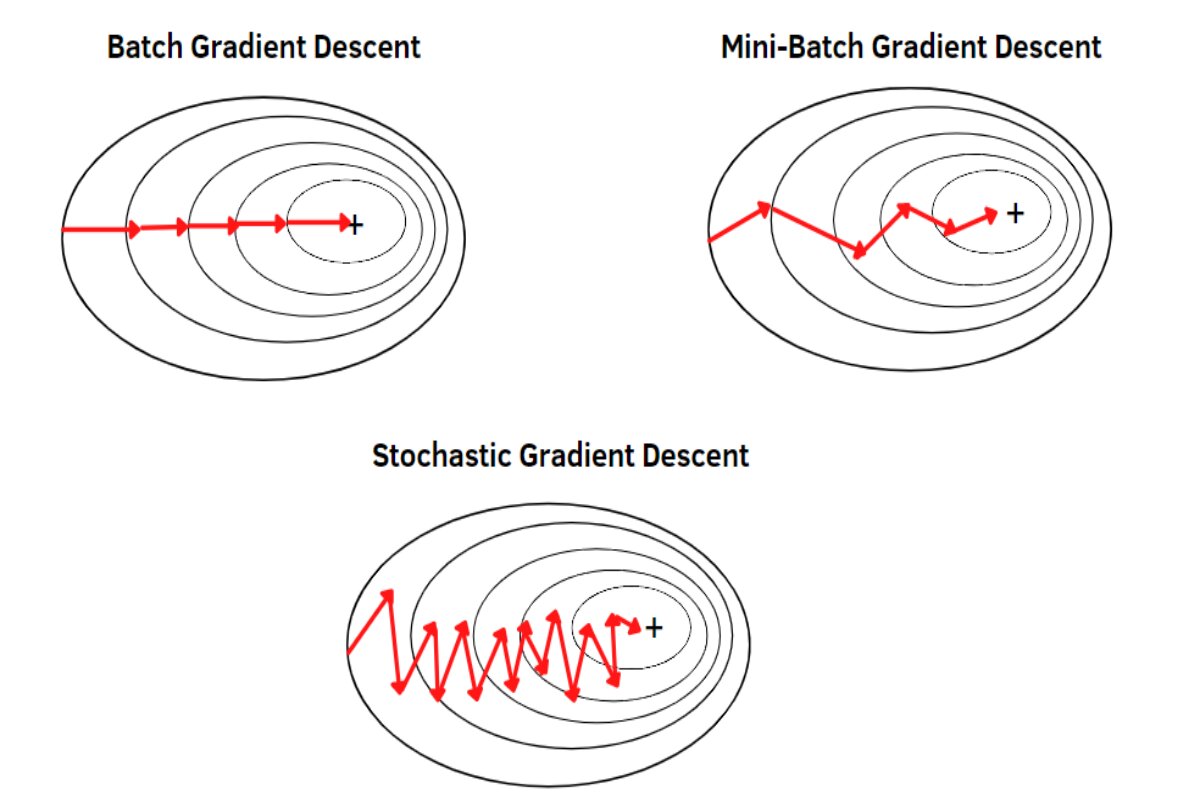

EfficientDL: Mini-batch Gradient Descent Explained

Mini-batch Gradient Descent is a compromise between Batch Gradient Descent (BGD) and Stochastic Gradient Descent (SGD). It involves updating the

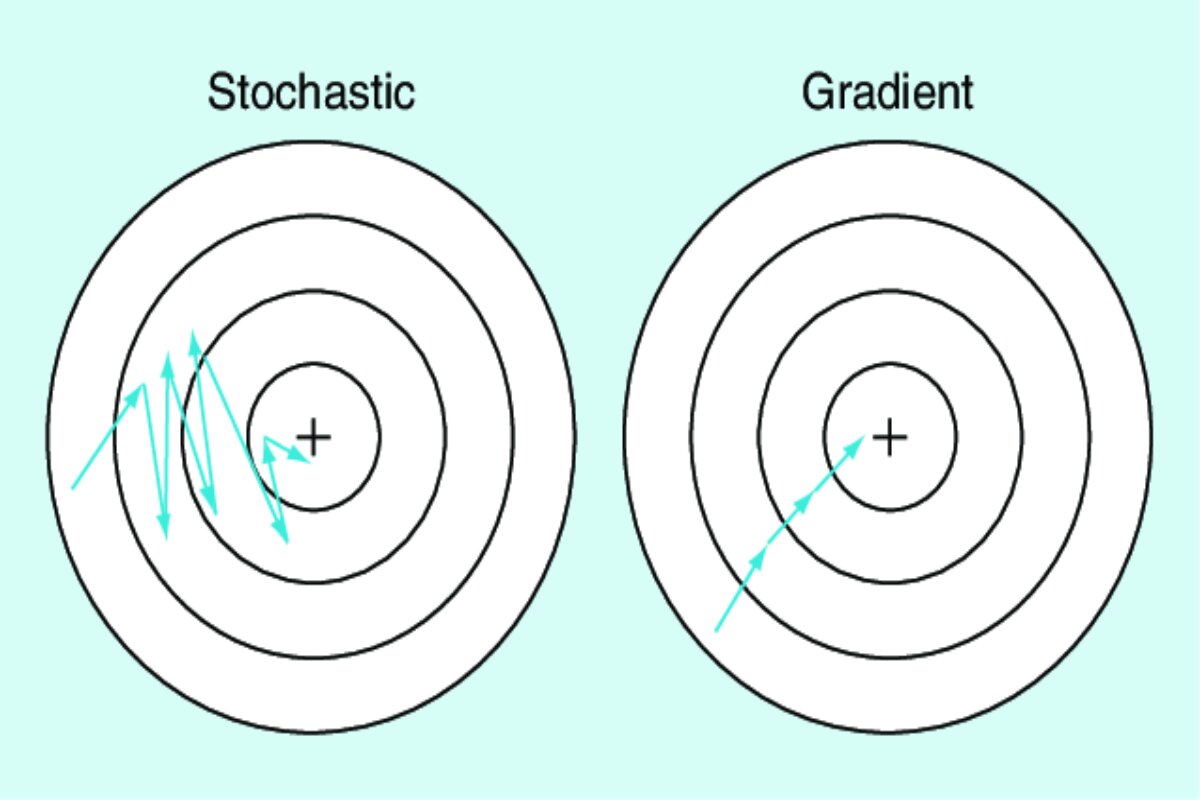

Efficient Opti: Mastering Stochastic Gradient Descent

Stochastic Gradient Descent (SGD) is a variant of the Gradient Descent optimization algorithm. While regular Gradient Descent computes the gradient