Kafka with Spring Boot using docker-compose

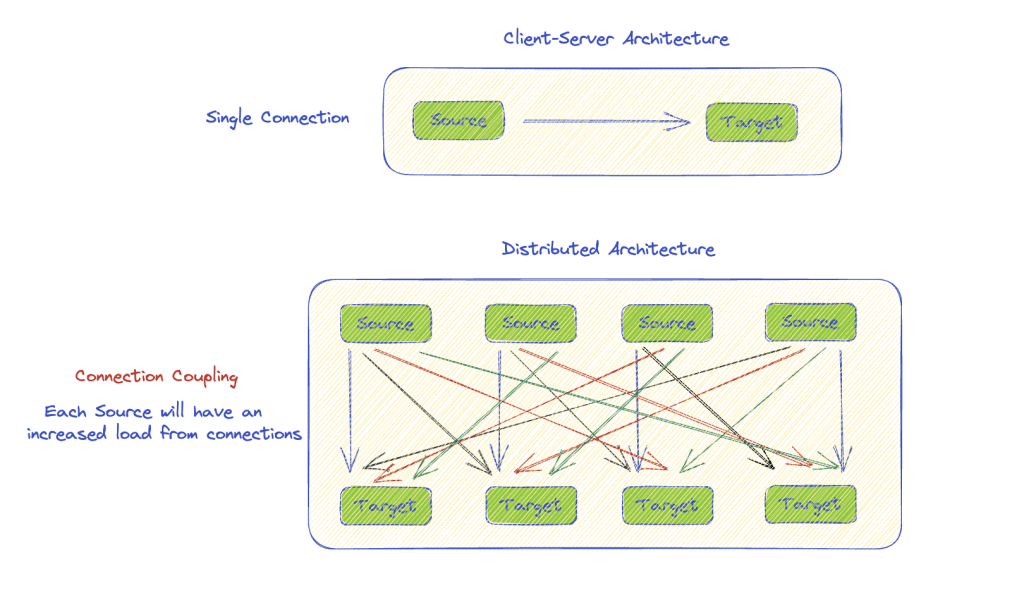

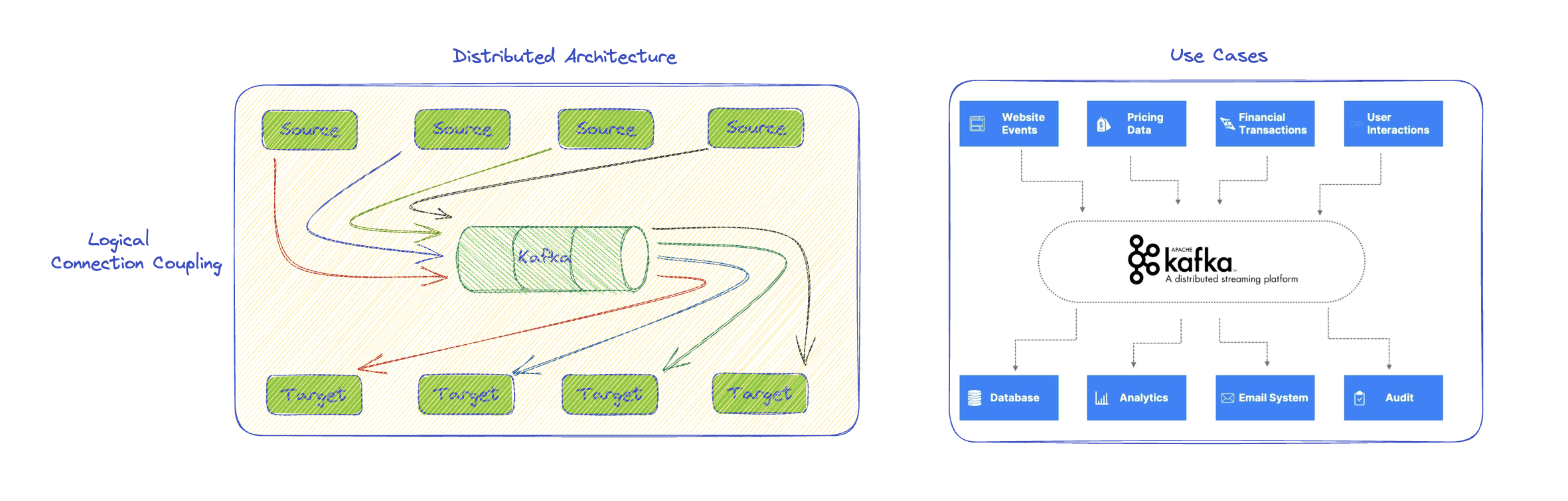

Before diving into Kafka, we should understand the problem Kafka tries to solve. In a simple client-server architecture, a source machine needs to create a connection with a target machine. An enterprise or distributed architecture, however, can have many source and target machines, so each additional source increases the connection load on the target machines.

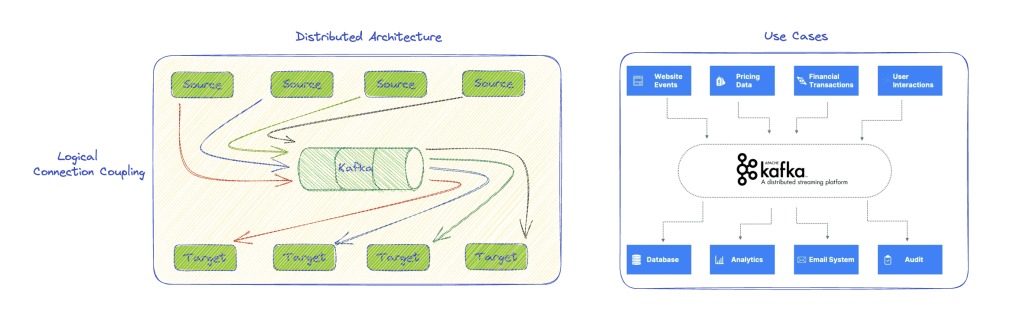

Kafka takes ownership of connection management and acts as a middleware system between the source and target systems. Instead of putting load directly on the targets, each source connects only to Kafka.
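To put rough numbers on this, here is a minimal sketch in plain Java. It assumes the worst case where every source talks to every target, and it treats the Kafka cluster as a single logical endpoint, which is a simplification. The class `ConnectionCount` and its methods are made up for illustration and are not part of Kafka.

```java
// Hypothetical helper, not part of Kafka: counts connections in each topology.
class ConnectionCount {

    // Point-to-point: every source opens a connection to every target.
    static int direct(int sources, int targets) {
        return sources * targets;
    }

    // Broker in the middle: each system holds one logical connection to Kafka.
    static int viaBroker(int sources, int targets) {
        return sources + targets;
    }

    public static void main(String[] args) {
        int sources = 4;
        int targets = 6;
        System.out.println("direct:     " + direct(sources, targets));    // 24
        System.out.println("via broker: " + viaBroker(sources, targets)); // 10
    }
}
```

The direct count grows with the product of sources and targets, while the brokered count grows with their sum, which is why the middleware approach scales better as systems are added.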

Kafka is a distributed event streaming platform.

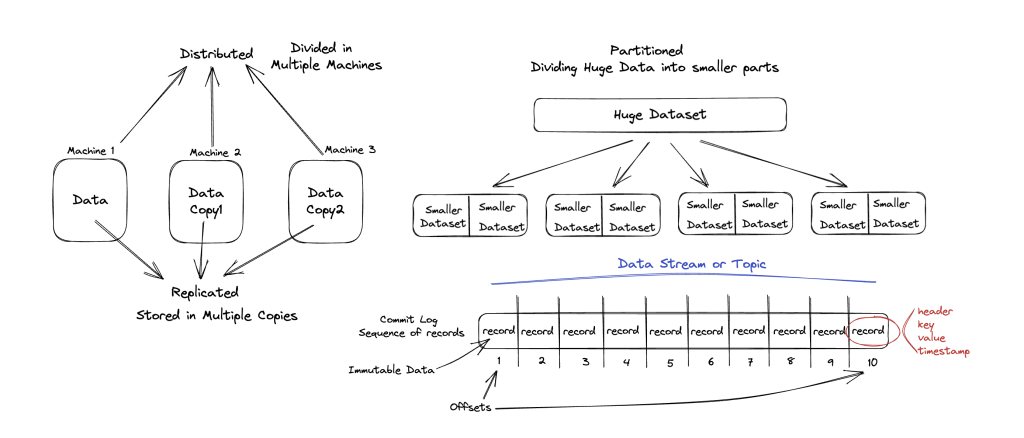

Wait, what do we mean by distributed event streaming?

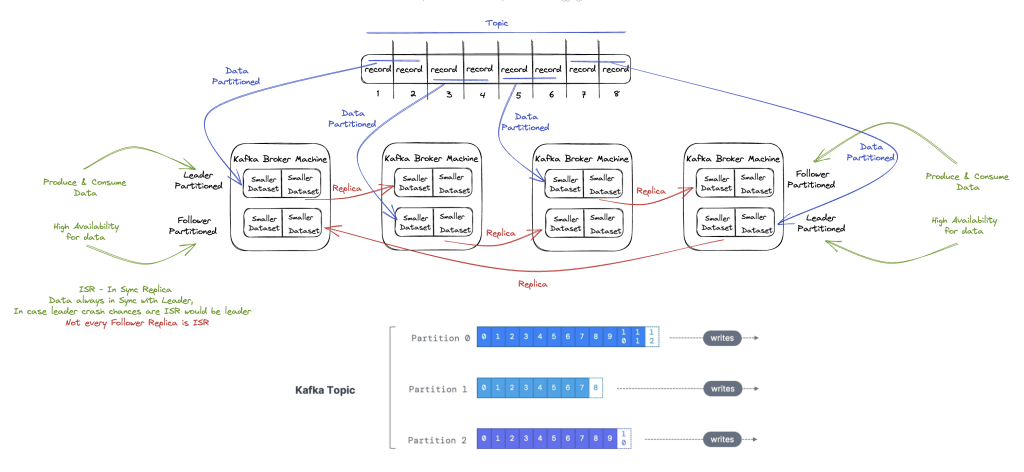

Kafka brokers (servers) distribute data across multiple machines and even keep copies (replicas) of the data on different machines, so that if one machine goes down, the data can still be read from a replica. The diagram illustrates the basic idea and should be self-explanatory.
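The same idea can be sketched in a few lines of plain Java. This is a simplified model, not Kafka's implementation: Kafka's default partitioner hashes the key bytes with murmur2, whereas `Arrays.hashCode` is used here only as a stand-in, and the round-robin replica placement is an assumption made for illustration. The class `PartitionSketch` and its methods are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Simplified model of partitioning and replication; all names are hypothetical.
class PartitionSketch {

    // Map a record key to a partition. Stand-in hash, not Kafka's murmur2.
    static int partitionFor(String key, int numPartitions) {
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        return Math.floorMod(hash, numPartitions);
    }

    // Place the replicas of a partition on consecutive brokers (round-robin).
    // The first broker in the list plays the role of the leader.
    static List<Integer> replicaBrokers(int partition, int numBrokers, int replicationFactor) {
        if (replicationFactor > numBrokers) {
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        List<Integer> brokers = new ArrayList<>();
        for (int i = 0; i < replicationFactor; i++) {
            brokers.add((partition + i) % numBrokers);
        }
        return brokers;
    }

    // Return the first replica that is not on the failed broker, or -1 if none is left.
    static int firstAvailable(List<Integer> replicas, int failedBroker) {
        for (int broker : replicas) {
            if (broker != failedBroker) {
                return broker;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int partition = partitionFor("order-42", 3);
        List<Integer> replicas = replicaBrokers(partition, 3, 2);
        int failed = replicas.get(0); // pretend the leader's machine goes down
        System.out.println("partition " + partition + " lives on brokers " + replicas);
        System.out.println("after broker " + failed + " fails, read from broker "
                + firstAvailable(replicas, failed));
    }
}
```

With a replication factor of 2, losing any single broker still leaves one copy of every partition readable.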

Summary Diagram of Kafka Terminology

Rather than writing a long blog post, I have tried to summarize the concepts visually, since our goal is to create and run a Kafka cluster with an interactive UI using Spring Boot and Docker Compose. Still, an image can help readers understand the terminology.

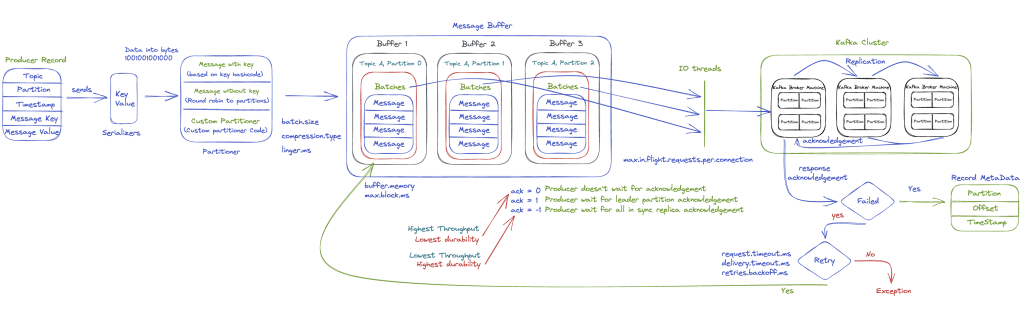

Producer-consumer communication, illustrated

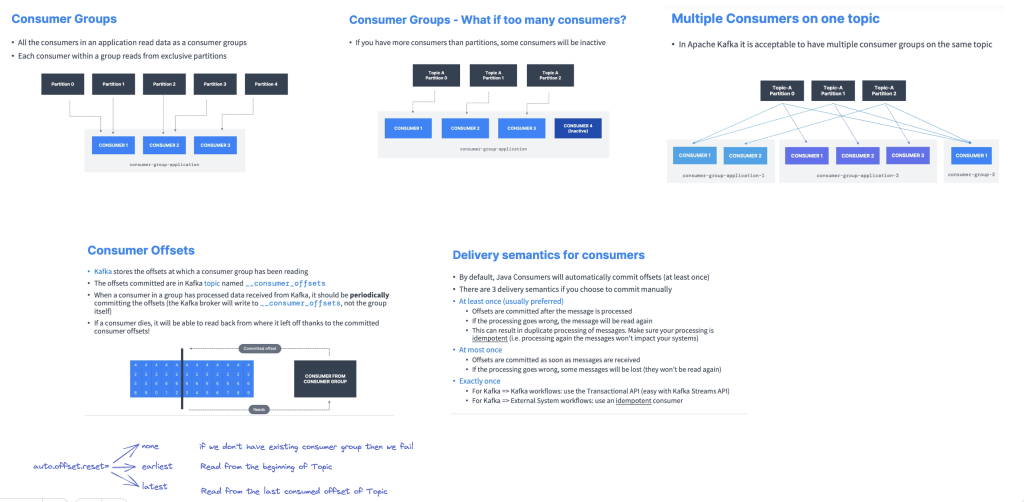

Consumer groups and offsets, illustrated
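To make consumer groups and offsets more concrete, here is a rough in-memory sketch in plain Java. It models a single partition as an append-only list whose index is the offset, and it remembers one committed offset per consumer group. It is not the Kafka client API: the class `OffsetSketch`, its methods, and the group names are made up for illustration, and a group with no committed offset is assumed to start from offset 0.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory model of one topic partition; not the Kafka client API.
class OffsetSketch {

    // Append-only records; the list index is the record's offset.
    private final List<String> log = new ArrayList<>();

    // Next offset to read, tracked separately for each consumer group.
    private final Map<String, Integer> committed = new HashMap<>();

    // Producer side: append a record and return the offset it was stored at.
    int append(String record) {
        log.add(record);
        return log.size() - 1;
    }

    // Consumer side: read up to max records for the group and advance its offset.
    List<String> poll(String groupId, int max) {
        int from = committed.getOrDefault(groupId, 0);
        int to = Math.min(log.size(), from + max);
        List<String> batch = new ArrayList<>(log.subList(from, to));
        committed.put(groupId, to);
        return batch;
    }

    int committedOffset(String groupId) {
        return committed.getOrDefault(groupId, 0);
    }

    public static void main(String[] args) {
        OffsetSketch partition = new OffsetSketch();
        partition.append("e0");
        partition.append("e1");
        partition.append("e2");

        System.out.println(partition.poll("billing", 2));   // [e0, e1]
        System.out.println(partition.poll("billing", 2));   // [e2]
        System.out.println(partition.poll("analytics", 5)); // [e0, e1, e2]
    }
}
```

Each group keeps its own position, so the "analytics" group still receives every record even though "billing" has already read them all.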

Code Base

The real issue with getting started with Kafka is its setup. To overcome this problem, just install Docker Desktop on your system and create the file below.

Create a docker-compose.yaml

    version: '3'
    services:
      zookeeper:
        image: confluentinc/cp-zookeeper:latest
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
        ports:
          - 22181:2181

      kafka:
        image: confluentinc/cp-kafka:latest
        depends_on:
          - zookeeper
        ports:
          - 29092:29092
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:9092,PLAINTEXT_HOST://localhost:29092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1

      kafka-ui:
        image: provectuslabs/kafka-ui:latest
        depends_on:
          - kafka
        ports:
          - 8090:8080
        environment:
          KAFKA_CLUSTERS_0_NAME: local
          KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9092
          KAFKA_CLUSTERS_0_ZOOKEEPER: zookeeper:2181

Start Kafka using docker compose

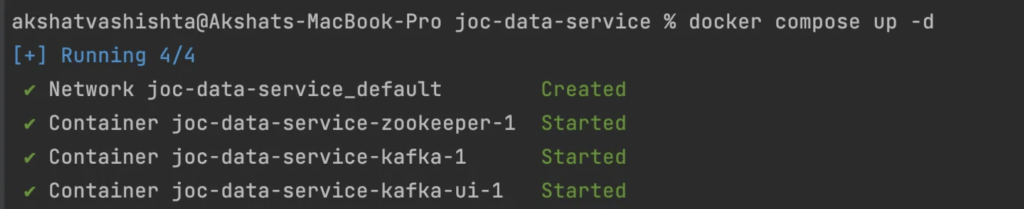

### Start Kafka using your terminal or cmd

docker compose up -d

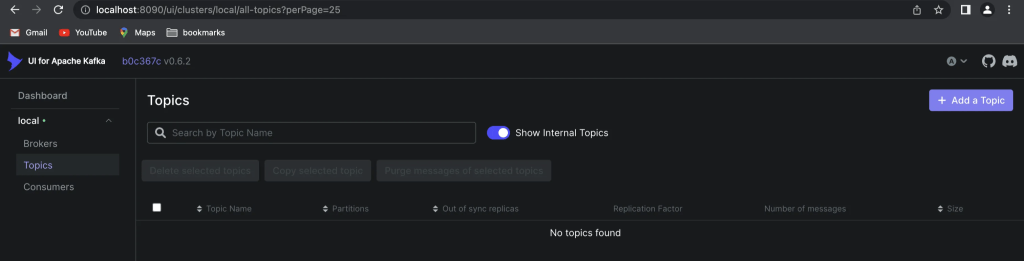

### Access Kafka UI and add a topic

http://localhost:8090/

### Stop Kafka using your terminal or cmd

docker compose down

Create a Spring Boot project with the Kafka dependency

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

Application yml configuration

    spring:
      kafka:
        consumer:
          auto-offset-reset: earliest
          bootstrap-servers: localhost:29092
          group-id: replace with your group id
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.springframework.kafka.support.serializer.JsonDeserializer
          properties:
            spring.json.trusted.packages: com.learning.events # change this to your event packages
        producer:
          acks: -1
          bootstrap-servers: localhost:29092
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.springframework.kafka.support.serializer.JsonSerializer

Producer Component

    import lombok.Data;
    import lombok.RequiredArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Component;

    @Slf4j
    @Component
    @RequiredArgsConstructor
    public class KafkaProducer {

        public static final String TOPIC = "replace with your topic name";

        // Injected through the constructor that @RequiredArgsConstructor generates
        private final KafkaTemplate<String, MyEvent> kafkaTemplate;

        public void sendFlightEvent(MyEvent event) {
            String key = event.getKey();
            // send() is asynchronous: it returns before the broker acknowledges the record
            kafkaTemplate.send(TOPIC, key, event);
            log.info("Producer sent the message {}", event);
            // write your handlers and post-processing logic, based on your use case
        }

        // Declared static because Jackson cannot deserialize non-static inner classes.
        // In a real project, move this to a top-level class in your trusted events
        // package and share it between producer and consumer.
        @Data
        public static class MyEvent {
            private String key;
            // other variables state, based on your use case
        }
    }

Consumer Component

    import lombok.Data;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    @Slf4j
    @Component
    public class KafkaConsumer {

        @KafkaListener(topics = "replace with your topic name", groupId = "replace with your group id")
        public void flightEventConsumer(MyEvent message) {
            log.info("Consumer consumed Kafka message -> {}", message);
            // write your handlers and post-processing logic, based on your use case
        }

        // Declared static because Jackson cannot deserialize non-static inner classes.
        // The JsonDeserializer must be able to map the payload to this type, so in a
        // real project use one top-level event class, inside the trusted package,
        // shared with the producer.
        @Data
        public static class MyEvent {
            private String key;
            // other variables state, based on your use case
        }
    }
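A note on the `auto-offset-reset: earliest` setting from the application configuration: it only matters when the consumer group has no committed offset yet. The following plain Java sketch models that decision. It is not Kafka's code: the class `OffsetResetSketch`, the enum, and the method are hypothetical names, and only the "no committed offset" case is modeled.

```java
// Models where a consumer group starts reading; all names are hypothetical.
class OffsetResetSketch {

    enum ResetPolicy { EARLIEST, LATEST }

    // committedOffset is null when the group has never committed an offset.
    static long startingOffset(Long committedOffset, long logStartOffset,
                               long logEndOffset, ResetPolicy policy) {
        if (committedOffset != null) {
            return committedOffset; // resume where the group left off
        }
        // No committed offset: the reset policy decides.
        return policy == ResetPolicy.EARLIEST ? logStartOffset : logEndOffset;
    }

    public static void main(String[] args) {
        // A partition currently holding offsets 0..9, so the log end offset is 10.
        System.out.println(startingOffset(null, 0, 10, ResetPolicy.EARLIEST)); // 0
        System.out.println(startingOffset(null, 0, 10, ResetPolicy.LATEST));   // 10
        System.out.println(startingOffset(7L, 0, 10, ResetPolicy.EARLIEST));   // 7
    }
}
```

With `earliest`, a brand-new group replays everything already in the topic, which is convenient for local experiments like this one. With `latest`, it would only see records produced after it joined.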

“We can also use Spring Cloud Stream with Spring Cloud Function to write the producer and consumer in a functional programming style.”

References for Spring Cloud Stream and Spring Cloud Function
