Beginner’s Guide to Machine Learning vs Deep Learning

The field of computer science known as Artificial Intelligence (AI) is concerned with developing systems that can carry out tasks

Why Is the Tech World Excited About Memristor Circuits?

Memristors are a new kind of electronic part, and circuits built from them could change how computers are designed. The word "memristor" mixes

Polyfunctional Robots: A New Era of Intelligent Automation

In the ever-evolving landscape of robotics, polyfunctional robots have emerged as a groundbreaking innovation. These robots are designed

Deep Learning: The Next Step in Machine Learning

In our previous blog, "Machine Learning 101: A Beginner’s Handbook," we laid the groundwork by explaining the fundamental concepts of

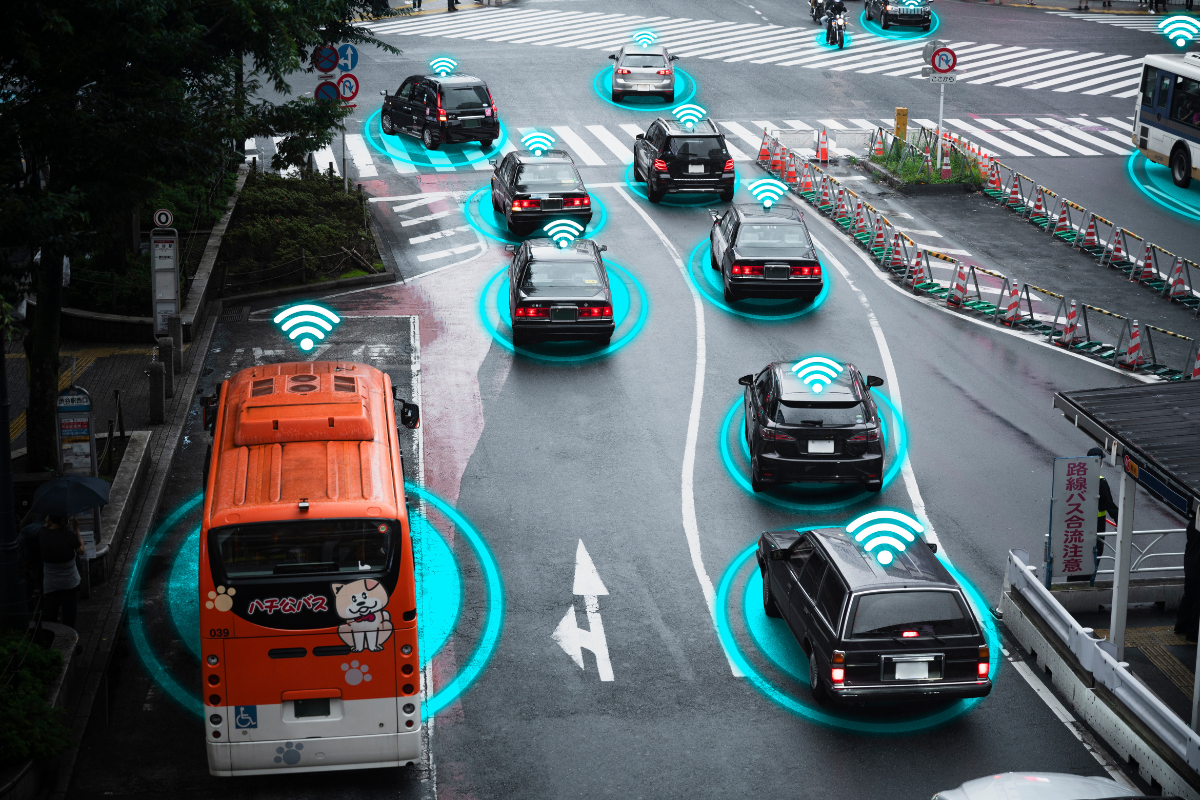

Driving into the AI Future: The Integration of AI in Automobiles

In today's rapidly evolving technological landscape, the automotive industry stands at the forefront of innovation. From electric vehicles to self-driving

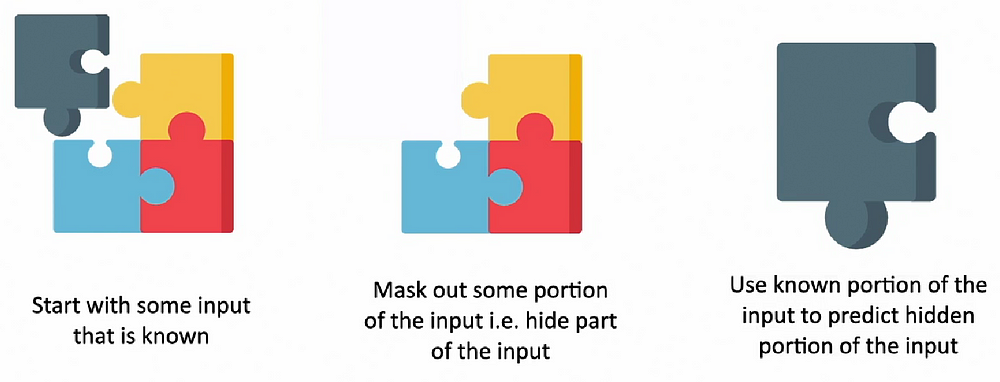

Self-Supervised Learning — A Comprehensive Introduction

Note: For more articles on Generative AI, LLMs, RAG, etc., visit the “Generative AI Series.” For such library of

New Tech ALERT: Introducing AutoGen, Revolutionizing AI Collaboration

AutoGen, a ground-breaking project by Microsoft, introduces a paradigm shift in AI collaboration. This innovative framework allows the creation and

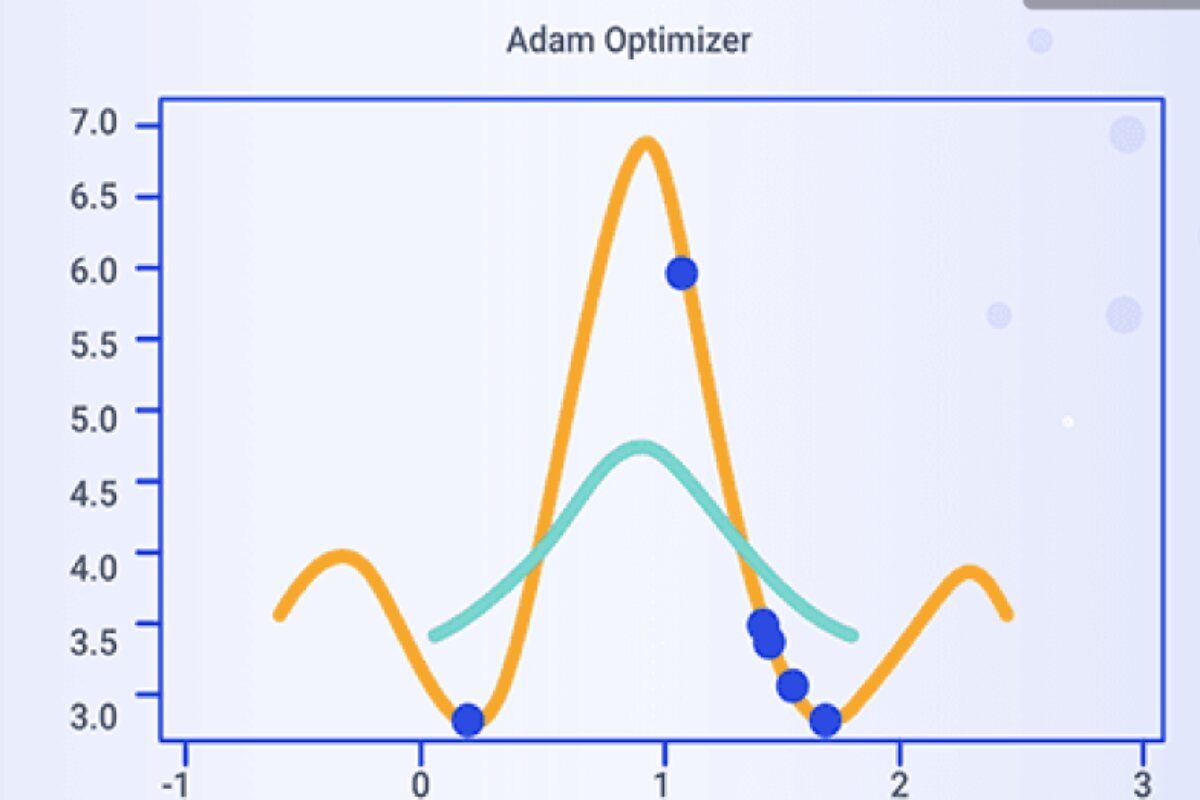

Adam: Efficient Deep Learning Optimization

Adam (Adaptive Moment Estimation) is an optimization algorithm commonly used for training machine learning models, particularly deep neural networks. It
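Adam keeps a running average of the gradient (first moment) and of the squared gradient (second moment), corrects both for their zero initialization, and scales each step by their ratio. Below is a minimal pure-Python sketch of that update on a one-variable function, assuming the commonly cited defaults (beta1=0.9, beta2=0.999, eps=1e-8). The function name `adam_minimize`, the learning rate, and the toy objective are illustrative choices, not the article's code.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function with Adam, given its gradient function `grad`.

    Hypothetical helper for illustration; hyperparameters are assumptions.
    """
    x = x0
    m = 0.0  # first-moment estimate: running mean of gradients
    v = 0.0  # second-moment estimate: running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at zero, so early estimates are too small.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Per-parameter step, scaled by the root of the second moment.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Example: f(x) = (x - 3)^2 has gradient 2(x - 3) and its minimum at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 3))
```

Deep learning frameworks apply the same update element-wise to every parameter tensor; PyTorch, for example, exposes it as `torch.optim.Adam`.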

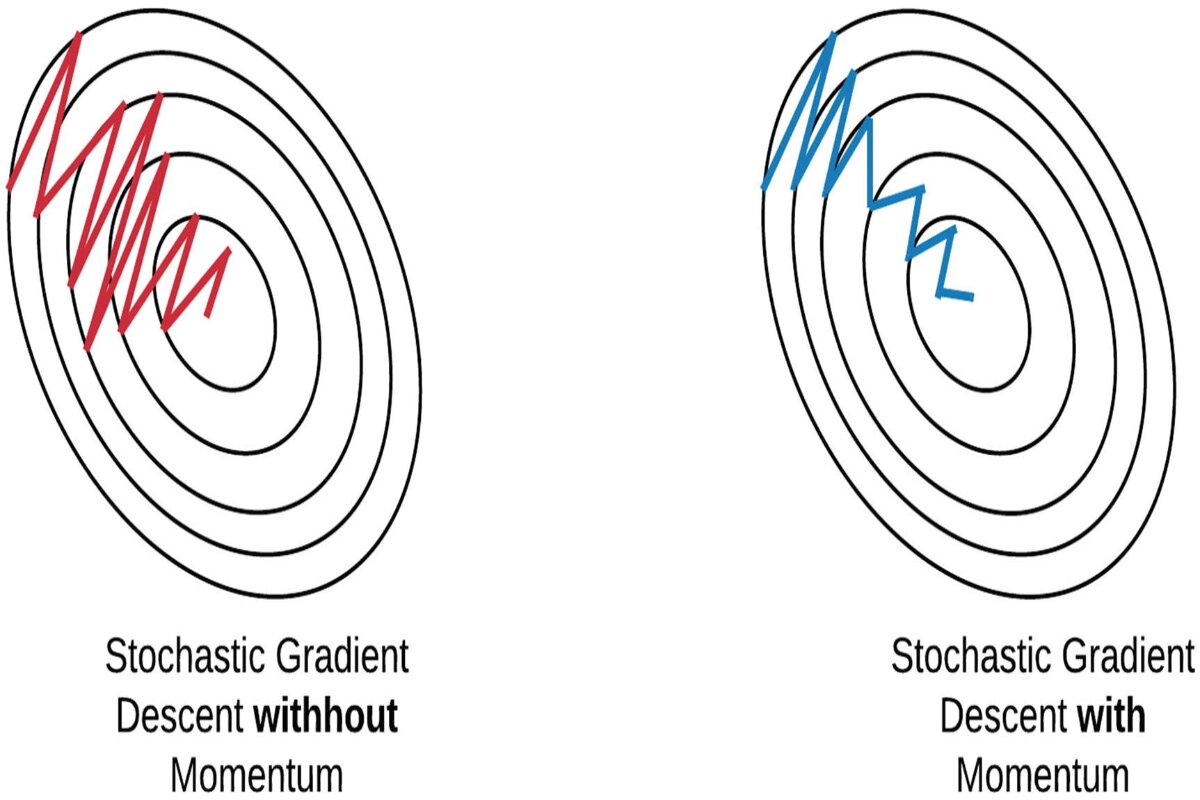

Accelerate Convergence: Mini-batch Momentum in Deep Learning

Imagine you're climbing a hill, and you want to find the quickest way to reach the top. There are different
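The momentum idea can be sketched as follows. Instead of stepping along the current gradient alone, the optimizer keeps a velocity that accumulates past gradients: v ← βv + g, then θ ← θ - ηv. Some texts scale g by (1 - β) instead. For clarity, this hypothetical example uses the full gradient of a toy elongated bowl, f(x, y) = 0.5(x² + 25y²). In mini-batch training, g would be the gradient of the current mini-batch. The function name, step size, and β values are illustrative assumptions.

```python
def steps_to_converge(lr, beta, tol=1e-3, max_steps=10_000):
    """Count iterations until the iterate is within `tol` of the minimum (0, 0).

    Minimizes f(x, y) = 0.5 * (x**2 + 25 * y**2), an elongated bowl.
    beta=0.0 is plain gradient descent; beta > 0 adds momentum.
    Hypothetical helper for illustration.
    """
    x, y = 10.0, 1.0   # starting point
    vx, vy = 0.0, 0.0  # velocity: decaying sum of past gradients
    for step in range(1, max_steps + 1):
        gx, gy = x, 25.0 * y  # gradient of f at (x, y)
        vx = beta * vx + gx   # v <- beta * v + g
        vy = beta * vy + gy
        x -= lr * vx          # theta <- theta - lr * v
        y -= lr * vy
        if (x * x + y * y) ** 0.5 < tol:
            return step
    return max_steps


plain = steps_to_converge(lr=0.035, beta=0.0)
momentum = steps_to_converge(lr=0.035, beta=0.8)
print(f"plain GD: {plain} steps, with momentum: {momentum} steps")
```

On this toy problem the shallow x-direction forces plain gradient descent into many small steps. The velocity term keeps building speed along that direction, so the momentum run reaches the tolerance in fewer iterations.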

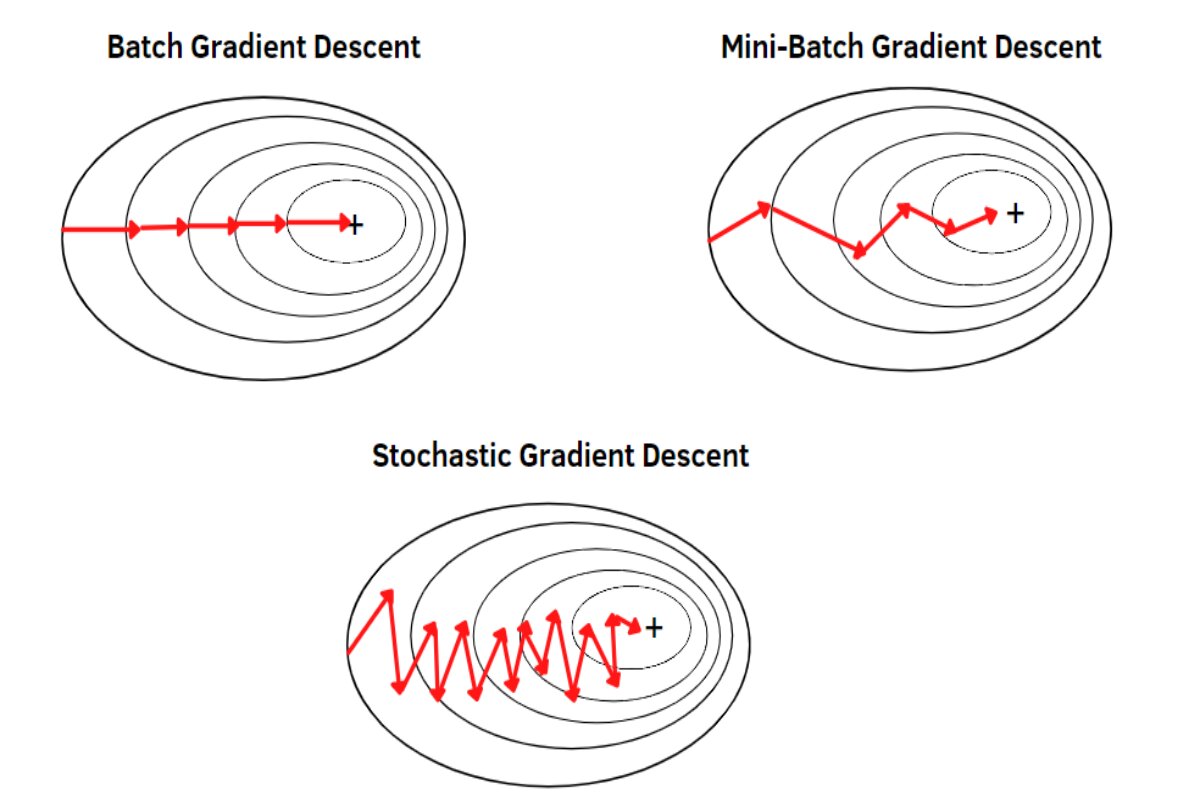

EfficientDL: Mini-batch Gradient Descent Explained

Mini-batch Gradient Descent is a compromise between Batch Gradient Descent (BGD) and Stochastic Gradient Descent (SGD). It involves updating the
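To make the compromise concrete, here is a minimal sketch that fits a line by minimizing mean squared error. Each update averages the gradient over one mini-batch rather than over one sample (SGD) or the whole dataset (BGD). Setting `batch_size=1` reduces it to SGD, and `batch_size=len(xs)` reduces it to BGD. The function `minibatch_gd`, the noiseless toy data on y = 2x + 1, and the learning rate are illustrative assumptions, not the article's code.

```python
import random


def minibatch_gd(xs, ys, batch_size, lr=0.1, epochs=200, seed=0):
    """Fit y ~ w*x + b by minimizing mean squared error with mini-batch gradient descent.

    batch_size=1 gives stochastic gradient descent (SGD);
    batch_size=len(xs) gives batch gradient descent (BGD).
    Hypothetical helper for illustration.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # reshuffle so each epoch sees different mini-batches
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Average the MSE gradient over this mini-batch only.
            errs = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            gw = sum(2 * e * x for e, x in errs) / len(batch)
            gb = sum(2 * e for e, _ in errs) / len(batch)
            w -= lr * gw
            b -= lr * gb
    return w, b


# Noiseless toy data on the line y = 2x + 1, with x in [-1, 1).
xs = [i / 50 - 1 for i in range(100)]
ys = [2 * x + 1 for x in xs]
for bs in (1, 16, len(xs)):
    w, b = minibatch_gd(xs, ys, batch_size=bs)
    print(f"batch_size={bs:3d}: w={w:.4f}, b={b:.4f}")
```

Smaller batches make more (noisier) updates per epoch, and larger batches make fewer, smoother ones. Mini-batches sit between the two and let each update be computed as one vectorized operation in practice.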