BlazingText: An Optimized Word2vec and Text Classification Algorithm

BlazingText, an Amazon SageMaker built-in algorithm, provides highly optimised implementations of the Word2vec and text classification algorithms.

The Word2vec algorithm maps each word to a high-quality distributed vector using a neural network model. The resulting vector representation of a word is called a word embedding. If two words are semantically similar, their vectors are close to each other in the n-dimensional vector space; this is how word embeddings capture the semantic relationships between words. Word2vec is useful for many NLP tasks, such as sentiment analysis, recommendation systems, named entity recognition and machine translation.
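To make "close to each other" concrete: closeness between embeddings is commonly measured with cosine similarity, the cosine of the angle between two vectors. The sketch below is plain Python, and the three-dimensional vectors are invented for illustration only (real embeddings come from training and have many more dimensions).

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_u * norm_v)

# Toy 3-dimensional embeddings, made up for illustration only.
embeddings = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.15],
    "apple": [0.05, 0.10, 0.90],
}

print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["apple"]), 3))
```

With these toy values, "king" and "queen" point in almost the same direction, while "king" and "apple" do not.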

Text classification algorithms assign categories from a pre-defined set to open-ended text. They can be used to organise and categorise almost any kind of text. Applications of text classification include web search, information retrieval, ranking and document classification.

BlazingText can therefore be used for both supervised (text classification) and unsupervised (Word2vec) learning on natural language processing (NLP) tasks.

BlazingText vs Other Word2vec Algorithms

Most existing Word2vec implementations, including the original C implementation by Mikolov et al. and fastText by Facebook, are difficult to scale to very large datasets because they are not optimized to make full use of modern hardware. Some implementations have attempted to leverage GPU parallelization, but at the cost of accuracy and scalability.

With BlazingText, we can scale to large datasets easily: a model can be trained on more than a billion words in a couple of minutes using a multi-core CPU or a GPU. It also achieves performance on par with state-of-the-art deep learning text classification algorithms.

Features of BlazingText

- BlazingText makes use of GPU acceleration with custom CUDA kernels.

- It can achieve a training speed of up to 43M words/sec on 8 GPUs, which is a 9x speedup over 8-threaded CPU implementations, with minimal effect on the quality of the embeddings.

- BlazingText is also good at generating meaningful vectors for out-of-vocabulary (OOV) words, by representing each such word's vector as the sum of its character n-gram (subword) vectors.

- We can choose either the Skip-gram or the continuous bag-of-words (CBOW) training architecture, as our use case requires (similar to other Word2vec implementations).

- In addition to Skip-gram and CBOW, BlazingText provides a third mode, batch_skipgram, which is very effective for faster training and for distributed computation across multiple CPU nodes. It creates mini-batches using the Negative Sample Sharing strategy, which converts level-1 BLAS (Basic Linear Algebra Subprograms) operations into level-3 BLAS operations. This efficiently leverages the multiply-add instructions of modern architectures.
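The subword idea from the feature list can be sketched as follows. We assume a fastText-style scheme (fastText being the library whose binaries BlazingText can exchange). Each word is wrapped in `<` and `>` boundary markers, character n-grams of length 3 to 6 are extracted, and an OOV word's vector is the sum of the vectors of its known n-grams. The n-gram vectors here are toy values. Real implementations hash n-grams into a fixed number of buckets and may average rather than sum, so treat this as an illustration, not BlazingText's internals.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """All character n-grams (min_n..max_n) of the word wrapped in '<' and '>' markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for start in range(len(wrapped) - n + 1):
            grams.append(wrapped[start:start + n])
    return grams

def oov_vector(word, subword_vectors, dim, min_n=3, max_n=6):
    """Sum the vectors of the word's known n-grams; n-grams without a vector are skipped."""
    total = [0.0] * dim
    for gram in char_ngrams(word, min_n, max_n):
        vec = subword_vectors.get(gram)
        if vec is not None:
            total = [t + x for t, x in zip(total, vec)]
    return total

# Toy subword vectors, invented for illustration.
subword_vectors = {"<ca": [0.2, 0.1], "cat": [0.5, 0.3], "ats": [0.1, 0.4]}
print(char_ngrams("cats", max_n=4))
print(oov_vector("cats", subword_vectors, dim=2))
```

Because "cats" shares n-grams such as "<ca" and "cat" with words seen during training, it still gets a non-zero vector even though the word itself is unknown.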

Input to BlazingText

BlazingText expects a single pre-processed text file with space-separated tokens, where each line contains a single sentence. Only one input file is supported: if you need to train on multiple text files, concatenate them into one file and upload that file to the appropriate channel.
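Here is a minimal sketch of preparing that single input file, assuming each source file already holds one sentence per line. The `tokenize` helper is a hypothetical, deliberately simple pre-processing step. It lower-cases the text and splits punctuation into separate tokens, mirroring the style of the training examples later in this article. Substitute whatever tokenizer suits your data.

```python
import re

def tokenize(sentence):
    """Lower-case and split punctuation into separate tokens joined by single spaces."""
    tokens = re.findall(r"[\w'-]+|[^\w\s]", sentence.lower())
    return " ".join(tokens)

def concatenate_corpus(input_paths, output_path):
    """Write every non-empty line of the input files to one file, one sentence per line."""
    written = 0
    with open(output_path, "w", encoding="utf-8") as out:
        for path in input_paths:
            with open(path, encoding="utf-8") as src:
                for line in src:
                    text = tokenize(line.strip())
                    if text:  # skip blank lines
                        out.write(text + "\n")
                        written += 1
    return written

print(tokenize("Despite all the Linux hype, the open-source movement has yet to make a splash."))
# Hypothetical file names, for illustration:
# concatenate_corpus(["part1.txt", "part2.txt"], "train.txt")
```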

For Word2Vec training, we need to upload the file under the train channel. The file should contain a training sentence per line.

For text classification (supervised) training, we can train in file mode or with the augmented manifest text format.

Model Training

File Mode

For supervised mode, the training/validation file should contain one training sentence per line, along with its labels. Labels are words prefixed with the string __label__.

Here is an example of a training/validation file:

- __label__1 linux ready for prime time , intel says , despite all the linux hype , the open-source movement has yet to make a huge splash in the desktop market . that may be about to change , thanks to chipmaking giant intel corp .

- __label__2 bowled by the slower one again , kolkata , november 14 the past caught up with sourav ganguly as the indian skippers return to international cricket was short lived .
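A small helper for producing lines in this format is sketched below. `to_supervised_line` is a hypothetical name. It accepts a single label or a list of labels, on the assumption that multi-label examples are written as several `__label__` prefixes in a row (as in fastText).

```python
def to_supervised_line(labels, text):
    """Build one training line: '__label__<label>' prefix(es) followed by the tokens."""
    if isinstance(labels, (str, int)):
        labels = [labels]
    prefix = " ".join(f"__label__{label}" for label in labels)
    tokens = " ".join(text.split())  # collapse runs of whitespace to single spaces
    return f"{prefix} {tokens}"

examples = [
    (1, "linux ready for prime time , intel says"),
    (2, "bowled by the slower one again , kolkata"),
]
for label, text in examples:
    print(to_supervised_line(label, text))
```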

Augmented Manifest Text Format

The supervised mode also supports the augmented manifest format, which enables you to train in pipe mode without needing to create RecordIO files. When using this format, you need to generate an S3 manifest file that contains the list of sentences and their corresponding labels, one JSON object per line:

- {"source": "linux ready for prime time , intel says , despite all the linux hype", "label": 1}

- {"source": "bowled by the slower one again , kolkata , november 14 the past caught up with sourav ganguly", "label": 2}
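The next sketch generates the manifest as JSON Lines with Python's standard `json` module. The `source` and `label` key names follow the example above. `to_manifest_lines` is a hypothetical helper, and uploading the result to S3 is left out.

```python
import json

def to_manifest_lines(records):
    """Serialize (sentence, label) pairs as JSON Lines with 'source' and 'label' keys."""
    return "".join(
        json.dumps({"source": sentence, "label": label}) + "\n"
        for sentence, label in records
    )

manifest = to_manifest_lines([
    ("linux ready for prime time , intel says", 1),
    ("bowled by the slower one again , kolkata", 2),
])
print(manifest, end="")
```

Using `json.dumps` rather than hand-built strings guarantees straight quotes and proper escaping, so every line parses as valid JSON.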

Model Artifacts and Inference

Text Classification Algorithm

Training with supervised outputs creates a model.bin file that can be consumed by BlazingText hosting. For inference, the BlazingText model accepts a JSON file containing a list of sentences and returns a list of corresponding predicted labels and probability scores. Each sentence is expected to be a string with space-separated tokens, words, or both.

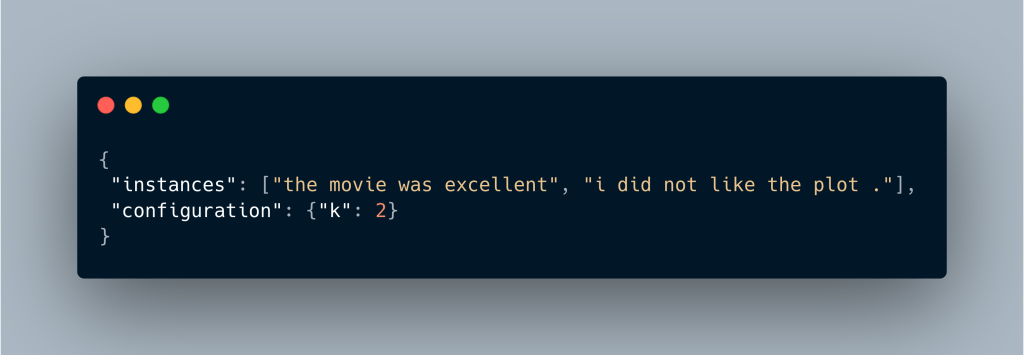

Sample JSON Request

Mime-type: application/json

By default, the server returns only one prediction, the one with the highest probability. To retrieve the top k predictions, set k in the configuration of the request.
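A request body can be built, and the response read, with a couple of helpers. This sketch assumes two shapes. The request is `{"instances": [...], "configuration": {"k": k}}`. The response is a list of objects with parallel `label` and `prob` lists, one object per sentence. Both helper names are hypothetical. The body would be sent with something like boto3's `sagemaker-runtime` client `invoke_endpoint` using `ContentType="application/json"` (not shown).

```python
import json

def build_classification_request(sentences, k=1):
    """JSON body for a text-classification endpoint; 'configuration.k' asks for top-k labels."""
    body = {"instances": list(sentences)}
    if k > 1:
        body["configuration"] = {"k": k}
    return json.dumps(body)

def top_labels(response_body):
    """Turn each per-sentence prediction into a list of (label, probability) pairs."""
    return [list(zip(p["label"], p["prob"])) for p in json.loads(response_body)]

request = build_classification_request(["linux ready for prime time , intel says"], k=2)

# Simulated response, for illustration only (not real endpoint output).
fake_response = json.dumps([{"label": ["__label__1", "__label__2"], "prob": [0.9, 0.05]}])
print(top_labels(fake_response))
```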

Word2vec Algorithm

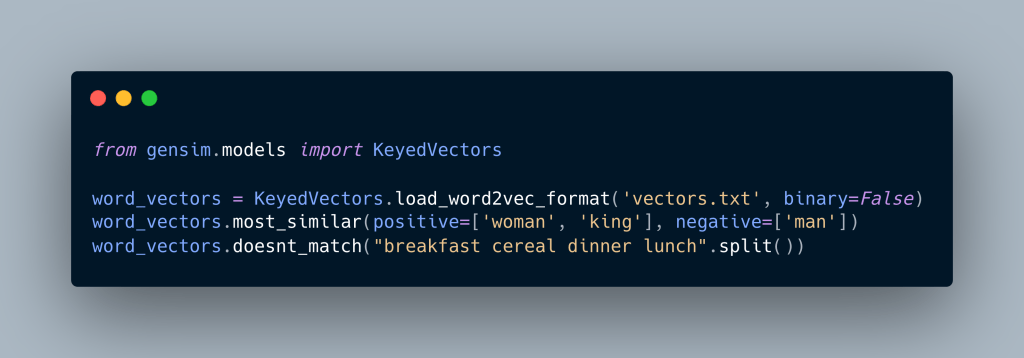

For Word2vec training, the model artifacts consist of vectors.txt, which contains the words-to-vectors mapping, and vectors.bin, a binary used by BlazingText for hosting, inference or both. vectors.txt stores the vectors in a format that is compatible with other tools such as Gensim and spaCy.
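The following is a minimal loader for vectors.txt. It assumes the usual word2vec text layout: an optional header line with the vocabulary size and vector dimension, followed by one `word v1 v2 ...` line per word. `load_vectors` is a hypothetical helper. With Gensim installed, `KeyedVectors.load_word2vec_format("vectors.txt", binary=False)` is the usual alternative.

```python
def load_vectors(lines):
    """Parse word2vec text format into {word: [floats]}, skipping an optional header line."""
    vectors = {}
    for i, line in enumerate(lines):
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        if i == 0 and len(parts) == 2 and all(p.isdigit() for p in parts):
            continue  # header: "<vocabulary size> <vector dimension>"
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors

# Typical use with the model artifact described above:
# with open("vectors.txt", encoding="utf-8") as f:
#     vectors = load_vectors(f)
sample = ["2 3", "king 0.1 0.2 0.3", "queen 0.2 0.1 0.0"]
print(sorted(load_vectors(sample)))
```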

If the evaluation parameter is set to True, an additional file, eval.json, is created. This file contains the similarity evaluation results (using Spearman’s rank correlation coefficient) on the WS-353 dataset. The number of words from the WS-353 dataset that are not in the training corpus is also reported.
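Spearman's rho is the Pearson correlation between the ranks of two score lists. For word-similarity evaluation, one list is typically the human similarity scores for the word pairs in the benchmark. The other is the model's cosine similarity for the same pairs. Pairs containing unknown words are skipped, which is why the missing-word count matters. This is a sketch of the standard computation, with average ranks for ties; it is not BlazingText's own evaluation code, and the scores are toy values.

```python
def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when all values in a list are equal")
    return cov / (var_x * var_y) ** 0.5

# Toy data: human scores vs. model cosine similarities for four word pairs.
human_scores = [9.0, 7.5, 3.1, 1.0]
model_scores = [0.82, 0.64, 0.70, 0.05]
print(round(spearman_rho(human_scores, model_scores), 3))
```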

For inference requests, the model accepts a JSON file containing a list of strings and returns a list of vectors. If a word is not found in the vocabulary, inference returns a vector of zeros. If subwords is set to True during training, the model can instead generate vectors for out-of-vocabulary (OOV) words.

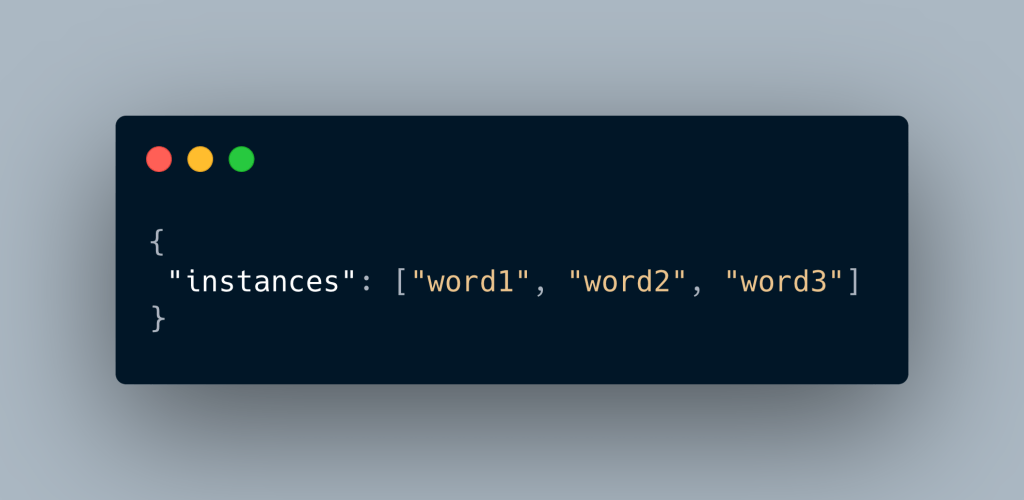

Sample JSON Request

Mime-type: application/json

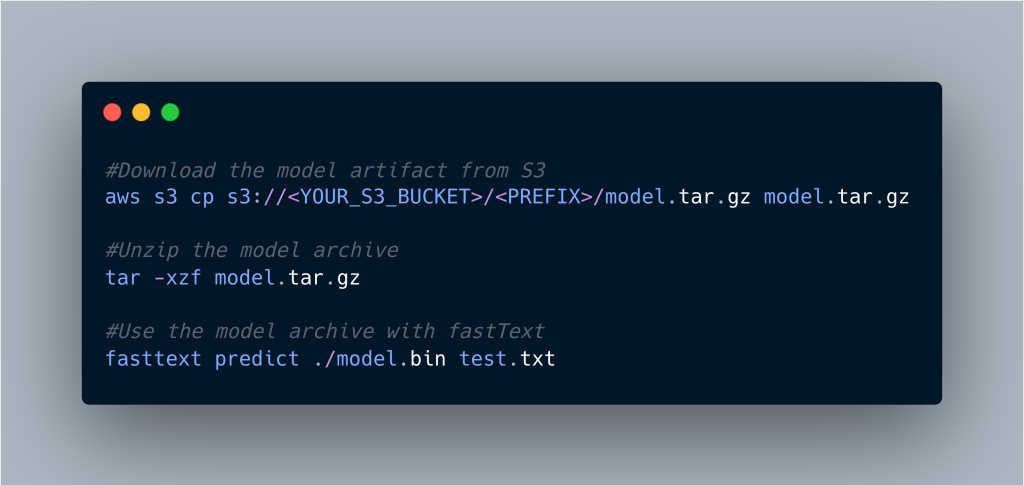
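The next sketch builds a Word2vec request and separates known words from zero-vector (unknown) results. It assumes a request shape of `{"instances": [...]}` and a response that is a list of `{"word": ..., "vector": [...]}` objects. The helper names are hypothetical. An all-zero vector is only a reliable OOV signal when the model was trained without subwords.

```python
import json

def build_word2vec_request(words):
    """JSON body asking the endpoint for the vector of each word."""
    return json.dumps({"instances": list(words)})

def split_known_and_oov(response_body):
    """Return ({word: vector} for known words, [words that came back as all-zero vectors])."""
    known, oov = {}, []
    for item in json.loads(response_body):
        if any(value != 0 for value in item["vector"]):
            known[item["word"]] = item["vector"]
        else:
            oov.append(item["word"])  # zero vector: word not in the training vocabulary
    return known, oov

# Simulated response, for illustration only (not real endpoint output).
fake_response = json.dumps([
    {"word": "awesome", "vector": [0.12, -0.4, 0.33]},
    {"word": "blazingtextxyz", "vector": [0.0, 0.0, 0.0]},
])
known, oov = split_known_and_oov(fake_response)
print(sorted(known), oov)
```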

For both supervised (text classification) and unsupervised (Word2vec) modes, the binaries (*.bin) produced by BlazingText can be cross-consumed by fastText and vice versa: you can load BlazingText binaries with fastText, and you can host model binaries created with fastText using BlazingText.

Metrics generated by BlazingText

The BlazingText Word2vec algorithm (skipgram, cbow and batch_skipgram modes) reports a single metric during training: train:mean_rho (Spearman’s rank correlation coefficient). This metric is computed on the WS-353 word similarity dataset. When tuning the hyperparameter values for the Word2vec algorithm, use this metric as the objective.

The BlazingText text classification algorithm (supervised mode) also reports a single metric during training: validation:accuracy. When tuning the hyperparameter values for the text classification algorithm, use this metric as the objective.
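When setting up a tuning job, the objective follows directly from the training mode. The tiny helper below (a hypothetical name) maps a BlazingText mode to the corresponding metric and to the direction to optimize; higher is better for both metrics. The resulting pair could be passed as `objective_metric_name` and `objective_type` to the SageMaker Python SDK's `HyperparameterTuner`.

```python
WORD2VEC_MODES = {"skipgram", "cbow", "batch_skipgram"}

def tuning_objective(mode):
    """Map a BlazingText training mode to (objective metric name, optimization direction)."""
    if mode == "supervised":
        return "validation:accuracy", "Maximize"  # text classification
    if mode in WORD2VEC_MODES:
        return "train:mean_rho", "Maximize"  # Word2vec: Spearman's rho on WS-353
    raise ValueError(f"unknown BlazingText mode: {mode!r}")

for mode in ("supervised", "batch_skipgram"):
    print(mode, tuning_objective(mode))
```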

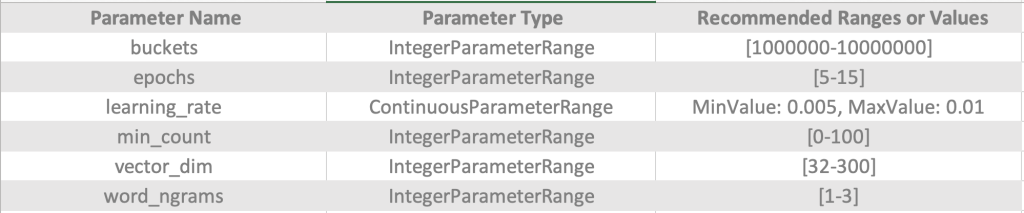

Hyperparameter Tuning for the Text Classification Algorithm

We can tune an Amazon SageMaker BlazingText text classification model with the following hyperparameters.

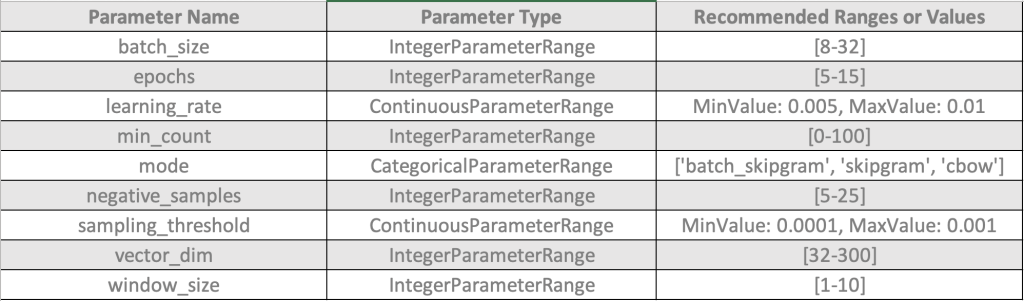

Hyperparameter Tuning for the Word2vec Algorithm

We can tune an Amazon SageMaker BlazingText Word2Vec model with the following hyperparameters. The hyperparameters that have the greatest impact on Word2Vec objective metrics are: mode, learning_rate, window_size, vector_dim, and negative_samples.

We should run the hyperparameter tuning on a sample of the dataset.

In some use cases, mean_rho might not be the right metric for judging Word2vec model performance, so it is better to measure performance on our own test dataset. We can load each model generated during hyperparameter tuning from its S3 location into fastText locally, check how it performs on our dataset, and identify which combination of hyperparameters works best for our use case.
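The comparison step can be sketched as "score every candidate on our own data, then sort". Everything here is a hypothetical stand-in. Each model is represented as a plain `{word: vector}` mapping. The score is the share of hand-labelled word pairs whose similarity (cosine at or above an assumed 0.5 threshold) matches the label. In practice, `fasttext.load_model(path)` returns a model whose `get_word_vector(word)` method could back such a lookup, and the score function should reflect the real downstream task.

```python
import math

def cosine(u, v):
    """Cosine similarity; 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def pair_agreement(model, labeled_pairs, threshold=0.5):
    """Hypothetical score: share of (word1, word2, should_be_similar) pairs the model gets right."""
    correct = 0
    for w1, w2, should_be_similar in labeled_pairs:
        u, v = model.get(w1), model.get(w2)
        similar = u is not None and v is not None and cosine(u, v) >= threshold
        if similar == should_be_similar:
            correct += 1
    return correct / len(labeled_pairs)

def rank_models(models, score_fn, test_data):
    """Score every candidate model on our own test data; return (name, score) pairs, best first."""
    scored = [(name, score_fn(model, test_data)) for name, model in models.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Toy candidates standing in for models from two tuning jobs (invented vectors).
models = {
    "job-1": {"car": [1.0, 0.0], "auto": [0.9, 0.1], "banana": [0.0, 1.0]},
    "job-2": {"car": [1.0, 0.0], "auto": [0.0, 1.0], "banana": [1.0, 0.0]},
}
pairs = [("car", "auto", True), ("car", "banana", False)]
print(rank_models(models, pair_agreement, pairs))
```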

Implementation References

For an implementation of text classification, see this notebook: https://github.com/aws/amazon-sagemaker-examples/blob/main/introduction_to_amazon_algorithms/blazingtext_text_classification_dbpedia/blazingtext_text_classification_dbpedia.ipynb

For an implementation of Word2vec, see this notebook: https://github.com/aws/amazon-sagemaker-examples/blob/main/introduction_to_amazon_algorithms/blazingtext_word2vec_text8/blazingtext_word2vec_text8.ipynb

References

https://docs.aws.amazon.com/sagemaker/latest/dg/blazingtext.html

https://docs.aws.amazon.com/sagemaker/latest/dg/blazingtext-tuning.html
