What Is a Delta Table?

Delta Lake is an open-source storage layer that brings reliability, performance, and scalability to data lakes, and it is most commonly used with Apache Spark. It is designed to make data lake workflows more robust. In this post, we explore Delta Tables in PySpark, the Python API for Apache Spark, and look at their key features, benefits, and use cases.

What are Delta Tables?

Delta Tables are tables stored in the Delta Lake format: the data lives in Apache Parquet files, and a transaction log kept alongside them records every change to the table. That log is what gives Delta Lake its enhancements over plain Parquet files, including transactions, schema evolution, and time travel. Delta Tables allow you to create, read, update, and delete data in a transactionally consistent manner, which makes them well suited to building scalable data pipelines and managing large datasets.
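One way to picture this: a Delta table keeps an ordered log of commits next to its Parquet files, and the table's contents at any version are whatever files the log says are live at that point. The following is a toy model in plain Python, not Delta Lake's actual code. It assumes each commit is just a list of `add` and `remove` actions (the real log stores richer JSON entries), and the function name `files_at_version` and the file names are made up for illustration.

```python
def files_at_version(commits, version):
    """Replay commits 0..version and return the set of live data files."""
    live = set()
    for actions in commits[: version + 1]:
        for op, path in actions:
            if op == "add":
                live.add(path)
            elif op == "remove":
                live.discard(path)
    return live


# Hypothetical history: an initial write, an append, then a rewrite of part-0.
commits = [
    [("add", "part-0.parquet")],
    [("add", "part-1.parquet")],
    [("remove", "part-0.parquet"), ("add", "part-2.parquet")],
]

print(sorted(files_at_version(commits, 1)))  # ['part-0.parquet', 'part-1.parquet']
print(sorted(files_at_version(commits, 2)))  # ['part-1.parquet', 'part-2.parquet']
```

Because older commits are never edited, replaying a shorter prefix of the log yields an older view of the table, which is the idea behind time travel.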

Key Features of Delta Tables

ACID Transactions: Delta Tables support ACID (Atomicity, Consistency, Isolation, Durability) transactions, so each data modification is applied in an all-or-nothing manner. This makes Delta Tables suitable for concurrent writes from multiple users or applications while preserving data integrity and consistency.
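Delta Lake coordinates concurrent writers with optimistic concurrency control: each writer works from a snapshot of the table, and its commit succeeds only if it does not conflict with commits made in the meantime. The sketch below is a deliberately simplified toy in plain Python, not Delta Lake's implementation. The class `ToyLog` and its `try_commit` method are hypothetical names, and unlike the real protocol, which can accept concurrent commits that do not conflict, this toy rejects any commit made from a stale snapshot.

```python
class ToyLog:
    """A toy commit log; the latest version is the index of the last commit."""

    def __init__(self):
        self.commits = []

    @property
    def version(self):
        return len(self.commits) - 1  # -1 means the table is still empty

    def try_commit(self, read_version, actions):
        """Commit only if nobody else has committed since read_version."""
        if read_version != self.version:
            return False  # stale snapshot: the caller must re-read and retry
        self.commits.append(actions)
        return True


log = ToyLog()
snapshot = log.version  # two writers start from the same snapshot

first = log.try_commit(snapshot, ["add a.parquet"])       # succeeds
second = log.try_commit(snapshot, ["add b.parquet"])      # rejected, snapshot is stale
retry = log.try_commit(log.version, ["add b.parquet"])    # succeeds after re-reading

print(first, second, retry, log.version)  # True False True 1
```

Either a commit lands in the log as a whole or it does not land at all, which is what gives readers an all-or-nothing view of each write.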

Schema Evolution: Delta Tables support schema evolution, allowing the schema of your data to change over time without losing existing data. You can add columns as requirements change and, depending on the Delta Lake version and table settings, rename or drop them, which keeps the table adaptable to changing business requirements.
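By default Delta Lake enforces the table schema and rejects a write whose DataFrame has columns the table lacks; setting the `mergeSchema` write option to true lets such a write add the new columns instead. The following plain-Python sketch mimics only that one rule. The function `resolve_schema` and the dictionary representation of a schema are assumptions made for illustration, and real Delta Lake performs further checks (for example on data types) that are omitted here.

```python
def resolve_schema(table_schema, incoming_schema, merge_schema=False):
    """Return the schema after a write, or raise if new columns are not allowed."""
    new_columns = {
        name: dtype
        for name, dtype in incoming_schema.items()
        if name not in table_schema
    }
    if new_columns and not merge_schema:
        raise ValueError(f"unexpected columns: {sorted(new_columns)}")
    # Existing columns keep their position; new columns are appended at the end.
    return {**table_schema, **new_columns}


table_schema = {"name": "string", "age": "int"}
incoming_schema = {"name": "string", "age": "int", "salary": "double"}

# Without merging, the extra column is rejected.
try:
    resolve_schema(table_schema, incoming_schema)
    rejected = False
except ValueError:
    rejected = True

# With merging, the table gains the new column.
evolved = resolve_schema(table_schema, incoming_schema, merge_schema=True)
print(rejected, list(evolved))  # True ['name', 'age', 'salary']
```

Rows written before the new column existed simply have no value for it, so they read back as null in that column.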

Time Travel: Delta Tables provide a time travel feature that allows you to access previous versions of your data, making it easy to perform historical analysis and recover data from previous states. You can query a Delta Table as it existed at a specific point in time, enabling you to analyze changes and trends over time.

Optimized Data Management: Delta Tables improve query performance through techniques such as Z-ordering, which co-locates related values in the same files, and data skipping, which uses per-file statistics to avoid reading files that cannot match a query. Delta Lake also provides file compaction, which helps keep data storage and retrieval efficient.
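Data skipping works because Delta Lake records column statistics such as minimum and maximum values for each data file, so a query with a filter can ignore files whose value range cannot match. Here is a minimal plain-Python sketch of that idea for a filter of the form `column >= lower_bound`. The function `files_to_scan`, the statistics layout, and the file names are hypothetical and much simpler than what Delta Lake stores.

```python
def files_to_scan(file_stats, column, lower_bound):
    """Return files whose max value for column can satisfy column >= lower_bound."""
    return [
        path
        for path, stats in file_stats.items()
        if stats[column]["max"] >= lower_bound
    ]


# Hypothetical per-file statistics for an "age" column.
file_stats = {
    "part-0.parquet": {"age": {"min": 1, "max": 17}},
    "part-1.parquet": {"age": {"min": 18, "max": 40}},
    "part-2.parquet": {"age": {"min": 12, "max": 65}},
}

print(files_to_scan(file_stats, "age", 18))  # ['part-1.parquet', 'part-2.parquet']
```

The first file is never opened because its largest age is 17. Z-ordering helps here by clustering similar values into the same files, which makes the per-file ranges narrower and lets more files be skipped.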

Data Lifecycle Management: Delta Tables provide features for data lifecycle management, including data retention settings and cleanup of data files that are no longer needed, making it easier to manage and control the lifecycle of your data in a Delta Lake.
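In Delta Lake, cleanup is performed by the VACUUM command, which deletes data files that the current table version no longer references and that are older than a retention threshold (7 days by default). The plain-Python sketch below illustrates that selection rule only. The function `vacuum_candidates`, the use of a last-modified time per file, and the sample dates are assumptions for illustration, not Delta Lake's implementation.

```python
from datetime import datetime, timedelta


def vacuum_candidates(file_times, live_files, now, retention=timedelta(days=7)):
    """Return unreferenced files last modified before the retention cutoff."""
    cutoff = now - retention
    return sorted(
        path
        for path, modified in file_times.items()
        if path not in live_files and modified < cutoff
    )


now = datetime(2024, 1, 31)
file_times = {
    "part-0.parquet": datetime(2024, 1, 1),   # replaced long ago
    "part-1.parquet": datetime(2024, 1, 29),  # replaced two days ago
    "part-2.parquet": datetime(2024, 1, 29),  # still part of the table
}
live_files = {"part-2.parquet"}

to_delete = vacuum_candidates(file_times, live_files, now)
print(to_delete)  # ['part-0.parquet']
```

The retention window is a trade-off: once old files are deleted, versions of the table that depended on them can no longer be read with time travel.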

Benefits of Delta Tables

Reliability: ACID transactions and time travel protect data integrity and consistency, making Delta Tables reliable for mission-critical data workloads.

Scalability: Delta Tables are designed to scale horizontally, making them suitable for processing large datasets in distributed computing environments like Apache Spark.

Performance: Delta Tables optimize data storage and retrieval, enabling faster query performance and reduced data scan times, resulting in improved overall performance of data processing pipelines.

Flexibility: Delta Tables support schema evolution, so you can change your data schema without losing existing data, which keeps them adaptable to changing business requirements.

Use Cases of Delta Tables

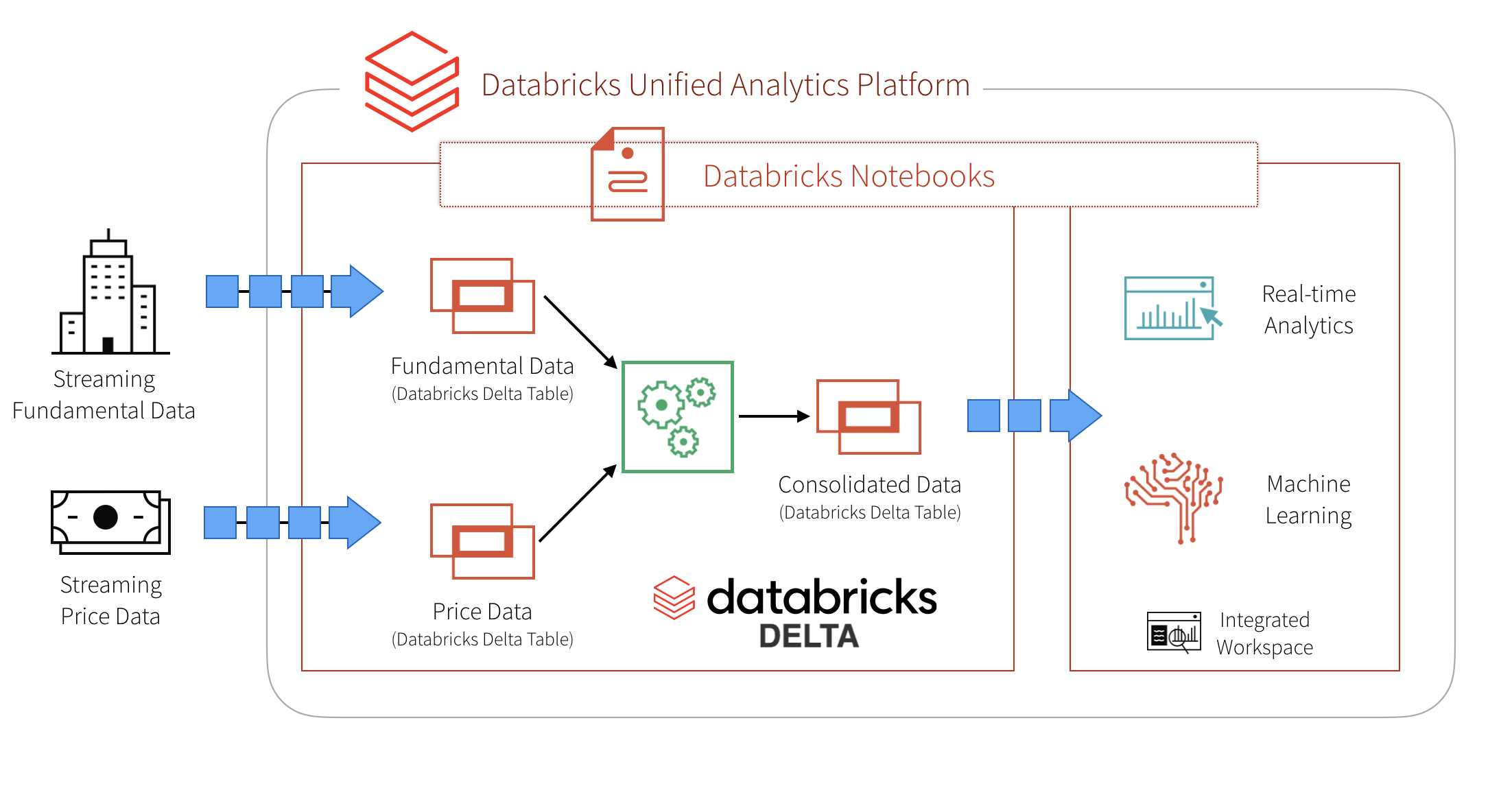

Data Pipelines: Delta Tables are ideal for building data pipelines that require ingesting, processing, and managing large volumes of data in real-time or batch processing scenarios. Delta Tables provide transactional capabilities, schema evolution, and time travel, making them suitable for building reliable and scalable data pipelines.

Data Lake Management: Delta Tables can be used to manage data lakes, which are large repositories of data stored in a distributed computing environment. Delta Tables provide robust data management capabilities, including ACID transactions and time travel, making them suitable for managing large datasets in data lake architectures.

Data Warehousing: Delta Tables can be used as a scalable and performant data storage solution for data warehousing use cases. Delta Tables provide schema evolution, time travel, and optimized data storage, making them suitable for storing and processing large volumes of data in a data warehousing environment.

Illustrations

Example 1: Creating a Delta Table

You can create a Delta Table in PySpark by reading data from a source file, such as a CSV or a Parquet file, and specifying the Delta format when writing it out. Here's an example of how you can create a Delta Table from a CSV file:

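```python
from pyspark.sql import SparkSession

# Create a Spark session with Delta Lake enabled
# (requires the delta-spark package to be available to Spark)
spark = (
    SparkSession.builder.appName("DeltaTableExample")
    .config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension")
    .config("spark.sql.catalog.spark_catalog", "org.apache.spark.sql.delta.catalog.DeltaCatalog")
    .getOrCreate()
)

# Read data from a CSV file, using the first row as column names
df = spark.read.csv("data.csv", header=True, inferSchema=True)

# Write data to a Delta Table at the path "delta_table"
df.write.format("delta").save("delta_table")
```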

In this example, we read data from a CSV file and then write it to a Delta Table using the delta format.

Example 2: Querying a Delta Table

Once you have a Delta Table, you can query it with the PySpark DataFrame API, whose methods mirror familiar SQL operations. Here's an example of how you can query a Delta Table:

```python
from pyspark.sql import SparkSession

# Create a Spark session (assumes Delta Lake is already configured for Spark)
spark = SparkSession.builder.appName("DeltaTableQueryExample").getOrCreate()

# Read data from a Delta Table
df = spark.read.format("delta").load("delta_table")

# Select two columns and keep only rows where age is at least 18
result = df.select("name", "age").filter("age >= 18")

# Display the result (show() prints the rows and returns None)
result.show()
```

In this example, we read data from a Delta Table using the format method with “delta” as the format, and then perform a query using the select and filter methods to retrieve specific columns and apply a filter condition.

Example 3: Updating a Delta Table

One of the powerful features of Delta Tables is the ability to perform updates in a transactionally consistent manner. Here’s an example of how you can update a Delta Table:

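```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import col, when

# Create a Spark session (assumes Delta Lake is already configured for Spark)
spark = SparkSession.builder.appName("DeltaTableUpdateExample").getOrCreate()

# Read data from a Delta Table
df = spark.read.format("delta").load("delta_table")

# Increment age for rows where age >= 18 and leave all other rows unchanged
updated_df = df.withColumn(
    "age", when(col("age") >= 18, col("age") + 1).otherwise(col("age"))
)

# Write the full updated dataset back to the Delta Table as a new version
updated_df.write.format("delta").mode("overwrite").save("delta_table")
```

In this example, we read data from a Delta Table, increment the “age” column for rows where the age is at least 18 while keeping every other row unchanged, and then write the result back to the Delta Table using the mode option set to “overwrite”. Note that filtering the DataFrame before overwriting would drop the rows that do not match the filter, so the condition is applied inside withColumn instead. Delta Lake's Python API also offers a DeltaTable.update method that performs such updates in place and rewrites only the affected files.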

Example 4: Time Travel in Delta Table

Delta Tables provide a powerful time travel feature that allows you to access previous versions of your data. Here’s an example of how you can use time travel in a Delta Table:

```python
from pyspark.sql import SparkSession

# Create a Spark session (assumes Delta Lake is already configured for Spark)
spark = SparkSession.builder.appName("DeltaTableTimeTravelExample").getOrCreate()

# Read data from a Delta Table at a specific version (0 is the first version)
df = spark.read.format("delta").option("versionAsOf", 0).load("delta_table")

# Compute the average salary per age on the previous version of the data
result = df.groupBy("age").agg({"salary": "avg"})

# Display the result (show() prints the rows and returns None)
result.show()
```

In this example, we use the option method with “versionAsOf” to specify the version of the Delta Table that we want to access, and then perform analysis on that previous version of the data.
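Besides “versionAsOf”, the Delta reader also accepts a “timestampAsOf” option, which selects the table as it was at a given time. The plain-Python sketch below shows how a timestamp could be mapped to a version, assuming the rule is "the latest commit made at or before the requested time". The function `version_as_of` and the commit times are hypothetical, and this is a toy model rather than Delta Lake's own lookup.

```python
from bisect import bisect_right
from datetime import datetime


def version_as_of(commit_times, timestamp):
    """Return the index of the last commit made at or before timestamp."""
    index = bisect_right(commit_times, timestamp) - 1
    if index < 0:
        raise ValueError("timestamp is earlier than the first commit")
    return index


# Hypothetical commit times for versions 0, 1, and 2 (must be sorted).
commit_times = [datetime(2024, 1, 1), datetime(2024, 1, 5), datetime(2024, 1, 9)]

resolved = version_as_of(commit_times, datetime(2024, 1, 6))
print(resolved)  # 1
```

A request for January 6 resolves to version 1 because version 2 was not committed until January 9.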
