Managing Microservices with a Service Mesh in Kubernetes

Evolution of Cloud-native applications

With the advent of microservice-based architecture, breaking massive monoliths into microservices has become feasible and flexible for developers. This has increased productivity and delivery speed and improved communication within teams. But every enhancement brings new challenges. Cloud-native applications are mostly architected as a constellation of distributed microservices running in containers. Because a large system contains so many of these services, load balancing, security and monitoring must now be implemented for each one. The services may be spread across multiple data centers, servers or continents, which makes them highly network dependent. Most organizations adopting a microservice architecture do not fully understand this impact until they are well on their way to microservice sprawl.

A service mesh manages network traffic between services by controlling traffic routing rules and dynamically directing packets between services.

What exactly is a service mesh?

Service meshes have been around since before Kubernetes clusters, but they became immensely popular with the rise of microservices built on Kubernetes. Since microservices rely heavily on the network, a service mesh manages the network traffic between services. It does so far more gracefully and scalably than the alternative: a lot of manual, error-prone work and an operational burden that might not be sustainable in the long run.

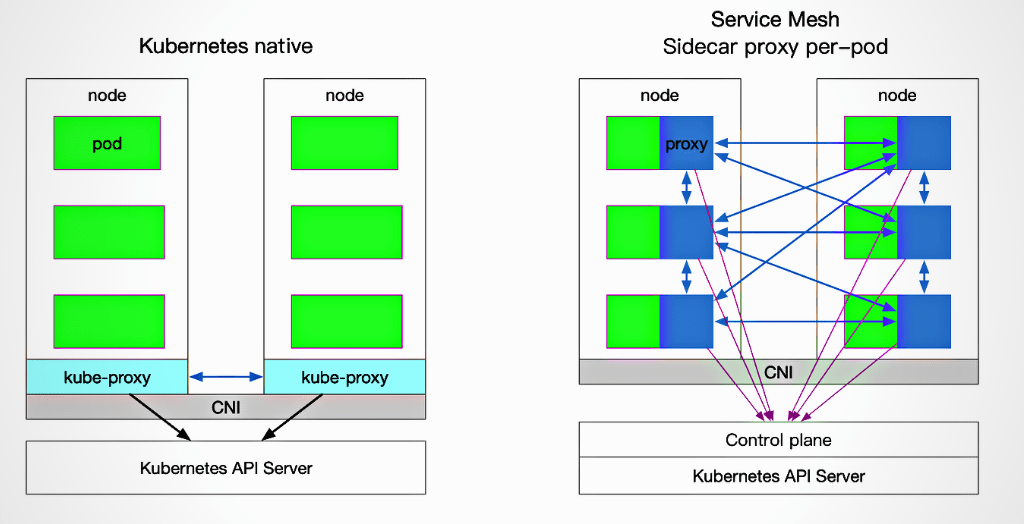

Kubernetes vs Service Mesh

Kubernetes comes into the picture when we essentially want to manage the lifecycle of an application through declarative configuration and deployments, with little control over how services talk to each other. A service mesh is needed when we want to connect, control, observe and protect microservices, that is, to provide security management, observability and inter-application traffic routing. For example, once a stable application is deployed, how do we route traffic between services? How do we send a specific share of traffic to a particular service? How do we route traffic that is a mix of HTTP, TCP, gRPC, and database protocols?
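
To make the question of routing a specific share of traffic concrete, here is a minimal Python sketch of weight-based routing, the idea behind sending, say, 10% of calls to a new version of a service. It is a simulation only and not the API of any mesh: the service names `reviews-v1` and `reviews-v2`, the 90/10 split and the helper functions are all assumptions made for illustration.

```python
import random
from collections import Counter


def pick_backend(weights, rng):
    """Pick one backend name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights, requests, seed=42):
    """Route `requests` simulated calls and count where each one lands."""
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    return Counter(pick_backend(weights, rng) for _ in range(requests))


# Hypothetical split: 90% of calls stay on v1, 10% go to the new v2.
split = {"reviews-v1": 90, "reviews-v2": 10}
counts = simulate(split, requests=10_000)
print(counts)
```

In a real mesh the weights would live in routing configuration and the sidecar proxies would apply them, so the calling service never needs to know that two versions exist.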

Kubernetes was created with a cloud-native mindset. That same mindset brought microservice architecture into play, and with it the need to keep meeting SLAs as the number of services grew. Service meshes were created to take over the time-consuming tasks of service discovery, monitoring, and distributed tracing, among others. A service mesh pushes the functionality that every microservice needs down to the infrastructure layer, letting developers focus on business logic and speeding up service delivery, in line with the cloud-native philosophy.

A service mesh is a dedicated infrastructure layer for communication between services. It is responsible for delivering requests through the complicated topology of services that make up a modern, cloud-native app. Each service in the app has its own proxy, and together these proxies form the service mesh. The proxies handle all requests to and from each service. As indicated in the diagram, these proxies are also known as sidecars. Service meshes are intended to address the difficulties developers face when communicating with remote endpoints. A service mesh brings connectivity, security and monitoring to your microservices:

- Connectivity: A service mesh enables service discovery and communication between services. It provides routing to control the flow of traffic and API calls between services and endpoints. This in turn enables deployment strategies such as blue/green, canary or rolling upgrades.

- Security: A service mesh allows secure communication between services. It can also enforce policies to allow or deny communication. For example, you can configure a policy to prevent a service in the development environment from accessing production services.

- Monitoring: A service mesh often integrates with monitoring and tracing tools such as Prometheus and Jaeger. Working alongside Kubernetes, these allow discovery and visualisation of dependencies between services, traffic flow, API latencies, and traces.
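
The three responsibilities above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any real mesh proxy is implemented: the `Sidecar` class, the in-memory registry, the environment labels and the service names are all hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Sidecar:
    """Toy proxy that sits in front of one service (all names are hypothetical)."""
    service: str
    environment: str
    registry: dict                                 # service name -> (environment, handler)
    denied: set = field(default_factory=set)       # forbidden (source env, target env) pairs
    latencies: list = field(default_factory=list)  # (target, seconds) measurements

    def call(self, target, payload):
        # Connectivity: look the target up by name instead of by address.
        target_env, handler = self.registry[target]
        # Security: refuse calls that cross a forbidden environment boundary.
        if (self.environment, target_env) in self.denied:
            raise PermissionError(f"{self.service} may not call {target}")
        # Monitoring: time the call and keep the measurement.
        start = time.perf_counter()
        try:
            return handler(payload)
        finally:
            self.latencies.append((target, time.perf_counter() - start))


registry = {
    "billing-prod": ("prod", lambda p: f"billed {p}"),
    "billing-dev": ("dev", lambda p: f"test-billed {p}"),
}
dev_proxy = Sidecar("cart-dev", "dev", registry, denied={("dev", "prod")})

print(dev_proxy.call("billing-dev", "order-1"))   # allowed: same environment
try:
    dev_proxy.call("billing-prod", "order-1")     # blocked by the policy
except PermissionError as err:
    print("denied:", err)
```

The point of the sketch is that the calling code contains no discovery, policy or timing logic of its own; all three live in the proxy.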

Some Common Service Mesh Options

There are many contenders in the market, but the most popular are Istio, Linkerd and Consul Connect. All of these service mesh solutions are open source, and adopting any one of them can help your DevOps teams succeed as they build more and more microservices.

Consul Connect

Consul is a service management framework that became a complete service mesh with the addition of Connect in version 1.2, which added secure service-to-service communication on top of its existing service discovery. Consul is part of HashiCorp’s suite of infrastructure management products. It integrates with HashiCorp’s Nomad scheduler and supports a variety of other data centre and container management platforms, including Kubernetes.

Consul Connect runs an agent on every node as a DaemonSet. The agent communicates with the Envoy sidecar proxies, which handle routing and traffic forwarding.

Istio

Istio is a Kubernetes-native solution that was originally developed by Google, IBM, and Lyft, and is now backed by a significant number of prominent IT organisations as their preferred service mesh. It is the service mesh that organizations such as Google, IBM, and Microsoft have chosen for their respective Kubernetes cloud services. Istio was among the first to offer advanced features such as deep-dive analytics.

Istio separates its data plane from its control plane. The data plane consists of sidecar proxies that cache information, so they do not have to go back to the control plane for every call. The control plane runs as pods in the Kubernetes cluster, which improves resilience if a single pod in any part of the service mesh fails.
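
The caching idea can be illustrated with a small Python sketch. It is deliberately not Istio’s API: the `ControlPlane` and `SidecarProxy` classes, the `payments` service and its address are hypothetical, and the only thing the sketch shows is that the request path reads a local copy of the configuration.

```python
class ControlPlane:
    """Hypothetical control plane that holds the authoritative routing table."""

    def __init__(self):
        self.routes = {}
        self.lookups = 0          # how often a proxy had to ask us

    def fetch_routes(self):
        self.lookups += 1
        return dict(self.routes)  # hand out a copy of the current table


class SidecarProxy:
    """Hypothetical data-plane proxy that answers from a local cache."""

    def __init__(self, control_plane):
        self.control_plane = control_plane
        self.cache = {}

    def refresh(self):
        # Called when configuration changes, not on every request.
        self.cache = self.control_plane.fetch_routes()

    def resolve(self, service):
        # The request path only touches the local cache.
        return self.cache[service]


control = ControlPlane()
control.routes["payments"] = "10.0.0.12:8080"   # made-up address
proxy = SidecarProxy(control)
proxy.refresh()

for _ in range(1000):
    proxy.resolve("payments")

print(control.lookups)  # 1: a thousand calls, one trip to the control plane
```

Because the proxy keeps its own copy, it can also keep resolving services for a while if the control plane is briefly unavailable.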

Linkerd

Linkerd is the second most popular service mesh on Kubernetes. Its architecture closely resembles Istio’s, but since its rewrite in v2 it has focused on simplicity rather than flexibility. Combined with the fact that v2 is a Kubernetes-only solution, this results in fewer moving parts and lower overall complexity.

Although Linkerd v1.x is still supported and runs on more container platforms than just Kubernetes, new features (such as blue/green deployments) mostly land in v2. Linkerd is also a project of the Cloud Native Computing Foundation (CNCF), the organization that also hosts Kubernetes.

Epilogue

It’s worth noting that adding a service mesh during the transition from monolithic applications to microservices, or from VMs to Kubernetes-based apps, is relatively undisruptive. Because most meshes employ the sidecar model, most services are unaware that they are running in a mesh. However, switching from one service mesh to another is difficult, especially if you use the mesh as a scalable solution for all of your services.

Istio is quickly becoming the de facto standard for Kubernetes service meshes. It is the most advanced, but also the most difficult to implement and master. From an operations standpoint, a service mesh benefits any form of microservices architecture because it lets you control traffic, security, permissions, and observability.

Service Mesh use cases

Starting with the use cases below lets you begin standardising on a service mesh in your system design, laying the foundation and crucial components for future large-scale operations. These are some common areas where service meshes fit in.

- Adding service-level visibility, tracing, and monitoring capabilities to distributed services to improve observability. Some of a service mesh’s primary features significantly improve visibility and your ability to troubleshoot and mitigate incidents. For example, if one of the architecture’s services becomes a bottleneck, the typical response is to retry requests, but retries can make the overload worse and lead to timeouts. With a service mesh, you can quickly break the circuit to failing services, take non-working replicas out of rotation and keep the API responsive.

- With its traffic control capabilities, a service mesh lets you use blue/green or canary deployments to reliably roll out application upgrades without disrupting service. In a canary rollout, you start by exposing the new version to a small group of users, test it, and then roll it out to all instances in production.

- With the ability to inject delays and faults, a service mesh also makes chaos testing (in the style of Chaos Monkey) possible under production conditions, which improves the robustness of deployments.

- If you are in the midst of migrating existing applications to Kubernetes-based microservices, you can use a service mesh as a ‘bridge’ while you decompose them. You can register your existing applications as ‘services’ in the Istio service catalogue and then gradually migrate them to Kubernetes without changing the mechanism of communication between them, much as a DNS name keeps working when the host behind it changes. This scenario is comparable to how Service Directory is used.

- If you believe in the idea of a service mesh and want to begin rolling it out, but don’t yet have Kubernetes applications up and running, your operations team can start learning the ropes by deploying a mesh merely to track API consumption.
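
The circuit-breaking behaviour mentioned in the first use case can be sketched as follows. This is a minimal, single-threaded illustration under stated assumptions, not the algorithm of any particular mesh: the thresholds, the `CircuitBreaker` class and the `flaky` function are hypothetical.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, failing fast")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = func(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()   # trip, or re-trip after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def flaky(_request):
    raise ConnectionError("replica down")


breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
for attempt in range(4):
    try:
        breaker.call(flaky, "GET /orders")
    except CircuitOpenError as err:
        print(attempt, "rejected:", err)
    except ConnectionError as err:
        print(attempt, "failed:", err)
```

The first two attempts reach the failing service; the last two are rejected immediately, which is what keeps callers from piling retries onto a replica that is already struggling.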

In its most advanced form, a service mesh becomes the dashboard for your microservices architecture. It’s where you’ll go to troubleshoot problems, enforce traffic restrictions, set rate limits, and try out new code. It’s your central location for tracing, monitoring, and controlling the interactions between all of your services, including how they’re connected, how they’re performing, and how they’re secured.
