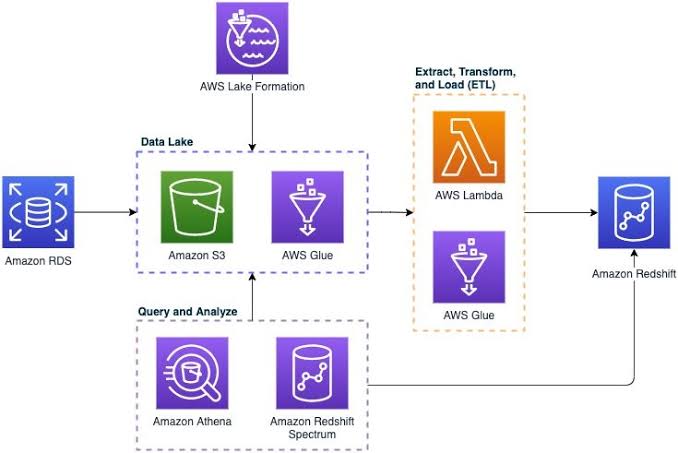

Loading data from datalake to Redshift Warehouse

Data Lake:

A data lake is a centralized repository designed to store, process, and secure large amounts of structured, semi structured, and unstructured data.

NOTE: Assuming already have S3 bucket created with IAM roles.

For this we need to create the AWS Redshift cluster, the steps are as follows:

- Create AWS Redshift Cluster:

Go to AWS Redshift -> Clusters -> Create cluster

Note: We can change node type dc2.large as per our requirements.

Note: Admin user name & password must be remembered.

Note: IAM role have complete access to S3,Glue and redshift.

• Creating 3rd party Redshift connection on locally:

Once it is saved. Create a table locally with the schema as we uploaded data in S3.

A table is created.

• Creating AWS Glue connection with Local & AWS Redshift Cluster:

Inline policies for s3:

{

“Version”: “2012-10-17”,

“Statement”: [

{

“Effect”: “Allow”,

“Action”: [

“s3:GetObject”,

“s3:PutObject”,

“s3:DeleteObject”

],

“Resource”: [

“arn:aws:s3:::redshift60523/*”

]

},

{

“Effect”: “Allow”,

“Action”: [

“s3:GetObject”

],

“Resource”: [

“arn:aws:s3:::redshift60523”

]

}

]

}

Note: IAM role has complete access to S3, Glue, and redshift.

Once the Test Connection is completed, move to the next step to create the ETL Job.

For this we have already put converted data from On-premises Data Sources (RDBMS or flat files) into S3 as CSV file

Note: CSV file should be in clear format along with the header.

Steps

- Open AWS Glue :

Go to AWS Glue -> ETL jobs -> Visual with a blank canvas -> create - Create a Source to bring data from S3 to Glue:

Go to Visual -> Source -> Amazon S3 -> select S3 - Create a target:

Go to Visual -> Target -> Redshift -> select Redshift

Note: Used the above connection which we created above.

Here the table name will appear which we created locally.

Once it save successfully, then run it.

Once it will run successfully, the success message will display. After that we can check in local system that data is loaded or not.

Data is loaded to Redshift for further analysis.

Additional resources:

https://docs.aws.amazon.com/redshift/

https://aws.amazon.com/redshift/