Advancements in NLP: Shaping Future Language Understanding

Introduction to NLP

Natural Language Processing (NLP) stands at the forefront of artificial intelligence as a dynamic, interdisciplinary field dedicated to unraveling the intricacies of human language so that computers and humans can communicate effectively. At its core, NLP aims to enable machines not only to comprehend the rich tapestry of human language but also to interpret and generate it in ways that mirror the complexity of human communication. Rooted in a fusion of linguistics, computer science, and cognitive psychology, NLP analyzes syntax, semantics, and pragmatics: the grammar, meaning, and contextual nuances inherent in language.

This field relies on advanced machine learning techniques, particularly deep learning, to allow computers to learn and adapt to the diverse patterns and subtleties present in linguistic data. NLP manifests itself in various applications, from developing conversational agents and chatbots engaging in natural dialogue to performing sentiment analysis, language translation, and information extraction. By pushing the boundaries of language understanding, NLP transforms how computers interact with and comprehend natural language, paving the way for more intuitive and seamless human-machine communication.

NLP bridges the communication gap between humans and machines in a profound and transformative way. In this context, NLP serves as the technological conduit that lets computers engage with human language beyond the traditional constraints of coded inputs. By decoding the complexities of human expression, NLP empowers machines to comprehend, interpret, and respond to natural language, fostering more intuitive and seamless interaction. This capability holds immense value in democratizing the use of sophisticated systems, making technology accessible to individuals without programming expertise.

NLP plays a particularly pivotal role in developing conversational agents, chatbots, and virtual assistants, enabling users to interact with machines through everyday language. This enhances the user experience and broadens the utility of technology across diverse demographics. As the digital landscape evolves, NLP’s significance extends to areas such as sentiment analysis, language translation, and information extraction, influencing customer service, market research, and cross-cultural communication. Essentially, NLP stands as a bridge, translating the nuances of human expression into a language that machines can understand and respond to, thereby redefining the dynamics of human-machine communication.

Fundamental Concepts in NLP

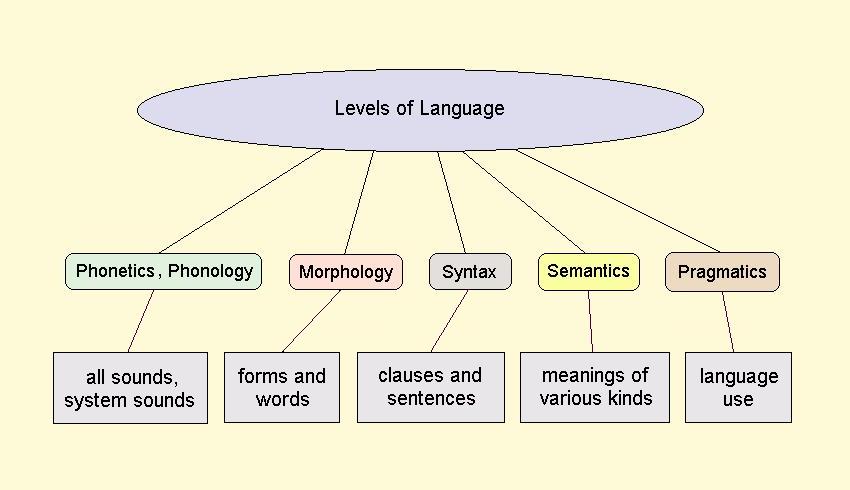

Let’s explore the basics of linguistic analysis, computational linguistics, and their foundational role in Natural Language Processing (NLP). We’ll also delve into key components like syntax, semantics, and pragmatics in the context of NLP.

Linguistic Analysis

Linguistic analysis involves systematically studying language to understand its structure, meaning, and usage. In NLP, it forms the basis for developing algorithms and models that empower machines to process and generate human language. Here are the fundamental aspects:

- Phonetics and Phonology

- Phonetics

- Phonetics is the study of the physical aspects of speech sounds. It encompasses articulation (how the vocal tract physically produces sounds), acoustics (the properties of the resulting sound waves), and auditory perception (how the human ear perceives these sounds).

- Example: In phonetics, researchers analyze how the sound “p” is produced by the lips coming together, the properties of the sound wave it generates, and how we perceive it when spoken.


- Phonology

- Phonology, on the other hand, deals with the abstract and cognitive aspects of speech sounds. It focuses on phonemes, which are distinctive sound units in a language, and the phonological rules that govern how these sounds are used and combined within a particular language.

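- Example: In English phonology, /p/ and /k/ are distinct phonemes because swapping one for the other changes the word (“pat” vs. “cat”). Phonological rules also govern how a phoneme is realized: the /p/ at the start of “pat” is aspirated, while the /p/ in “spat” is not.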

- Syntax

- Syntax entails studying sentence structure and grammar, which involves comprehending how words are organized to construct grammatically correct sentences.

- In NLP, syntax plays a pivotal role in tasks such as parsing (analyzing sentence structure) and discerning word relationships within sentences, including subjects, verbs, and objects.

- Example: In English syntax, we learn that a basic sentence structure often follows a subject-verb-object pattern, such as “The cat (subject) chased (verb) the mouse (object).”

- Semantics

- Semantics delves into the meaning of words, phrases, and sentences, addressing how linguistic elements convey meaning.

- In NLP, understanding semantics entails mapping linguistic elements to their corresponding concepts or representations, facilitating the interpretation of sentence meaning. It is indispensable for tasks such as machine translation and sentiment analysis.

- Example: In semantics, we examine how the word “dog” refers to the concept of a domesticated four-legged animal often kept as a pet.

- Pragmatics

- Pragmatics goes beyond the literal meaning of words and investigates how context, speaker intentions, and social factors shape language interpretation.

- In NLP, pragmatics aids in deciphering implied meanings, indirect speech acts, and context-dependent language use, which is critical for grasping sarcasm, politeness, and humor in text.

- Example: In pragmatics, we consider how the statement “Can you pass the salt?” may imply a polite request even though it is in the form of a question.

Computational Linguistics

Computational linguistics operates at the intersection of linguistics and computer science. It concentrates on crafting computational models and algorithms to automate linguistic analysis and empower machines to effectively process and generate human language. For example, think about voice assistants like Siri or Alexa. These devices use computational linguistics to understand your spoken words and generate responses. When you ask, “What’s the weather like today?” the device uses algorithms to process your question, extract the meaning, and provide a relevant answer based on the data it has access to. This field is all about teaching computers to speak our language, and it’s what makes many of today’s language technologies possible.

Here are the essential components of computational linguistics in the context of NLP:

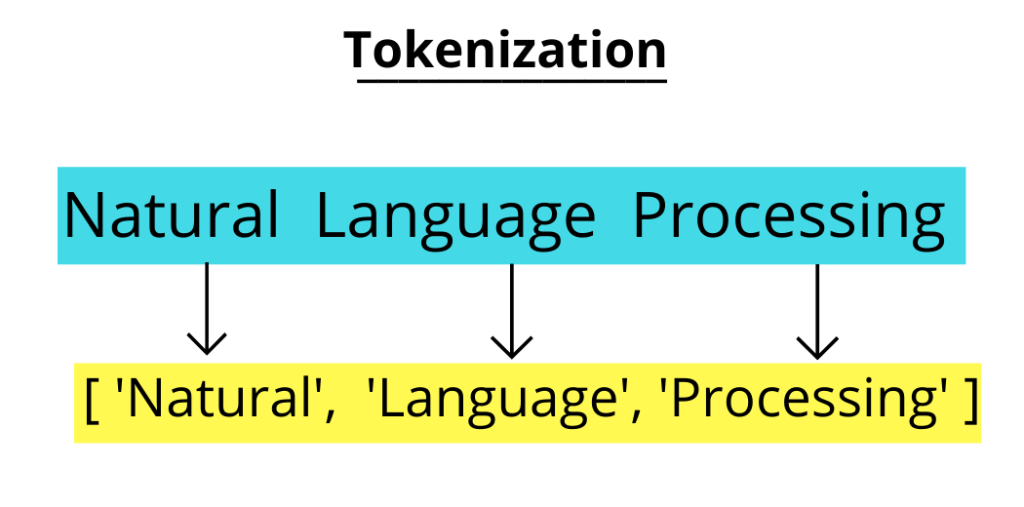

- Tokenization

- Tokenization involves breaking text into smaller units, like words or subword tokens, serving as the preliminary step in NLP to prepare natural language data for analysis.

- Tokenization is like breaking a sentence into individual words. For example, the sentence “I love pizza” would be tokenized into three tokens: “I,” “love,” and “pizza.”

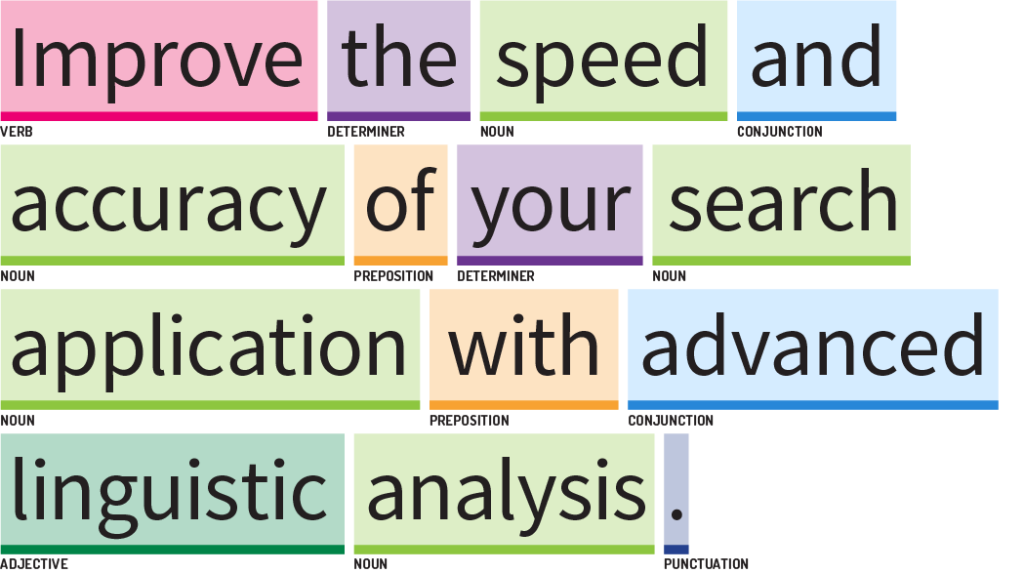

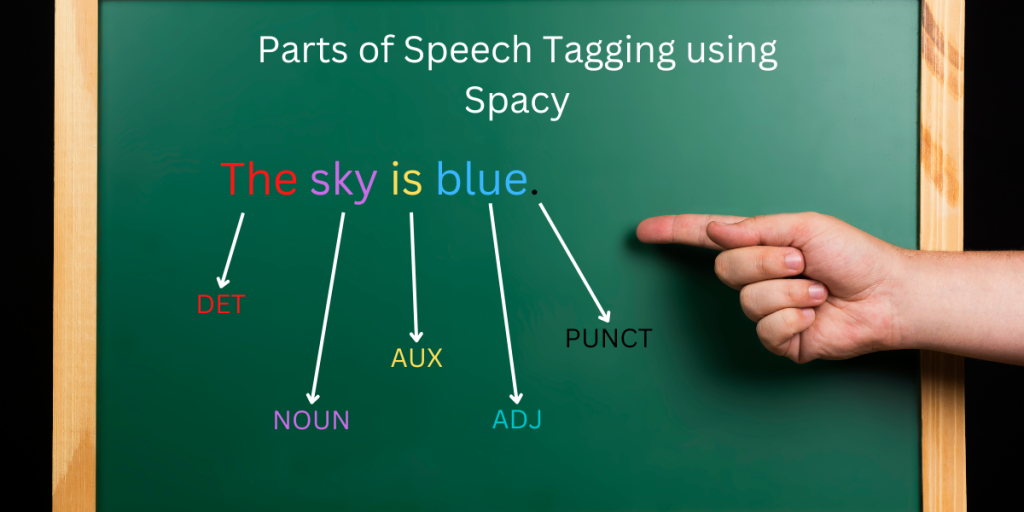
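To make tokenization concrete, here is a minimal sketch of a rule-based word tokenizer built on Python's standard `re` module. The regular expression is an illustrative assumption, not a standard: it keeps words (including contractions typed with a straight apostrophe, such as "don't") together and splits punctuation into separate tokens. Real systems often use library tokenizers or subword schemes such as byte-pair encoding instead.

```python
import re

# Illustrative pattern (an assumption, not a standard): a word is a run of
# letters/digits/underscores, optionally joined by apostrophes ("don't");
# any other non-space character becomes its own punctuation token.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)*|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    print(tokenize("I love pizza"))         # ['I', 'love', 'pizza']
    print(tokenize("I don't love pizza!"))  # ['I', "don't", 'love', 'pizza', '!']
```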

- Part-of-Speech (POS) Tagging

- POS tagging assigns grammatical labels (e.g., noun, verb, adjective) to each word in a sentence. This supports syntactic analysis and helps resolve ambiguity, such as whether “book” is used as a noun or a verb.

- Part-of-speech tagging is when we label each word in a sentence with its grammatical role, like a noun or a verb. For instance, in the sentence “The cat sleeps,” “cat” is tagged as a noun, and “sleeps” is tagged as a verb.

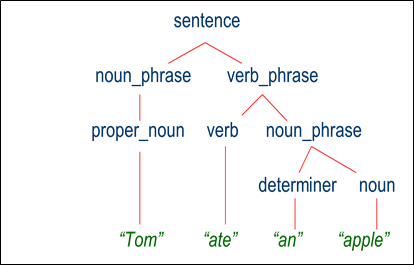

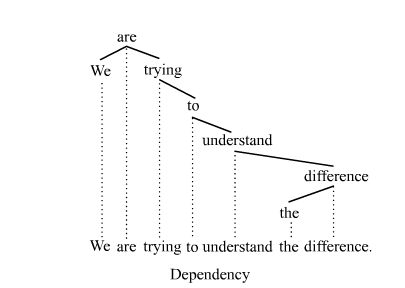
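A toy illustration of POS tagging follows: a lookup-table tagger with a crude suffix-based fallback for unknown words. The lexicon, the suffix rules, and the `pos_tag` function are hypothetical; practical taggers are trained statistical or neural models that also use the surrounding context, which this sketch ignores.

```python
# Hypothetical toy lexicon mapping lowercase words to Universal POS tags.
LEXICON = {
    "the": "DET", "a": "DET",
    "cat": "NOUN", "dog": "NOUN", "mouse": "NOUN",
    "sleeps": "VERB", "chased": "VERB",
}


def guess_tag(word: str) -> str:
    """Crude suffix rules for words missing from the lexicon."""
    lower = word.lower()
    if lower.endswith("ly"):
        return "ADV"
    if lower.endswith(("ed", "ing")):
        return "VERB"
    return "NOUN"  # default guess for unknown words


def pos_tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Tag each token independently (no use of surrounding context)."""
    return [(tok, LEXICON.get(tok.lower()) or guess_tag(tok)) for tok in tokens]


print(pos_tag("The cat sleeps".split()))
# [('The', 'DET'), ('cat', 'NOUN'), ('sleeps', 'VERB')]
print(pos_tag("The dog quickly jumped".split()))
# [('The', 'DET'), ('dog', 'NOUN'), ('quickly', 'ADV'), ('jumped', 'VERB')]
```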

- Parsing

- Parsing encompasses the analysis of the grammatical structure of sentences to generate parse trees or dependency graphs. This process is vital for syntactic analysis and comprehending sentence structure.

- Parsing is about understanding how words in a sentence relate to each other in terms of grammar. It helps create a tree-like structure to show sentence structure. For example, in the sentence “The cat chased the mouse,” parsing would create a structure to show that “cat” is the subject and “chased” is the action.

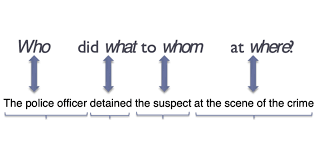
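As a sketch of how a parser can build such a tree, the code below implements a recursive-descent parser for a tiny, hypothetical grammar (S → NP VP, NP → Det N, VP → V NP | V) and lexicon. It produces a constituency tree as nested tuples in which the first NP under S is the subject. It does not produce dependency graphs, and real parsers handle far larger grammars or learn structure from annotated treebanks.

```python
# Hypothetical toy grammar:  S -> NP VP,  NP -> Det N,  VP -> V NP | V
LEXICON = {
    "the": "Det", "a": "Det",
    "cat": "N", "mouse": "N",
    "chased": "V", "sleeps": "V",
}


class ParseError(Exception):
    """Raised when the words do not fit the toy grammar."""


class Parser:
    def __init__(self, words: list[str]):
        self.words = words
        self.pos = 0

    def peek(self):
        """Lexical category of the next word, or None at the end."""
        if self.pos < len(self.words):
            return LEXICON.get(self.words[self.pos].lower())
        return None

    def expect(self, category: str):
        """Consume the next word if it has the expected category."""
        if self.peek() != category:
            raise ParseError(f"expected {category} at word {self.pos}")
        word = self.words[self.pos]
        self.pos += 1
        return (category, word)

    def noun_phrase(self):
        det = self.expect("Det")
        noun = self.expect("N")
        return ("NP", det, noun)

    def verb_phrase(self):
        verb = self.expect("V")
        if self.peek() == "Det":  # VP -> V NP
            return ("VP", verb, self.noun_phrase())
        return ("VP", verb)       # VP -> V

    def sentence(self):
        tree = ("S", self.noun_phrase(), self.verb_phrase())
        if self.pos != len(self.words):
            raise ParseError("unexpected words after the sentence")
        return tree


def parse(sentence: str):
    return Parser(sentence.split()).sentence()


print(parse("The cat chased the mouse"))
# Output (wrapped for readability):
# ('S', ('NP', ('Det', 'The'), ('N', 'cat')),
#       ('VP', ('V', 'chased'), ('NP', ('Det', 'the'), ('N', 'mouse'))))
```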

- Semantic Role Labeling

- Semantic role labeling allocates roles (e.g., agent, patient) to words in a sentence, signifying their semantic relationships and aiding in understanding sentence meaning.

- In the sentence “She gave him a book,” semantic role labeling would show that “She” is the giver, “him” is the receiver, and “book” is the thing being given.

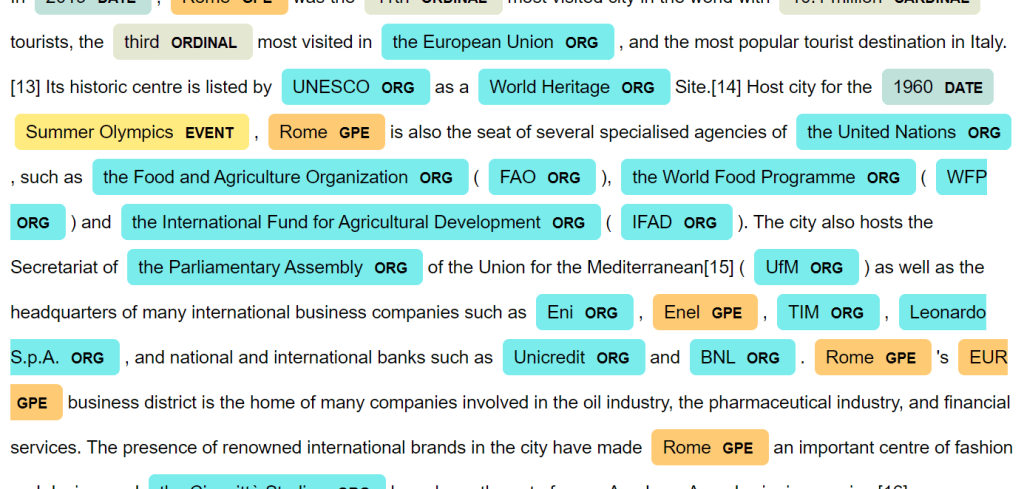

- Named Entity Recognition (NER)

- NER identifies and classifies entities like names of people, organizations, locations, and dates in text, which is pivotal for information extraction.

- For instance, in “John works in New York,” it would recognize “John” as a person and “New York” as a location.

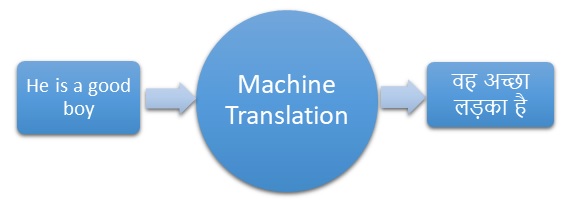
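One simple baseline for NER is gazetteer lookup: matching text against a list of known entity names. The sketch below assumes a small, hypothetical gazetteer and prefers the longest match at each position. It cannot recognize names missing from the list, which is why practical NER systems use trained sequence models instead.

```python
# Hypothetical gazetteer: entity names (as token tuples) mapped to entity types.
GAZETTEER = {
    ("John",): "PERSON",
    ("New", "York"): "LOCATION",
    ("New", "York", "Times"): "ORGANIZATION",
}
MAX_SPAN = max(len(name) for name in GAZETTEER)


def recognize_entities(tokens: list[str]) -> list[tuple[str, str]]:
    """Longest-match gazetteer lookup over a token list."""
    entities = []
    i = 0
    while i < len(tokens):
        # Try the longest candidate span first, so "New York Times" beats "New York".
        for length in range(min(MAX_SPAN, len(tokens) - i), 0, -1):
            span = tuple(tokens[i:i + length])
            if span in GAZETTEER:
                entities.append((" ".join(span), GAZETTEER[span]))
                i += length
                break
        else:  # no entity starts at this token
            i += 1
    return entities


print(recognize_entities("John works in New York".split()))
# [('John', 'PERSON'), ('New York', 'LOCATION')]
print(recognize_entities("She reads the New York Times".split()))
# [('New York Times', 'ORGANIZATION')]
```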

- Machine Translation

- Machine translation employs computational models to translate text from one language to another, considering both syntax and semantics.

- Machine translation helps computers translate text from one language to another while preserving both grammar and meaning. For example, it can translate the French “Bonjour” into the English “Hello.”

- Question Answering

- Question answering systems utilize computational models to extract answers from text based on user-generated questions, encompassing both syntactic and semantic analysis.

- If you ask, “Who wrote Harry Potter?” the system would analyze the text and find “J.K. Rowling” as the answer.

- Sentiment Analysis

- Sentiment analysis determines the emotional tone or sentiment conveyed in a text, relying on semantics and pragmatics.

- Sentiment analysis figures out whether a text expresses positive or negative feelings. For example, it can determine that “I love this movie!” is positive, while “This is terrible” is negative.
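A lexicon-based approach is one of the simplest ways to implement sentiment analysis: sum the polarity scores of known words, flipping a word's score when it directly follows a negator such as "not". The word scores and negator list below are hypothetical; real systems use large curated lexicons or trained classifiers, which handle sarcasm and context far better than this sketch.

```python
import re

# Hypothetical polarity scores; real lexicons contain thousands of entries.
POLARITY = {
    "love": 2, "great": 2, "delicious": 2, "good": 1,
    "bad": -1, "terrible": -2, "awful": -2, "hate": -2,
}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by summing word scores."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        value = POLARITY.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            value = -value  # "not good" counts as negative
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this movie!"))     # positive
print(sentiment("This is terrible"))       # negative
print(sentiment("The food was not good"))  # negative
```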


Challenges

- Ambiguity

- Language is inherently ambiguous: words and phrases often have multiple meanings. Resolving this ambiguity is a significant challenge in NLP.

- Ambiguity in language means words can have more than one meaning. For example, “bank” could mean a riverbank or a place to keep money. This makes NLP tricky.

- Context Dependency

- Language meaning is highly context-dependent, necessitating consideration of surrounding words and sentences for accurate interpretation.

- Language depends on context. Words mean different things based on what’s around them. For example, in “She saw the bat,” the meaning of “bat” depends on whether it’s in a sentence about animals or sports.

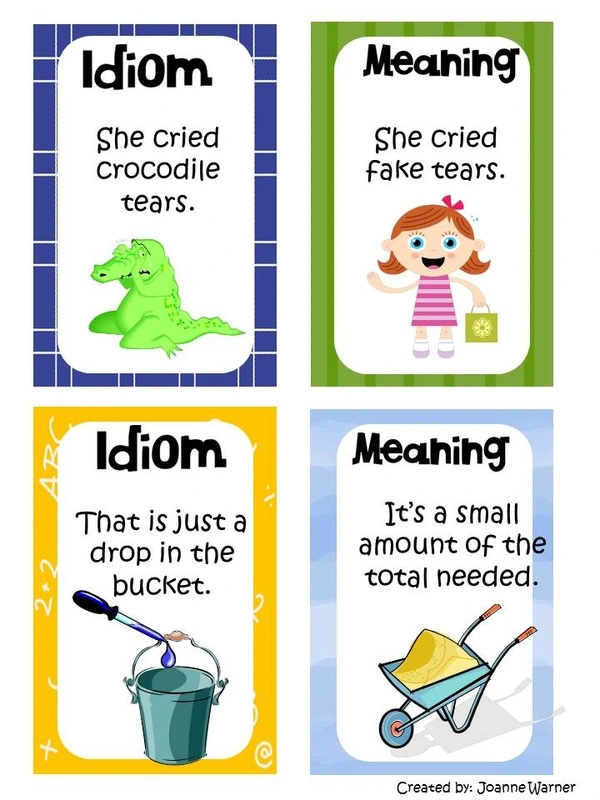
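Ambiguity and context dependency come together in word sense disambiguation. The sketch below implements a simplified version of the Lesk algorithm, which picks the sense whose dictionary definition (gloss) shares the most words with the surrounding sentence. The two glosses for "bank" and the stopword list are hypothetical stand-ins for a real sense inventory such as WordNet.

```python
# Hypothetical sense inventory: two senses of "bank", each with a short gloss.
SENSES = {
    "bank": {
        "financial": "an institution where people deposit and withdraw money and take out loans",
        "river": "the sloping land along the edge of a river or stream where water flows",
    },
}
STOPWORDS = {"a", "an", "and", "at", "i", "in", "of", "on", "or", "the", "to", "we", "where"}


def content_words(text: str) -> set[str]:
    """Lowercase words with punctuation and stopwords removed."""
    return {w.strip(".,!?").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(word: str, sentence: str) -> str:
    """Return the sense whose gloss overlaps most with the sentence (first sense wins ties)."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I deposited money at the bank"))                           # financial
print(simplified_lesk("bank", "We sat on the bank of the river and watched the water"))  # river
```

Because overlap is counted on exact word forms, "deposited" does not match "deposit" here; real implementations usually lemmatize or stem words first.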

- Idioms and Figurative Language

- Understanding idiomatic expressions, metaphors, and figurative language demands nuanced linguistic analysis.

- Idioms and figurative language are sayings that don’t mean exactly what they say. Like “raining cats and dogs” means heavy rain, not actual animals falling from the sky. NLP needs to understand these too.

- Variability

- Human language displays extensive diversity, including different languages, dialects, and writing styles, making NLP tasks intricate and language-specific.

- Language varies a lot. People speak different languages, use different dialects, and write in various styles. NLP has to deal with all these differences, which can be complicated.

Linguistic analysis and computational linguistics provide the theoretical and practical underpinnings for NLP. Key components such as syntax, semantics, and pragmatics enable NLP systems to analyze, interpret, and generate human language, facilitating applications like machine translation, sentiment analysis, chatbots, and more. The challenges in NLP often stem from the complexity and variability of natural language, necessitating advanced linguistic and computational techniques for resolution.

Machine Learning

Machine learning techniques, particularly deep learning, have transformed Natural Language Processing (NLP) by enabling computers to understand, generate, and manipulate human language. In this deep dive, I will explain how machine learning, including deep learning, drives NLP for tasks such as language modeling and understanding.

1. Machine Learning in NLP: Machine learning is a subfield of artificial intelligence that focuses on developing algorithms and models capable of learning patterns and making predictions or decisions based on data. NLP harnesses machine learning to address various language-related tasks. Deep learning, a subset of machine learning, has gained prominence due to its capacity to handle complex and hierarchical data patterns.

In other words, machine learning is like teaching computers to learn from data and make sense of language. It’s a big part of making computers understand and use human language better. Within machine learning, deep learning is especially good at handling complex patterns and information.

2. Language Modeling

- Definition: Language modeling predicts the probability of a sequence of words in a given language. It is crucial for various NLP tasks, including speech recognition, machine translation, and text generation.

- Applications: Language models find application in:

- Auto-completion: Predicting the next word or phrase as a user types, as seen in search engines and mobile keyboards.

- Speech recognition: Converting spoken language into text for voice assistants.

- Machine translation: Translating text between languages by modeling the likelihood of word sequences.

- Text generation: Creating coherent, contextually relevant text, often used in chatbots and content generation.

- Deep Learning Techniques for Language Modeling

- Recurrent Neural Networks (RNNs)

- RNNs are widely employed for language modeling tasks, processing word sequences while maintaining a hidden state that captures context. However, they face challenges like vanishing gradients, limiting their ability to capture long-range dependencies.

- Long Short-Term Memory (LSTM)

- LSTMs, a type of RNN, mitigate the vanishing gradient problem and have improved language modeling by capturing longer-term dependencies.

- Gated Recurrent Units (GRUs)

- GRUs, akin to LSTMs, address the vanishing gradient issue and have been effectively used for language modeling.

- Transformer Models

- Transformers, introduced in the “Attention Is All You Need” paper (2017), represent the state of the art in language modeling. They use self-attention mechanisms to capture contextual information, handling long-range dependencies efficiently. Models like GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) are built on the transformer architecture.

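To see what "predicting the probability of a sequence" means, consider a classical count-based bigram model, a much simpler predecessor of the neural models above. It approximates P(w_1, ..., w_n) as the product of P(w_i | w_{i-1}), estimating each factor from counts in a toy, hypothetical three-sentence corpus. Neural language models replace these counts with learned representations.

```python
from collections import Counter


def pad(sentence: str) -> list[str]:
    """Lowercase, split on whitespace, and add start/end markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram_model(corpus: list[str]) -> tuple[Counter, Counter]:
    """Count how often each word is followed by each other word."""
    context_counts, bigram_counts = Counter(), Counter()
    for sentence in corpus:
        tokens = pad(sentence)
        context_counts.update(tokens[:-1])             # every token that has a successor
        bigram_counts.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    return context_counts, bigram_counts


def sentence_probability(sentence: str, context_counts: Counter, bigram_counts: Counter) -> float:
    """P(sentence) ~= product of P(w_i | w_{i-1}), using maximum-likelihood estimates."""
    tokens = pad(sentence)
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if context_counts[prev] == 0:
            return 0.0  # unseen context word
        prob *= bigram_counts[(prev, word)] / context_counts[prev]
    return prob


def predict_next(prev: str, bigram_counts: Counter):
    """Most frequent word observed after `prev` (None if `prev` was never seen)."""
    followers = {w: c for (p, w), c in bigram_counts.items() if p == prev}
    return max(followers, key=followers.get) if followers else None


corpus = ["I love pizza", "I love pasta", "I hate rain"]  # toy, hypothetical corpus
contexts, bigrams = train_bigram_model(corpus)
print(sentence_probability("I love pizza", contexts, bigrams))  # 1 * 2/3 * 1/2 * 1, about 0.333
print(sentence_probability("I hate pizza", contexts, bigrams))  # 0.0 (unseen pair "hate pizza")
print(predict_next("i", bigrams))                                # love
```

The zero probability for "I hate pizza" illustrates the data-sparsity problem of count-based models; they usually address it with smoothing techniques such as add-one (Laplace) smoothing.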

3. Language Understanding

- Definition: Language understanding in NLP involves extracting meaningful information and knowledge from text, going beyond surface-level analysis to comprehend semantics and context.

- Applications: Language understanding is vital in various NLP tasks, including:

- Text classification: Assigning labels or categories to text documents, e.g., sentiment analysis and spam detection.

- Named Entity Recognition (NER): Identifying and categorizing entities such as names of individuals, organizations, and locations in text.

- Semantic Role Labeling: Assigning roles (e.g., agent, patient) to words in a sentence, indicating their semantic relationships.

- Question answering: Extracting answers from text based on user-generated questions.

- Topic modeling: Discovering topics within a collection of documents.

- Information retrieval: Finding relevant documents or information based on user queries.

- Deep Learning Techniques for Language Understanding

- Convolutional Neural Networks (CNNs): CNNs are effective for text classification tasks, capturing local patterns and features in text data.

- Recurrent Neural Networks (RNNs): RNNs suit sequential tasks like NER and semantic role labeling because they can capture contextual information.

- Transformer Models: Transformers, particularly BERT, have revolutionized language understanding tasks. BERT’s bidirectional pre-training enables it to capture rich context and semantics, making it highly effective across a wide range of NLP tasks.
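The self-attention mechanism behind transformers such as BERT can be sketched in a few lines. The code below computes scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in plain Python. It is simplified under stated assumptions: a single attention head, no learned projection matrices (the inputs serve directly as queries, keys, and values), and no masking. Without a mask, every token attends to tokens on both its left and its right, which is the sense in which BERT-style encoders are bidirectional.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors (lists of floats). Returns the output
    vectors and the attention weights (one row per query).
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        row = softmax(scores)
        weights.append(row)
        # Weighted sum of the value vectors, one output dimension at a time.
        outputs.append([sum(w * v[j] for w, v in zip(row, values))
                        for j in range(len(values[0]))])
    return outputs, weights


# Self-attention: the same vectors act as queries, keys, and values
# (real transformers first multiply them by learned projection matrices).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 2-d embeddings for 3 tokens
out, w = attention(x, x, x)
for row in w:
    print([round(p, 3) for p in row])  # each row of weights sums to 1
```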

4. Transfer Learning and Pre-trained Models

- A notable advancement in NLP is the utilization of transfer learning and pre-trained language models. These models are trained on extensive text data and subsequently fine-tuned for specific NLP tasks. This approach has significantly reduced the need for large labeled datasets and greatly enhanced task performance.

- Pre-trained models like GPT-3, BERT, and RoBERTa serve as the foundation for many NLP applications. Because pre-training exposes them to vast amounts of text, they capture rich knowledge of language that transfers well to new tasks, yielding strong performance across diverse applications.

Machine Learning, especially deep learning, has reshaped NLP by enabling language modeling and understanding. These techniques empower machines to work with language data in a manner closer to human comprehension, driving a wide range of applications, from text completion and translation to sentiment analysis and question answering. Pre-trained models, in particular, have pushed the boundaries of NLP capabilities, and the field continues to evolve rapidly.

Chatbots and Conversational Agents

NLP plays a pivotal role in chatbot and conversational agent development, enabling them to engage in human-like interactions. In this deep dive, we will explore NLP’s role, the challenges, and the advancements in creating natural and contextually aware interactions in chatbots.

In simple words, NLP (Natural Language Processing) is a crucial tool in chatbot and conversational agent development: it lets these virtual assistants hold conversations that feel like talking to a real person. We’ll take a close look at how NLP makes this possible, what difficulties it faces, and the progress being made toward chatbots that understand and respond in a natural, context-aware way.

The Role of NLP in Developing Chatbots

Understanding User Input

- NLP helps chatbots understand the intent behind user messages, analyzing text to recognize whether users are asking questions, making requests, or expressing complaints.

- NLP identifies specific information pieces in user input, such as names, dates, and locations, which are crucial for providing relevant responses.

- NLP allows chatbots to grasp what users are trying to say. For example, if a user types, “What’s the weather like today?” the chatbot uses NLP to understand it’s a weather-related question.
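The sketch below shows, in deliberately simplified form, how a chatbot might turn a message into an intent plus extracted details (often called slots). The keyword sets, the city list, and the `understand` function are hypothetical; production systems usually train intent classifiers and entity recognizers rather than matching keywords.

```python
import re

# Hypothetical keyword sets; production chatbots usually train an intent classifier.
INTENT_KEYWORDS = {
    "get_weather": {"weather", "rain", "sunny", "temperature", "forecast"},
    "find_restaurant": {"restaurant", "restaurants", "eat", "dinner", "lunch"},
}
KNOWN_CITIES = {"paris", "london", "tokyo"}  # hypothetical city list for slot filling
DATE_WORDS = {"today", "tomorrow", "tonight"}


def understand(message: str) -> dict:
    """Return the best-matching intent and any extracted slots."""
    words = re.findall(r"[a-z]+", message.lower())
    scores = {intent: sum(w in keywords for w in words)
              for intent, keywords in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    slots = {}
    for w in words:
        if w in KNOWN_CITIES:
            slots["city"] = w.title()
        elif w in DATE_WORDS:
            slots["date"] = w
    return {"intent": best if scores[best] > 0 else "unknown", "slots": slots}


print(understand("What's the weather like today?"))
# {'intent': 'get_weather', 'slots': {'date': 'today'}}
print(understand("Any good restaurant in Paris tonight?"))
# {'intent': 'find_restaurant', 'slots': {'city': 'Paris', 'date': 'tonight'}}
```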

Generating Responses

- NLP models help chatbots generate human-like responses, using natural language generation (NLG) techniques to construct coherent and contextually appropriate replies.

- Generating Responses: NLP helps chatbots craft responses that sound like a human wrote them. For instance, if you ask a chatbot for a weather update, it uses NLP to generate a natural-sounding reply like, “Today, it’s sunny with a high of 75°F.”

Context Management

- NLP helps chatbots maintain context across conversations, enabling them to remember previous interactions and make conversations feel more natural.

- NLP can resolve pronoun references (e.g., “he,” “she”) by identifying the entities they refer to, enhancing conversation coherence.

- Context Management: NLP lets chatbots remember what you talked about earlier in the conversation. If you mention “it” in a later message, the chatbot uses NLP to figure out that you’re still talking about the weather.

Multi-Turn Conversations

- NLP-powered chatbots can manage multi-turn conversations, track the dialogue state, recognize when users switch topics and respond accordingly.

- Multi-Turn Conversations: NLP-powered chatbots can handle back-and-forth chats. If you start by asking about the weather and then switch to asking about restaurants, the chatbot understands the change and responds accordingly.
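A minimal, keyword-based dialogue-state tracker can illustrate both context management and multi-turn handling: it remembers the current topic, carries it forward when the user says "it" or "that," and flags a switch when a new topic appears. The topics, keywords, and `DialogueState` class are hypothetical, and treating any "it" as a reference to the current topic is a crude stand-in for real coreference resolution.

```python
import re
from typing import Optional

# Hypothetical topics and trigger words.
TOPIC_KEYWORDS = {
    "weather": {"weather", "rain", "sunny", "forecast"},
    "restaurants": {"restaurant", "restaurants", "eat", "dinner"},
}
REFERRING_WORDS = {"it", "that"}


class DialogueState:
    """Keyword-based tracker of the current conversation topic."""

    def __init__(self) -> None:
        self.topic: Optional[str] = None
        self.turns: list[tuple[str, Optional[str]]] = []  # (message, resolved topic)

    def update(self, message: str) -> tuple[Optional[str], bool]:
        """Return (topic this message is about, whether the topic switched)."""
        words = set(re.findall(r"[a-z]+", message.lower()))
        mentioned = next((t for t, kw in TOPIC_KEYWORDS.items() if words & kw), None)
        switched = False
        if mentioned is not None:
            switched = self.topic is not None and mentioned != self.topic
            self.topic = mentioned
            resolved = mentioned
        elif words & REFERRING_WORDS:
            resolved = self.topic  # treat "it"/"that" as referring to the current topic
        else:
            resolved = None
        self.turns.append((message, resolved))
        return resolved, switched


state = DialogueState()
print(state.update("What's the weather in Paris?"))  # ('weather', False)
print(state.update("Will it be cold tomorrow?"))     # ('weather', False)
print(state.update("Any good restaurants nearby?"))  # ('restaurants', True)
```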

Challenges in Creating Natural and Contextually Aware Interactions

Ambiguity and Understanding Context

- Natural language often has multiple meanings, requiring chatbots to correctly disambiguate user queries.

- Understanding and maintaining context across multiple turns can be challenging, with misinterpreted context leading to irrelevant or confusing responses.

- Natural language can be tricky because words often have multiple meanings. Chatbots need to figure out the right meaning based on the context.

- For example, if someone says, “I saw a bat,” the chatbot needs to know if it’s a baseball bat or a flying mammal.

Handling Complex Queries

- Chatbots encounter diverse and complex user queries, necessitating sophisticated NLP models for accurate responses. This includes understanding user emotions, idiomatic expressions, and sarcasm.

- Chatbots face all sorts of complex questions and statements. They need advanced NLP models to understand things like emotions and sarcasm.

- For instance, if a user says, “Great, just what I needed,” the chatbot must grasp if it’s a positive or negative reaction.

Personalization

- Achieving truly personalized interactions is a challenge, as chatbots must adapt to individual user preferences, relying on effective user profiling and recommendation systems.

- Chatbots aim to give each user a personalized experience. To do this, they use your past interactions and preferences to tailor their responses.

- For example, if you always ask for vegetarian restaurant recommendations, the chatbot will remember and suggest veggie-friendly places.

Handling Out-of-Domain Queries

- Chatbots need to gracefully handle questions or requests outside their pre-defined domain of knowledge, knowing when to decline or escalate to a human agent.

- Sometimes users ask chatbots questions outside their area of expertise. Chatbots must know when to say, “I don’t know,” or when to pass the question to a human agent.

- For instance, if you ask a weather chatbot about a historical event, it should realize it’s out of its domain and handle it accordingly.
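One simple policy for out-of-domain queries is sketched below for a hypothetical weather-only bot: answer when the message mentions weather vocabulary, decline otherwise, and escalate to a human agent after repeated misses. The keyword list and the two-miss threshold are assumptions for illustration; real systems typically compare a classifier's confidence score against a tuned threshold.

```python
import re

# Hypothetical vocabulary for a weather-only chatbot.
DOMAIN_KEYWORDS = {"weather", "rain", "snow", "sunny", "temperature", "forecast", "wind"}


class WeatherBot:
    """Answers in-domain messages, declines others, escalates after repeated misses."""

    def __init__(self, max_misses: int = 2) -> None:
        self.max_misses = max_misses  # hypothetical threshold
        self.misses = 0

    def route(self, message: str) -> str:
        words = set(re.findall(r"[a-z]+", message.lower()))
        if words & DOMAIN_KEYWORDS:
            self.misses = 0
            return "answer"    # hand off to a weather lookup (not shown)
        self.misses += 1
        if self.misses >= self.max_misses:
            self.misses = 0
            return "escalate"  # e.g., "Let me connect you with a human agent."
        return "decline"       # e.g., "Sorry, I can only help with weather questions."


bot = WeatherBot()
for msg in ["Will it rain tomorrow?", "Who won the Battle of Hastings?",
            "Tell me about the Roman Empire", "What's the forecast?"]:
    print(msg, "->", bot.route(msg))
# Actions in order: answer, decline, escalate, answer
```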

Advancements in Creating Natural and Contextually Aware Interactions

Transformer-Based Models

- Models like GPT-3 and BERT, based on the transformer architecture, have significantly advanced chatbot capabilities. Encoder models such as BERT excel at understanding context and user intent, while generative models such as GPT-3 excel at producing coherent responses; both handle diverse language patterns well.

- In simple words, models like GPT-3 and BERT are super smart because they’re built on a thing called the transformer. These models are great at understanding what you’re talking about, giving you coherent answers, and handling different ways people talk.

Transfer Learning

- Pre-trained models enable chatbots to leverage knowledge from large datasets, reducing the need for extensive manual data labeling and enhancing their performance through fine-tuning.

- In simple words, chatbots don’t have to start from scratch. They can use pre-trained models, which is like getting a head start in a race: they already know a lot and can learn even more without tons of extra work.

Emotion Recognition

- Advancements in sentiment analysis and emotion recognition enable chatbots to understand user emotions, responding empathetically and appropriately.

- In simple words, chatbots are getting better at knowing how you feel. If you’re happy or sad, they can tell, and they’ll respond in a way that makes sense. For example, if you say you’re sad, the chatbot might say something comforting.

Multi-Modal Interactions

- Chatbots are becoming more versatile by integrating multi-modal capabilities, including text, voice, and images, enhancing the user experience and enabling richer interactions.

- In simple words, chatbots aren’t just about words anymore. They can handle text, voice, and even pictures, so you can talk to them in lots of different ways, making the conversation more fun.

Contextual Memory

- Chatbots increasingly incorporate contextual memory mechanisms, allowing them to remember and reference previous parts of the conversation, improving dialogue coherence.

- Imagine if you had to repeat everything you said in a conversation. That wouldn’t be great, right? Chatbots are getting better at remembering what you’ve said before, so you don’t have to repeat yourself.

Hybrid Approaches

- Combining rule-based systems with NLP-driven chatbots addresses specific domain knowledge and provides structured responses.

- In simple words, some chatbots use a mix of rules and NLP smarts. It’s like having a plan and being flexible at the same time. These chatbots are good at some things and know a lot about specific topics.

Ethical Considerations

- There is a growing focus on ethical chatbot design, including transparency, fairness, and responsible AI usage, ensuring chatbots benefit users without causing harm.

- Chatbots need to be fair and safe. Designers follow ethical guidelines meant to ensure that chatbots treat everyone fairly and avoid causing harm, so that talking to a chatbot can be a safe and friendly experience.

NLP is the cornerstone of chatbot development, enabling them to understand, respond, and engage with users naturally. Challenges such as ambiguity, personalization, and context handling persist, but advancements in transformer-based models, transfer learning, emotion recognition, multi-modal interactions, and ethical considerations continually shape chatbot evolution toward contextually aware and natural conversational agents.

Sentiment Analysis

Sentiment analysis, a crucial application of Natural Language Processing (NLP), involves understanding and interpreting emotions expressed in text. It plays a vital role in various domains, including customer feedback analysis, social media monitoring, and market research. In other words, sentiment analysis is like having a superpower for understanding the feelings behind written words. With it, we can figure out how people feel about things, as expressed in customer reviews, social media posts, and market surveys. It matters in any area where knowing what people think and feel helps make things better.

Let’s delve deeper into the applications and implications of sentiment analysis.

Applications of NLP in Sentiment Analysis

Customer Feedback Analysis

- Overview: Sentiment analysis helps businesses analyze customer feedback to gauge customer satisfaction and identify areas for improvement.

- Use Cases: Companies can automatically classify customer reviews, social media comments, and survey responses as positive, negative, or neutral. This enables them to address issues promptly and enhance customer experiences.

- For example, a restaurant can use sentiment analysis to see if customers are happy with their food by looking at online reviews. If many reviews say the food is delicious, that’s a positive sentiment. But if reviews say the food is bad, that’s a negative sentiment. The restaurant can then work on improving.

Social Media Monitoring

- Overview: Sentiment analysis is widely used to monitor social media platforms for brand mentions, public opinion, and trending topics.

- Use Cases: Businesses can track how their brand is perceived in real time, identify emerging trends, and respond to customer concerns promptly. For example, airlines can monitor Twitter to address passenger complaints and issues.

Market Research

- Overview: Sentiment analysis helps market researchers gauge consumer sentiment toward products, services, and brands.

- Use Cases: By analyzing social media discussions, reviews, and survey responses, businesses can understand consumer preferences, market trends, and competitive landscapes. This informs product development and marketing strategies.

Brand Reputation Management

- Overview: Sentiment analysis aids in assessing and managing brand reputation in the digital landscape.

- Use Cases: Businesses can identify and respond to negative sentiments quickly to prevent potential crises. They can also track the impact of marketing campaigns and adjust strategies accordingly.

Political Analysis

- Overview: Sentiment analysis is applied in politics to gauge public sentiment toward politicians, policies, and political events.

- Use Cases: Political analysts and campaigns use sentiment analysis to understand voter sentiment, assess public reactions to speeches and debates, and tailor messaging accordingly.

Stock Market Forecasting

- Overview: Sentiment analysis is used in financial markets to analyze news articles, social media posts, and investor sentiment.

- Use Cases: Traders and investors leverage sentiment analysis to make informed decisions based on market sentiment. Positive or negative sentiment can influence stock prices and trading strategies.

Implications of Sentiment Analysis

Customer Feedback

- Positive: Identifying positive sentiments in customer feedback can highlight areas of excellence, which can be leveraged for marketing and reputation-building.

- Negative: Detecting negative sentiments allows businesses to address issues, improve product or service quality, and enhance customer retention.

Social Media Monitoring

- Brand Management: Sentiment analysis helps maintain a positive brand image, respond to customer concerns, and mitigate potential PR crises.

- Competitor Analysis: Analyzing sentiment toward competitors provides insights for strategic positioning and product development.

Market Research

- Product Development: Understanding customer sentiment helps in tailoring products and services to meet consumer needs and preferences.

- Marketing Strategy: Sentiment analysis informs marketing strategies by identifying market trends and consumer sentiment toward advertisements.

Brand Reputation Management

- Crisis Management: Detecting negative sentiment early allows businesses to address issues and prevent crises.

- Campaign Evaluation: Sentiment analysis helps assess the effectiveness of marketing campaigns and adjust strategies accordingly.

Political Analysis

- Campaign Strategy: Political campaigns use sentiment analysis to refine messaging, target key demographics, and understand voter sentiment.

- Policy Development: Sentiment analysis can inform policy decisions by assessing public sentiment toward specific issues.

Stock Market Forecasting

- Investment Decisions: Traders and investors use sentiment analysis to inform investment strategies and assess market sentiment’s impact on stock prices.

In short, sentiment analysis in NLP is a versatile tool with applications spanning customer feedback analysis, social media monitoring, market research, brand reputation management, political analysis, and stock market forecasting. It empowers businesses and organizations to make data-driven decisions, enhance customer experiences, and respond effectively to changing sentiments in various domains.

Language Translation

Natural Language Processing (NLP) significantly influences language translation by enabling machine translation systems that bridge language barriers. This section explores why NLP matters for translation and explains how machine translation systems work:

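- Machine Translation Systems: Machine translation automates the conversion of text or speech from one language to another. NLP-based machine translation systems have revolutionized global communication. These systems comprise several essential components:

- Text Preprocessing: Before translation, the input is preprocessed through tasks such as tokenization (breaking text into words or phrases), part-of-speech tagging (categorizing words grammatically), and syntactic analysis (parsing sentence structure).

- Bilingual Corpora: Large collections of parallel text, in which the same content appears in both the source and target languages, supply the examples from which translation systems learn.

- Translation Models: Statistical or neural models learn from these corpora how to map source-language text to target-language text; modern systems typically rely on deep learning, especially transformer-based encoder-decoder networks.

- Challenges in Language Translation:

- Idiomatic Expressions: Idioms such as “raining cats and dogs” cannot be translated word for word without losing their meaning.

- Cultural Nuances: Politeness conventions, cultural references, and connotations often have no direct equivalent in the target language.

- Polysemy: Words with several meanings, such as “bank,” must be disambiguated from context before they can be translated correctly.

- Human-Aided Translation: To surmount these challenges, human-aided translation is frequently employed. In this approach, human translators use machine-generated translations as a starting point and refine them, combining machine efficiency with human linguistic expertise.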

NLP-based machine translation systems have revolutionized global communication by dismantling language barriers. These systems, powered by bilingual corpora, translation models, and deep learning, facilitate efficient translation. Nonetheless, challenges like idiomatic expressions, cultural nuances, and polysemy persist, underscoring the importance of human-aided translation for context-aware, high-quality translations in complex scenarios.

Ethical Considerations

NLP technologies have advanced rapidly, but they also raise significant ethical concerns. Two primary issues are bias in language models and the responsible use of NLP technologies. This section examines these concerns:

- Bias in Language Models

- Language models are typically trained on vast datasets that contain text from the internet, reflecting the inherent biases present in society. These biases can manifest in various ways.

- a. Gender Bias: Language models may exhibit gender bias by associating certain professions, roles, or characteristics with specific genders. For example, they might stereotypically associate “nurse” with women and “engineer” with men.

- b. Racial and Ethnic Bias: Biases based on race or ethnicity can emerge in language models, leading to stereotypes, prejudice, and offensive content.

- c. Socioeconomic Bias: Language models can unintentionally reinforce socioeconomic disparities by favoring certain cultural norms or values.

- d. Political Bias: Political biases may surface in language models, affecting how they respond to politically charged topics or individuals.

- e. Offensive Content: Language models can generate offensive, harmful, or inappropriate content, reflecting the biases present in their training data.
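One simple way to see how such associations enter through training data is to count how often occupation words co-occur with gendered pronouns. The sketch below uses a tiny made-up corpus and hypothetical word lists; published bias audits rely on far larger corpora and more careful measures (for example, embedding association tests), so this only illustrates the idea:

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical pronoun lists used as gender markers.
MALE = {"he", "him", "his"}
FEMALE = {"she", "her", "hers"}


def gender_cooccurrence(corpus: List[str], occupations: List[str]) -> Dict[str, Counter]:
    """For each occupation, count sentences that also contain male or female pronouns."""
    counts = {occ: Counter() for occ in occupations}
    for sentence in corpus:
        tokens = set(re.findall(r"[a-z]+", sentence.lower()))
        for occ in occupations:
            if occ in tokens:
                counts[occ]["male"] += bool(tokens & MALE)
                counts[occ]["female"] += bool(tokens & FEMALE)
    return counts


def skew(c: Counter) -> float:
    """Share of gendered co-occurrences that are male: 0.5 = balanced, 1.0 = only male."""
    total = c["male"] + c["female"]
    return c["male"] / total if total else 0.5


if __name__ == "__main__":
    corpus = [  # made-up example sentences
        "The nurse said she would check the chart.",
        "She has worked as a nurse for ten years.",
        "The engineer explained his design.",
        "He is an engineer at a software company.",
        "The engineer said she would review the code.",
    ]
    for occ, c in gender_cooccurrence(corpus, ["nurse", "engineer"]).items():
        print(occ, dict(c), round(skew(c), 2))
```

A model trained on text with skewed counts like these tends to absorb the same skew, which is why debiasing work often starts by auditing the data.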

Ethical concerns in NLP, such as bias in language models and the responsible use of NLP technologies, are critical issues that demand attention. Mitigating bias, ensuring fairness, protecting privacy, and adhering to ethical principles are essential steps in addressing these concerns and promoting the responsible and ethical development and deployment of NLP technologies.

Future Directions and Challenges

Emerging trends and potential future developments in NLP include:

- Multilingual and Cross-Lingual Models

- The development of models that can understand and generate text in multiple languages is a growing trend. Multilingual models like mBERT and XLM-R have shown promise in enabling NLP applications across diverse linguistic contexts. Cross-lingual models aim to bridge language barriers, facilitating communication and information access.

- Low-Resource Languages

- Researchers are increasingly focusing on low-resource languages that lack extensive training data. Improving NLP capabilities for these languages is essential for global inclusivity and access to information. Zero-shot and few-shot learning techniques are being explored to adapt models to new languages with limited data.

- Conversational AI

- The development of more human-like conversational agents and chatbots is a prominent trend. These agents aim to provide natural and contextually aware interactions, making them valuable in customer service, virtual assistants, and education. Models like GPT-3 and ChatGPT have demonstrated impressive conversational abilities.

- Explainable AI (XAI)

- As NLP models become more complex, the need for model interpretability and transparency is growing. Research into XAI aims to make NLP models more understandable and accountable. Techniques like attention visualization, saliency maps, and rule-based explanations are emerging to shed light on model decisions.

- Ethical AI

- Addressing ethical concerns in NLP is a pressing trend. Researchers and practitioners are working on reducing bias in language models, ensuring fairness in applications, and implementing responsible AI practices. Efforts include bias mitigation techniques, ethical guidelines, and regulations to govern NLP technologies.

- Domain-Specific NLP

- Tailoring NLP models for specific domains like healthcare, legal, and finance is gaining traction. Specialized models and fine-tuning techniques are being developed to improve accuracy and applicability in these areas.

- Multimodal AI

- Integrating text with other modalities like images, audio, and video is an emerging trend. Multimodal models enable NLP systems to understand and generate content based on multiple data sources, enhancing applications in content generation, sentiment analysis, and more.

- Transfer Learning

- Transfer learning, where pre-trained models are fine-tuned for specific tasks, is expected to continue to advance. Models like BERT and GPT have paved the way for this approach, making it easier to develop NLP applications with limited labeled data.
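The Explainable AI item above mentions saliency maps. A simple, model-agnostic relative of gradient-based saliency is occlusion (leave-one-out) attribution: remove each token in turn and measure how much the model's score changes. The sketch below applies it to a hypothetical bag-of-words scorer that stands in for a real classifier:

```python
from typing import Callable, List, Tuple

# Hypothetical toy model: a bag-of-words linear scorer standing in for a real classifier.
WEIGHTS = {"refund": -1.0, "broken": -2.0, "fast": 1.0, "helpful": 1.5}


def toy_model(tokens: List[str]) -> float:
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def occlusion_saliency(
    tokens: List[str], model: Callable[[List[str]], float]
) -> List[Tuple[str, float]]:
    """Importance of each token = model score with the token minus score without it."""
    base = model(tokens)
    scores = []
    for i, tok in enumerate(tokens):
        occluded = tokens[:i] + tokens[i + 1:]  # drop only the i-th token
        scores.append((tok, base - model(occluded)))
    return scores


if __name__ == "__main__":
    sentence = "support was helpful but item arrived broken".split()
    for token, importance in occlusion_saliency(sentence, toy_model):
        print(f"{token:>8} {importance:+.2f}")
```

For a linear scorer like this one, occlusion simply recovers each word's weight; the technique becomes informative for nonlinear models, where a token's effect depends on its context.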

Researchers and practitioners in NLP face several challenges, including:

- Model Interpretability

- Increasing model complexity poses challenges for understanding why models make specific predictions. Achieving better interpretability is crucial, especially in applications like healthcare and finance, where decisions have significant consequences.

- Bias and Fairness

- Bias in training data and models can perpetuate societal biases. Mitigating bias and ensuring fairness in NLP applications remains a complex challenge. Researchers are exploring debiasing techniques and fairness metrics.

- Data Privacy

- NLP models often handle sensitive user data. Ensuring data privacy and complying with regulations like GDPR is a continuous challenge. Techniques like federated learning and secure multi-party computation are being explored.

- Resource Intensiveness

- Large-scale pre-trained models demand substantial computational resources, making them inaccessible for many researchers and organizations. Developing lightweight and efficient models is a research priority.

- Evaluation Metrics

- Existing evaluation metrics for NLP tasks may not always capture the nuances of model performance. Developing more informative and context-aware evaluation metrics is essential for meaningful assessments.

- Scaling Up

- As NLP models continue to grow in size, scaling up infrastructure to support these models becomes challenging. Efficient model parallelism and distributed training are areas of active research.

- Adaptation to Specific Domains

- Adapting general-purpose NLP models to domain-specific tasks can be challenging, as they may not have sufficient domain-specific knowledge. Fine-tuning approaches need to be improved.

- Robustness and Security

- Ensuring the robustness of NLP models against adversarial attacks and maintaining the security of deployed systems are ongoing concerns, especially in applications like cybersecurity and authentication.
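The federated learning approach mentioned under Data Privacy can be sketched through the server-side aggregation step of federated averaging (FedAvg). Clients train locally on their own text and send only model parameters, and the server averages those parameters weighted by each client's number of training examples. The parameter vectors and client sizes here are hypothetical, and real deployments add local training, many rounds, and secure aggregation that this sketch omits:

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]
) -> List[float]:
    """Weighted average of client parameter vectors (the FedAvg aggregation step)."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one non-empty weight vector per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("client sizes must sum to a positive number")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same parameter shape")
    # Each parameter is averaged across clients, weighted by local dataset size.
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical clients: one with 100 local examples, one with 300.
    print(federated_average([[1.0, 0.0], [3.0, 2.0]], [100, 300]))
```

Only the averaged parameters leave the server, so raw user text never has to be centralized; the weighting gives clients with more data proportionally more influence.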

Addressing these challenges and capitalizing on emerging trends will be crucial for the responsible and effective development and deployment of NLP technologies in the future.

Natural Language Processing (NLP) has enabled innovative applications that streamline processes, enhance customer experiences, and extract valuable insights from unstructured text data, making a significant impact across various industries.

Here, we present real-world examples of successful NLP applications in different domains:

Healthcare

- a. NLP-powered tools analyze medical notes and transcripts to identify discrepancies and improve the accuracy of clinical documentation, reducing errors, ensuring proper coding, and optimizing reimbursement for healthcare providers.

- b. NLP models analyze electronic health records (EHRs) and patient histories to identify potential health risks and assist in early diagnosis. Similar techniques applied to public text can also help anticipate disease outbreaks, such as predicting flu outbreaks from social media data.

- c. Healthcare chatbots powered by NLP offer personalized responses to patient inquiries, schedule appointments, provide medication reminders, and offer telehealth services, improving patient engagement and access to care.

Customer Service and Support

- a. Businesses use NLP-driven chatbots and virtual assistants to provide 24/7 customer support, answer queries, troubleshoot issues, and guide customers through processes, reducing response times and improving customer satisfaction.

- b. NLP is used to analyze customer feedback, reviews, and social media comments to gauge sentiment and identify trends, allowing companies to gain insights into customer opinions, identify areas for improvement, and adapt their strategies accordingly.
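The support chatbots described above usually begin with intent matching: mapping a customer's message to one of a fixed set of known intents. A minimal sketch using word-overlap (Jaccard) similarity follows; the intents, keywords, replies, and threshold are all hypothetical, and production assistants typically use trained intent classifiers or language models instead:

```python
import re
from typing import Dict, Optional, Set, Tuple

# Hypothetical intents: keyword set and canned reply for each.
INTENTS: Dict[str, Tuple[Set[str], str]] = {
    "reset_password": ({"reset", "password", "forgot", "login"},
                       "You can reset your password from the sign-in page."),
    "track_order": ({"where", "order", "track", "shipping", "delivery"},
                    "Please share your order number so I can track it."),
    "refund": ({"refund", "return", "money", "back"},
               "Refunds are processed after we receive the returned item."),
}
FALLBACK = "Let me connect you with a human agent."


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def respond(message: str, threshold: float = 0.1) -> Tuple[str, str]:
    """Return (intent name, reply) for the best-overlapping intent, or a fallback."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    best_intent: Optional[str] = None
    best_score = 0.0
    for name, (keywords, _) in INTENTS.items():
        score = jaccard(tokens, keywords)
        if score > best_score:
            best_intent, best_score = name, score
    if best_intent is None or best_score < threshold:
        return "fallback", FALLBACK  # escalate when nothing matches well enough
    return best_intent, INTENTS[best_intent][1]


if __name__ == "__main__":
    for msg in ["I forgot my password and can't login", "Where is my order?", "What are your opening hours?"]:
        print(respond(msg))
```

The fallback to a human agent reflects a common design choice: when confidence is low, escalating is usually better than guessing.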

Finance

- a. NLP helps financial institutions process news articles, earnings reports, and social media posts to gauge market sentiment and inform trading decisions. Real-time insight into market sentiment is particularly valuable for traders.

- b. NLP models analyze vast amounts of unstructured financial data to assess credit risk, detect fraudulent activities, and monitor compliance with regulatory requirements.

- c. NLP-powered tools extract key insights and summaries from financial news articles, enabling traders and analysts to quickly access relevant information.
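Summarization tools like those in the Finance examples can be extractive (selecting existing sentences) or abstractive (generating new text). As a minimal sketch of the extractive approach, assuming a simple word-frequency heuristic rather than any particular product's method, each sentence is scored by the average article-wide frequency of its non-stopword terms, and the top-scoring sentences are returned in their original order:

```python
import re
from collections import Counter
from typing import List

# Small illustrative stopword list (an assumption; real systems use fuller lists).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "for", "on", "is",
             "was", "its", "by", "with", "at", "as", "that"}


def content_words(text: str) -> List[str]:
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def summarize(text: str, max_sentences: int = 2) -> List[str]:
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favored just for their length.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original order
    return [sentences[i] for i in keep]


if __name__ == "__main__":
    article = ("Acme reported record quarterly revenue. Revenue rose on strong cloud demand. "
               "The weather was mild. Acme raised its revenue guidance.")
    print(summarize(article))
```

On this made-up article, the off-topic sentence about the weather scores lowest because its words appear only once, while sentences built around recurring terms such as "revenue" and "Acme" are kept.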

Legal

- a. NLP applications review and extract relevant clauses, terms, and obligations from legal contracts, enhancing contract management and due diligence processes.

- b. NLP-powered search engines for legal documents provide lawyers and legal professionals with faster and more accurate access to case law, statutes, and legal literature.
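Clause extraction can range from trained sequence-labeling models to hand-written rules. A minimal rule-based sketch, assuming clauses are numbered at the start of a line and using a hypothetical keyword-to-category map, splits a contract into clauses and tags those that mention obligations, termination, or confidentiality:

```python
import re
from typing import Dict, List, Tuple

# Hypothetical keyword map; real systems learn categories from annotated contracts.
CATEGORIES: Dict[str, Tuple[str, ...]] = {
    "obligation": ("shall", "must", "agrees to"),
    "termination": ("terminate", "termination"),
    "confidentiality": ("confidential", "confidentiality"),
}


def split_clauses(contract: str) -> List[str]:
    """Split before each line that starts with a clause number such as '1.' or '12.'."""
    parts = re.split(r"\n\s*(?=\d+\.\s)", contract.strip())
    return [p.strip() for p in parts if p.strip()]


def tag_clauses(contract: str) -> List[Tuple[str, List[str]]]:
    """Return each clause with the categories whose keywords appear as whole words."""
    tagged = []
    for clause in split_clauses(contract):
        lowered = clause.lower()
        tags = [
            category
            for category, keywords in CATEGORIES.items()
            if any(re.search(rf"\b{re.escape(k)}\b", lowered) for k in keywords)
        ]
        tagged.append((clause, tags))
    return tagged


if __name__ == "__main__":
    contract = (
        "1. The Supplier shall deliver the goods within 30 days.\n"
        "2. Either party may terminate this Agreement with 60 days' notice.\n"
        "3. This Agreement is governed by the laws of the State of New York."
    )
    for clause, tags in tag_clauses(contract):
        print(tags, "|", clause)
```

Matching on word boundaries avoids false hits such as "must" inside "mustard", but keyword rules still miss paraphrases, which is where trained models earn their place.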

E-commerce and Retail

- a. NLP models analyze customer behavior, reviews, and product descriptions to provide personalized product recommendations, thereby increasing conversion rates and customer satisfaction.

- b. E-commerce platforms use NLP to analyze product reviews and feedback, helping businesses identify quality issues, understand customer preferences, and improve products.
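The recommendation use case can be illustrated with content-based filtering over product descriptions. As a minimal sketch, assuming a hypothetical catalog and plain TF-IDF weighting (real recommenders usually combine text with behavioral signals such as purchases and clicks), each description becomes a TF-IDF vector, and the items most cosine-similar to the product a customer viewed are recommended:

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tfidf_vectors(docs: Dict[str, str]) -> Dict[str, Dict[str, float]]:
    tokenized = {k: re.findall(r"[a-z]+", v.lower()) for k, v in docs.items()}
    n = len(docs)
    df = Counter(term for toks in tokenized.values() for term in set(toks))
    vectors = {}
    for key, toks in tokenized.items():
        tf = Counter(toks)
        # Smoothed IDF, log((1 + n) / (1 + df)) + 1, the same form scikit-learn uses by default.
        vectors[key] = {
            t: (c / len(toks)) * (math.log((1 + n) / (1 + df[t])) + 1)
            for t, c in tf.items()
        }
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def recommend(catalog: Dict[str, str], viewed: str, k: int = 2) -> List[str]:
    """Return the k items whose descriptions are most similar to the viewed item."""
    vecs = tfidf_vectors(catalog)
    others = [item for item in catalog if item != viewed]
    others.sort(key=lambda item: cosine(vecs[viewed], vecs[item]), reverse=True)
    return others[:k]


if __name__ == "__main__":
    catalog = {  # hypothetical product descriptions
        "trail-shoe": "lightweight waterproof trail running shoe",
        "road-shoe": "cushioned road running shoe",
        "rain-jacket": "waterproof hiking rain jacket",
        "coffee-mug": "ceramic coffee mug",
    }
    print(recommend(catalog, "trail-shoe"))
```

Here the road shoe ranks first because it shares two weighted terms ("running", "shoe") with the viewed item, the rain jacket second via "waterproof", and the mug, with no shared terms, last.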

Social Media and Marketing

- a. Brands employ NLP to track mentions, sentiments, and trends on social media platforms. This data helps companies respond to customer inquiries, manage their online reputation, and refine marketing strategies.

- b. NLP-powered tools generate marketing content, including product descriptions, advertisements, and social media posts, allowing businesses to automate content creation tasks.

Education

- a. NLP-based grading systems assess written assignments and exams, providing faster feedback to students and saving educators time.

- b. Language learning apps leverage NLP for speech recognition, translation, and language proficiency assessments.

Conclusion

NLP is transforming daily life, improving how we communicate with machines and opening new possibilities. Its usefulness is evident in virtual assistants, language translation, and more, where it streamlines tasks and improves accessibility. Despite these benefits, challenges such as bias and other ethical concerns must be addressed. Looking ahead, NLP holds promise for overcoming these hurdles, unlocking greater potential for innovation, and transforming the way we interact with technology.