The Rise of Quantum Computing: What You Need to Know

We are all surrounded by technology, with a steady stream of new data trends and industries, and most of us know the basics of these technologies and their applications.

But do we all know what a technology can actually do for companies, and what problems they may face when using it? Understanding both is critical for adoption.

The journey begins with a discussion of what quantum computing means for IT workers, groups, and individuals.

In today's blog I will cover the following topics:

- History of Quantum Computing

- Quantum Computing: What It Is, Why We Want It, and How We’re Trying to Get It

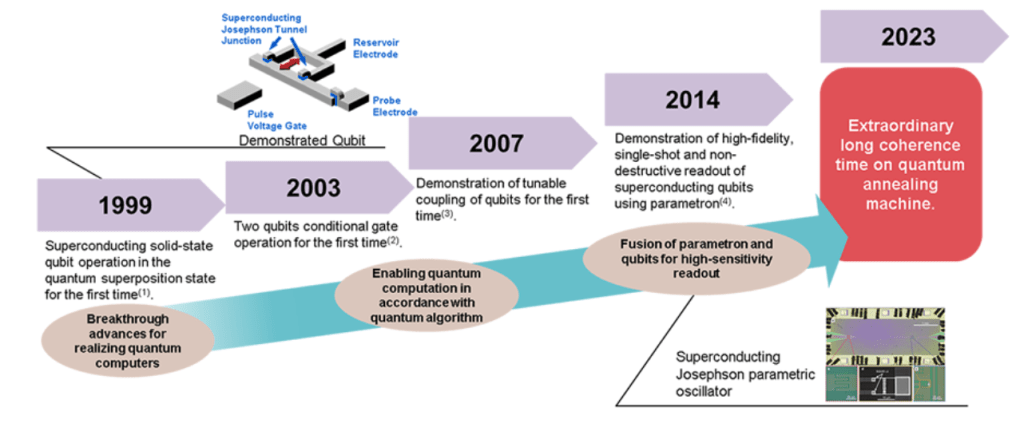

History of Quantum Computing:

Quantum mechanics emerged as a branch of physics in the early 1900s to explain nature on the scale of atoms and led to advances such as transistors, lasers, and magnetic resonance imaging.

- Merging of quantum mechanics and information theory:

The idea to merge quantum mechanics and information theory arose in the 1970s but garnered little attention until 1982, when physicist Richard Feynman gave a talk in which he reasoned that computing based on classical logic could not tractably process calculations describing quantum phenomena. Computing based on quantum phenomena configured to simulate other quantum phenomena, however, would not be subject to the same bottlenecks. Although this application eventually became the field of quantum simulation, it didn’t spark much research activity at the time.

In 1994, however, interest in quantum computing rose dramatically when mathematician Peter Shor developed a quantum algorithm that could find the prime factors of large numbers efficiently.

Here, “efficiently” means in a time of practical relevance, which is beyond the capability of state-of-the-art classical algorithms. Although this may seem simply like an oddity, it is hard to overstate the importance of Shor’s insight. The security of a large share of online transactions today relies on public-key cryptosystems such as RSA, which hinges on the intractability of the factoring problem for classical algorithms.
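To make the link between factoring and Shor's insight more concrete, here is a minimal sketch of the classical reduction the algorithm relies on: splitting a number `n` reduces to finding the period `r` of `a**x % n`. In this sketch the period is found by brute force, which becomes impractically slow as `n` grows; that one step is the part a quantum computer is expected to speed up. The function names are illustrative only and do not come from any library.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force; requires gcd(a, n) == 1)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_period(n: int, a: int):
    """Try to split n using base a; returns a factor pair, or None if this base fails."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with another base
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1: retry with another base
    p = gcd(half - 1, n)
    return p, n // p

print(factor_via_period(15, 7))  # (3, 5)
```

For toy numbers such as 15 or 21 the brute-force loop finishes instantly, but its running time grows exponentially with the number of digits in `n`, which is why the same idea is useless against real RSA keys on classical hardware.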

What is Quantum Computing:

Quantum computing is a type of computing that uses quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data. Unlike traditional computing, which uses binary digits or bits (0s and 1s) to represent and process data, quantum computing uses quantum bits or qubits, which can exist in a superposition of 0 and 1. For certain problems, this allows algorithms that are believed to be out of practical reach for traditional computing.

Quantum computing has the potential to speed up some complex optimization problems, which would make it a valuable tool for fields such as finance, logistics, and transportation. The related field of quantum cryptography also offers the potential for encryption whose security rests on the laws of physics rather than on computational hardness, which is valuable for secure communication. However, quantum computing is still in its early stages of development, and there are many technical challenges that need to be overcome before it can reach its full potential.
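As a rough illustration of what a qubit in superposition looks like numerically, the sketch below models a single qubit as a pair of complex amplitudes and applies a Hadamard gate to it. This is a plain classical simulation written for this post (the names `ZERO`, `hadamard`, and `probabilities` are my own), not code for real quantum hardware.

```python
import math

# A single-qubit state is a pair of complex amplitudes (amp0, amp1)
# with |amp0|^2 + |amp1|^2 == 1. ZERO is the state |0>.
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def probabilities(state):
    """Born rule: measurement probabilities are the squared magnitudes of the amplitudes."""
    return tuple(abs(a) ** 2 for a in state)

plus = hadamard(ZERO)
print(probabilities(plus))            # roughly (0.5, 0.5)
print(probabilities(hadamard(plus)))  # roughly (1.0, 0.0): the amplitudes interfere back to |0>
```

The second print is the interesting one: applying the same gate twice does not randomize the qubit further, because amplitudes can cancel as well as add. That interference is what quantum algorithms exploit.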

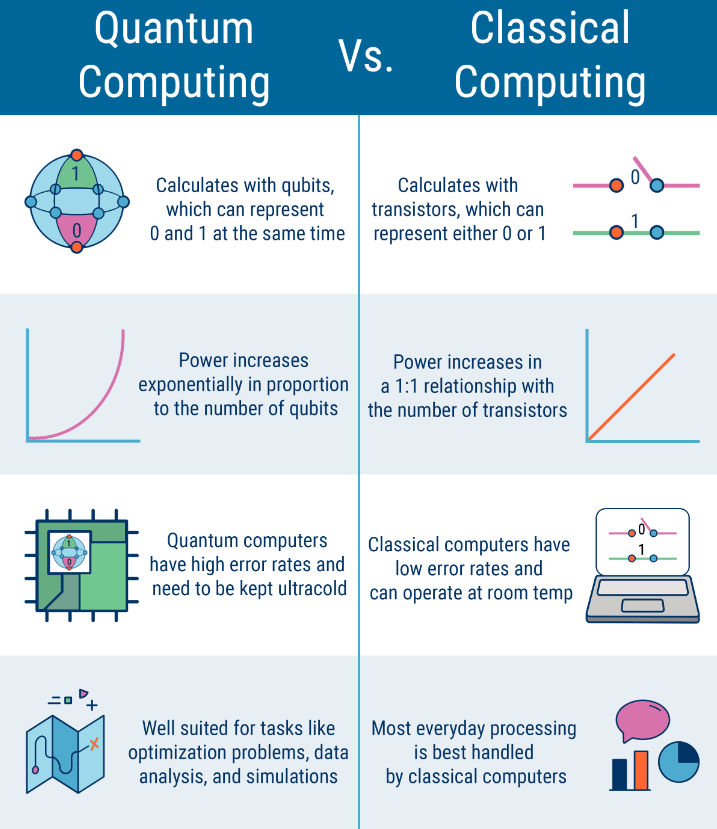

Quantum Computing Vs. Classical Computing:

Quantum computing and classical computing are two fundamentally different approaches to computing that operate on different principles. Here are some of the key differences between the two:

- Bits vs. qubits: Classical computers operate on bits, which are binary digits that can be either 0 or 1. Quantum computers, on the other hand, operate on qubits, which are quantum bits that can exist in a superposition of 0 and 1. This allows quantum computers to perform certain calculations much faster than classical computers.

- Superposition and entanglement: In quantum computing, qubits can exist in a state of superposition, which is often described as being both 0 and 1 at the same time until measured. Additionally, qubits can be entangled, which means that the measurement outcomes of one qubit are correlated with those of another qubit, regardless of their distance from each other (although this cannot be used to send information faster than light). For certain problems, such as factoring, these quantum phenomena allow quantum computers to run exponentially faster than the best known classical algorithms.

- Error correction: Classical computers use error correction to ensure that their calculations are accurate. In quantum computing, however, error correction is much more difficult because the state of a qubit can be affected by its environment. This is one of the biggest challenges in developing practical quantum computers.

- Applications: While classical computers are well-suited for tasks like word processing, spreadsheets, and internet browsing, quantum computers are better suited for tasks like cryptography, optimization, and simulating complex systems.
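The entanglement point in the list above can be sketched with a small classical simulation of two qubits. The code below prepares the standard Bell state (a Hadamard on the first qubit followed by a CNOT) and samples measurements from it. The helper names and the fixed random seed are my own choices for illustration.

```python
import math
import random

def hadamard_on_first(state):
    """Hadamard on the first (left) qubit of a two-qubit state [a00, a01, a10, a11]."""
    a00, a01, a10, a11 = state
    s = 1 / math.sqrt(2)
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample one joint outcome ('00', '01', '10' or '11') using the Born rule."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=weights)[0]

bell = cnot(hadamard_on_first([1, 0, 0, 0]))  # (|00> + |11>) / sqrt(2)
rng = random.Random(0)
samples = [measure(bell, rng) for _ in range(1000)]
print(set(samples))  # only '00' and '11' occur: the two bits always agree
```

Each qubit on its own looks like a fair coin flip, yet the pair never disagrees. Note that this simulation stores all four amplitudes explicitly; the number of amplitudes doubles with every added qubit, which is one reason classical simulation of large quantum systems becomes impractical.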

Why do we use quantum computing?

Quantum computing is used because it has the potential to solve problems that classical computers are unable to solve efficiently. Here are some of the key reasons why we use quantum computing:

Faster computation:

Quantum computers can perform certain calculations much faster than classical computers. This is because quantum algorithms exploit superposition and interference among qubit states. For some problems the speedup over the best known classical algorithms is exponential, while for others it is more modest.
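One well-known example of a quantum speedup is Grover's search algorithm, which finds a marked item among N candidates in roughly sqrt(N) steps instead of the roughly N steps a classical scan needs (a quadratic rather than exponential speedup). The sketch below simulates it classically on a small list of amplitudes; the function name and parameters are my own, and the simulation itself is of course not faster than a classical search.

```python
import math

def grover_search(num_qubits: int, marked: int) -> list:
    """Simulate Grover's algorithm; returns the final probability of each basis state."""
    n = 2 ** num_qubits
    amps = [1 / math.sqrt(n)] * n  # uniform superposition over all candidates
    iterations = math.floor(math.pi / 4 * math.sqrt(n))
    for _ in range(iterations):
        amps[marked] = -amps[marked]         # oracle: flip the sign of the marked item
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]  # diffusion: reflect every amplitude about the mean
    return [a * a for a in amps]

probs = grover_search(num_qubits=3, marked=5)
print(round(probs[5], 3))  # 0.945
```

With 8 candidates, two iterations are enough to push the probability of measuring the marked item from 1/8 to about 0.945.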

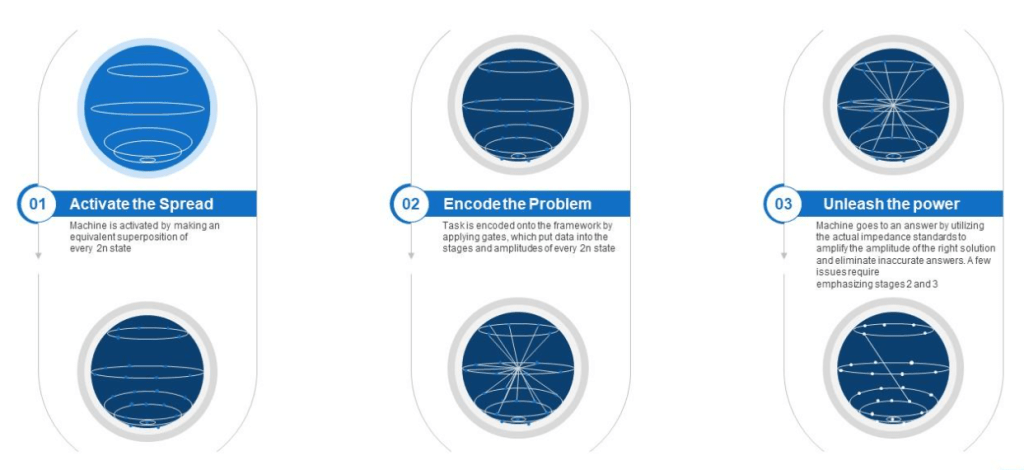

The image above depicts the mechanism that makes quantum computers faster than classical computers and how a problem is encoded in a quantum computer. It shows three stages: activate the spread, encode the problem, and unleash the power.

Solving complex problems:

Quantum computing is well-suited for certain complex problems that are intractable for classical computers. For example, quantum computers could be used for simulating complex chemical reactions, optimizing complex systems, and breaking widely used public-key cryptography.

Developing new materials:

Quantum computing can be used to simulate the behavior of materials at the quantum level. This allows scientists to develop new materials with unique properties that could have important applications in areas like energy storage and electronics.

Improving machine learning:

Quantum computing may help improve machine learning algorithms. For example, researchers are exploring whether quantum computers can train some models more efficiently, which could allow them to learn from larger datasets and make more accurate predictions.

How are we trying to get quantum computing?

Quantum computing is a rapidly evolving field, and there are several approaches being pursued to build practical quantum computers. Here are a few of the most promising ones:

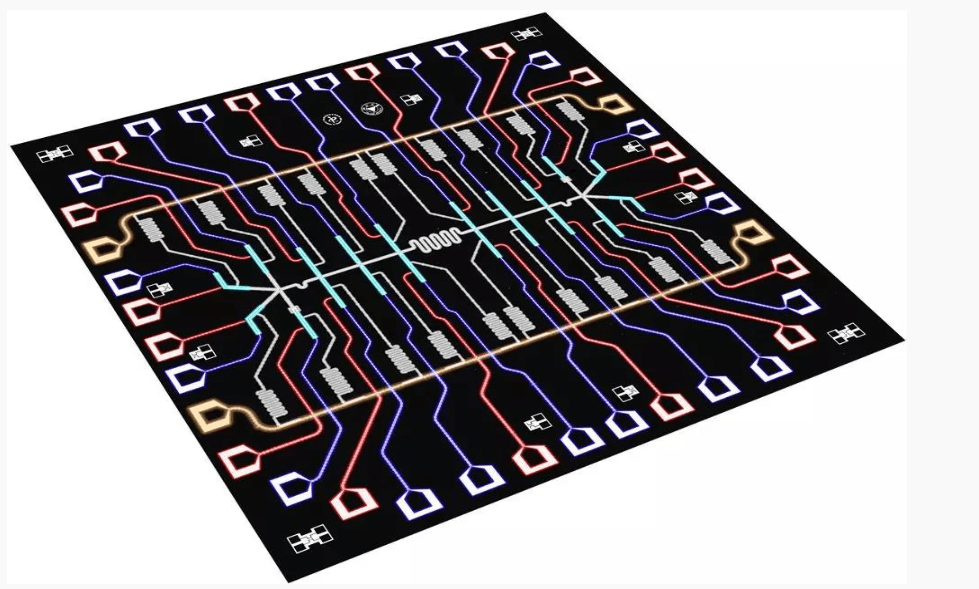

- Superconducting qubits: This is currently one of the most advanced and widely used approaches. In this approach, qubits are created using superconducting circuits, which are cooled to extremely low temperatures to reduce decoherence and allow quantum operations to be performed. Several companies such as IBM, Google, and Rigetti are actively developing superconducting quantum computers.

For reference, see ‘Anatomy of Superconducting Qubit’, which walks through the detailed architecture of superconducting qubits.

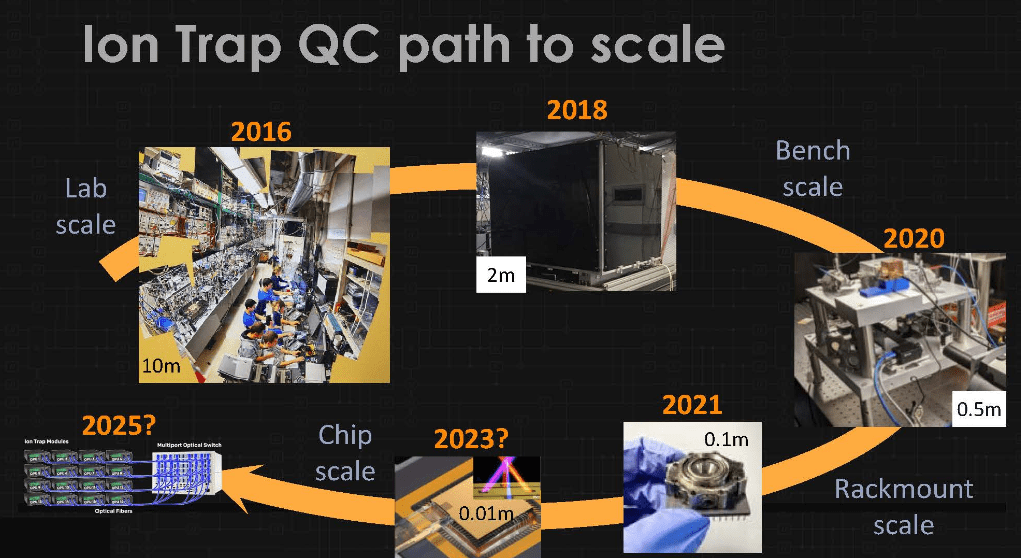

- Ion trap quantum computers: In this approach, individual ions are trapped and manipulated using electromagnetic fields to form qubits. Ion trap quantum computers have demonstrated high levels of qubit coherence, but are currently limited by scalability.

It is worth seeing how ion trap quantum computers have evolved, technically and elegantly, over their journey from 2016 to today (2025).

- Photonic quantum computers: This approach uses photons (particles of light) to create and manipulate qubits. Photonic quantum computers are promising because they can potentially be integrated with existing fiber-optic communication networks. However, they currently suffer from low success rates and limited scalability.

- Topological qubits: This is a relatively new approach that uses topological properties of materials to create qubits that are more robust against decoherence. Microsoft is one of the leading companies pursuing this approach.

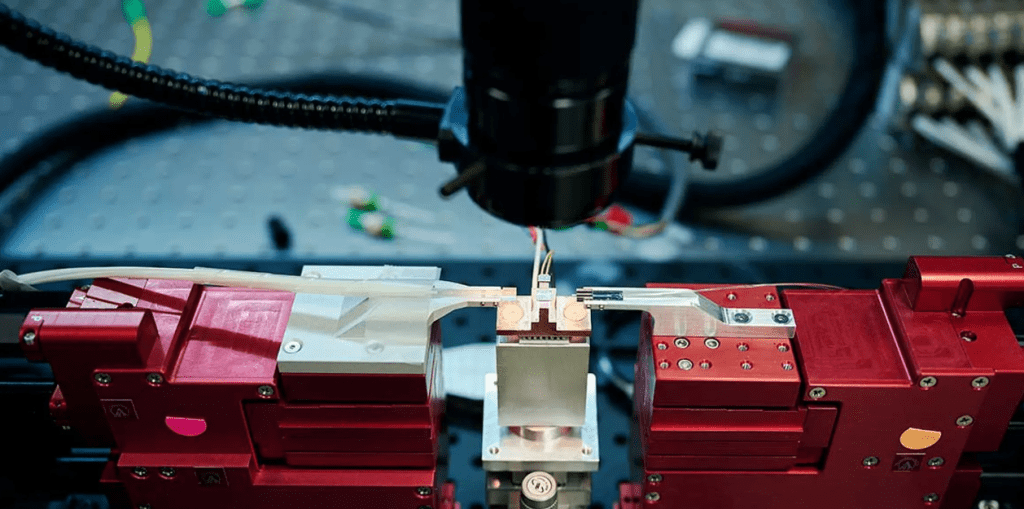

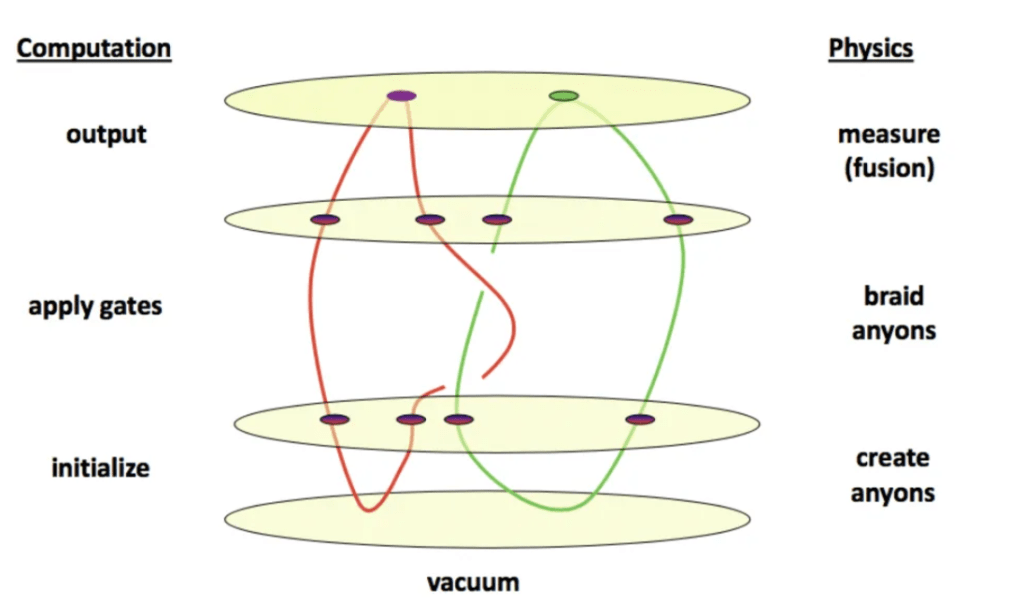

Now I will explain how “topological qubits” can actually be made. This is real science, drawing on physics models and experimental techniques.

Get ready to revisit your school days, when we used to do experiments in our physics labs.

To make topological qubits, we need to implement a physical system that exhibits topological properties. One approach is to use topological superconductors, which are materials that can host Majorana fermions, a type of quasiparticle that behaves as its own antiparticle and is predicted to be useful for topological quantum computing. Here are some general steps for making topological qubits using this approach:

Fabricate a topological superconductor:

Create a material that has the necessary topological properties, such as a one-dimensional nanowire made of a semiconductor material coupled to a superconductor.

Create a Josephson junction:

A Josephson junction is a weak link between two superconductors that allows Cooper pairs of electrons to transfer between them. It can be made by creating a constriction in the nanowire or by using a superconducting electrode.

Form a Majorana fermion:

The Josephson junction creates a region where the superconducting pairing potential vanishes, which can trap a Majorana fermion. This can be achieved by controlling the magnetic field or the gate voltage applied to the nanowire.

Encode a qubit:

Two Majorana fermions can be used to encode a single qubit, with the qubit states corresponding to whether the fermionic mode shared by the pair of Majorana fermions at the ends of the nanowire is empty or occupied.

Perform quantum operations:

Quantum operations such as single-qubit rotations and two-qubit gates can be performed by manipulating the magnetic field or gate voltage applied to the nanowire.

Read out the qubit:

The state of the qubit can be read out by measuring the conductance of the nanowire, which is sensitive to the presence or absence of the Majorana fermions.
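The "Encode a qubit" step above can be illustrated with a purely algebraic sketch. It does not model the nanowire or any real device. As an assumption for illustration, the two Majorana operators are represented by the Pauli X and Y matrices (a standard textbook mapping), and the helper names are my own. The code checks the defining properties of Majorana operators and builds the occupation operator of the mode they share.

```python
# 2x2 complex matrices as nested lists; helper names are illustrative.
I2 = [[1, 0], [0, 1]]
GAMMA1 = [[0, 1], [1, 0]]      # Pauli X stands in for the first Majorana operator
GAMMA2 = [[0, -1j], [1j, 0]]   # Pauli Y stands in for the second

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def add(a, b):
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]

def scale(c, a):
    return [[c * a[i][j] for j in range(2)] for i in range(2)]

# Each Majorana operator squares to the identity (it is its own antiparticle)...
assert matmul(GAMMA1, GAMMA1) == I2 and matmul(GAMMA2, GAMMA2) == I2
# ...and the two operators anticommute.
assert add(matmul(GAMMA1, GAMMA2), matmul(GAMMA2, GAMMA1)) == [[0, 0], [0, 0]]

# Occupation of the shared fermionic mode: n = (I + i * gamma1 * gamma2) / 2
occupation = scale(0.5, add(I2, scale(1j, matmul(GAMMA1, GAMMA2))))
print(occupation)  # diagonal matrix with entries 0 and 1: the two qubit states
```

The occupation operator has exactly two eigenvalues, 0 and 1, and these are the two levels used as the qubit. Because the mode is shared between two spatially separated Majorana fermions, a purely local disturbance at one end of the wire cannot easily flip it, which is the intuition behind the robustness claimed for topological qubits.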

Final Takeaway:

In conclusion, the rise of quantum computing is a major development in the world of IT, with the potential to revolutionize the way we process and analyze data. While there are still challenges to overcome, the promise of physics-based secure communication and faster optimization makes quantum computing an exciting field to watch in the coming years.
