Multi-tenant Architecture for Airflow Deployment

Introduction to Airflow

Airflow is an open-source workflow management platform for data engineering pipelines. It started at Airbnb in October 2014 as a solution for managing the company’s increasingly complex workflows. Creating Airflow allowed Airbnb to programmatically author and schedule its workflows and monitor them through the built-in Airflow user interface. The project was open source from the beginning, becoming an Apache Incubator project in March 2016 and a top-level Apache Software Foundation project in January 2019.[1] In this post we give an overview of a multi-tenant architecture for deploying Airflow on AWS.

What is multi-tenancy?

In a multi-tenant architecture, multiple instances of an application (such as Dev, Test, and Prod) operate in a shared environment. This architecture works because each tenant is physically integrated but logically separated, meaning that a single instance of the software runs on one server and serves multiple tenants.[2]

Motivation

Solution architects face one of the most tedious jobs: deciding on a multi-tenant architecture for an application. This involves reviewing as many existing architectures as possible and then coming up with a new version that improves on its predecessors. Because Airflow is a relatively recent advancement in the data engineering field, many of us initially struggle to decide on the best architecture. I recently worked on an architecture suitable for a multi-tenant Airflow deployment on Amazon Web Services, and I felt it should be shared with the wider community.

Development Requirements

The first and only requirement is a simple Airflow deployment on AWS that supports multi-tenancy. The DAG code should be synced across all environments to avoid discrepancies.
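
One way to check the sync requirement is to fingerprint each environment's DAG folder and compare the results. The sketch below is only an illustration and not part of the deployment described in this post: the function names `dags_fingerprint` and `out_of_sync`, and the assumption that every environment's dags folder is reachable as a local path, are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def dags_fingerprint(dags_dir: str) -> str:
    """Return a SHA-256 digest over the relative paths and contents of all .py files."""
    root = Path(dags_dir)
    digest = hashlib.sha256()
    # Sort so the digest does not depend on filesystem listing order.
    for path in sorted(root.rglob("*.py")):
        digest.update(path.relative_to(root).as_posix().encode("utf-8"))
        digest.update(b"\0")
        digest.update(path.read_bytes())
        digest.update(b"\0")
    return digest.hexdigest()


def out_of_sync(env_dirs: Dict[str, str], reference: str) -> List[str]:
    """List the environments whose DAG folder differs from the reference environment."""
    expected = dags_fingerprint(env_dirs[reference])
    return [
        env
        for env, path in env_dirs.items()
        if dags_fingerprint(path) != expected
    ]
```

Calling `out_of_sync` with a mapping of environment names to folder paths and a reference environment returns the names of the environments that have drifted from the reference. An empty list means every folder matches.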

Architecture for Airflow Deployment

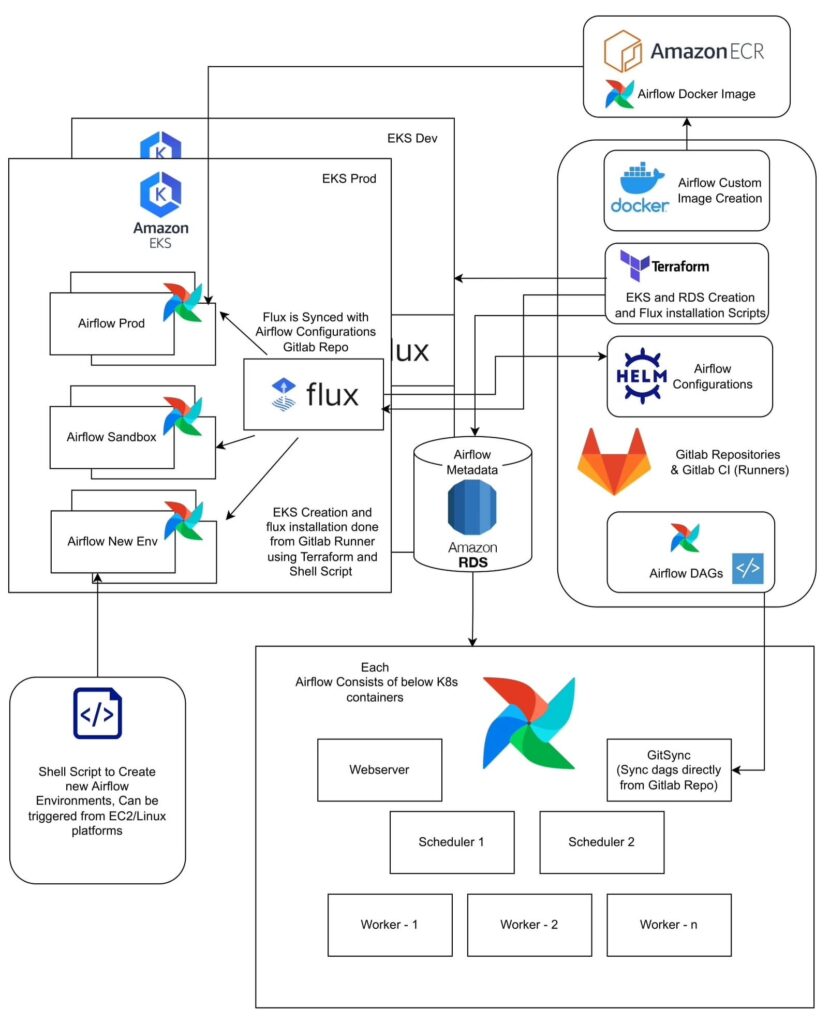

Let's propose the following architecture for Airflow deployment. A GitLab CI pipeline builds Docker images of the Airflow components (web server, metadata database, scheduler, worker) with the required Python libraries, dependencies, and any extra providers that Airflow needs. These images are then pushed to AWS ECR (Elastic Container Registry) using Terraform scripts that run from GitLab CI.
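
The build-and-push step can be pictured roughly as follows. This is a sketch, not the actual GitLab CI or Terraform code used here: the account ID, region, repository names, tag, and Dockerfile paths are placeholder assumptions, and the function only returns the `docker` commands a CI job might run instead of executing them. The registry host follows the standard ECR pattern `<account-id>.dkr.ecr.<region>.amazonaws.com`.

```python
from typing import List

# The four Airflow components named in this post; the short names are hypothetical.
COMPONENTS = ["webserver", "metadb", "scheduler", "worker"]


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str) -> str:
    """Build an image URI in the standard ECR registry format."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def build_and_push_commands(account_id: str, region: str, tag: str) -> List[str]:
    """Return the docker commands a CI job could run for each component."""
    commands = []
    for component in COMPONENTS:
        # Assumed layout: one repository and one Dockerfile per component.
        uri = ecr_image_uri(account_id, region, f"airflow/{component}", tag)
        commands.append(f"docker build -t {uri} -f docker/{component}/Dockerfile .")
        commands.append(f"docker push {uri}")
    return commands


if __name__ == "__main__":
    # Placeholder values, not real account details.
    for cmd in build_and_push_commands("123456789012", "us-east-1", "1.0.0"):
        print(cmd)
```

Keeping the URI construction in one function means the same naming scheme can be reused by whatever pulls the images later, so the build side and the deploy side cannot disagree about where an image lives.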

After this, the images are pulled from ECR and the Airflow components are created on EKS (Elastic Kubernetes Service) using Flux. Finally, we create the various Airflow environments (Dev, Prod, Stage, Sandbox) as needed using shell scripts, which can be triggered from EC2/Linux platforms (these scripts can be kept on different Git branches for convenience).
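
To make the per-environment setup more concrete, here is a small sketch of how the four environments could be mapped to isolated settings. It assumes one Kubernetes namespace and one Git branch per environment. The `airflow-` prefix, the `env/` branch naming, and the `EnvironmentConfig` type are illustrative choices, not details taken from the deployment above.

```python
import re
from dataclasses import dataclass

ENVIRONMENTS = ["Dev", "Prod", "Stage", "Sandbox"]

# Kubernetes namespace names must be valid DNS labels: lowercase alphanumerics
# or '-', starting and ending with an alphanumeric, at most 63 characters.
_DNS_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


@dataclass(frozen=True)
class EnvironmentConfig:
    name: str
    namespace: str   # assumed: one namespace per environment
    git_branch: str  # assumed: one branch per environment


def config_for(env: str) -> EnvironmentConfig:
    """Derive the isolated settings for one environment name."""
    slug = env.strip().lower()
    namespace = f"airflow-{slug}"
    if len(namespace) > 63 or not _DNS_LABEL.match(namespace):
        raise ValueError(f"{env!r} does not yield a valid namespace name")
    return EnvironmentConfig(name=env, namespace=namespace, git_branch=f"env/{slug}")


if __name__ == "__main__":
    for env in ENVIRONMENTS:
        print(config_for(env))
```

Deriving every setting from the environment name keeps the tenants logically separated while they share the same cluster, and it rejects names that Kubernetes would refuse before any script runs.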

DAGs are created to execute jobs on Snowflake data. All DAGs are kept inside the dags folder and are Git-synced to all available environments.

Postscript/What next?

I found this to be one of the better architectures for Airflow deployment among those I have used recently. Having struggled to choose the best solution for my own problem, I thought sharing it would help the community. As a disclaimer, this is just one suggestion among the many architectures available for Apache Airflow deployment. I’m not endorsing anything here in any way, so please make your choices cautiously.

With this I conclude the article; I hope it helps in your data engineering journey. For any questions about this post, connect with me on LinkedIn. For all software development requirements, contact our sales team at StatusNeo. Happy coding.