“Conversational AI Redefined: Harnessing Azure OpenAI to Develop a Custom ChatGPT”

Introduction: In today’s digital era, the ability to understand and generate natural language has become crucial across various industries. Azure OpenAI, a groundbreaking platform, has emerged as a leader in the field of natural language processing (NLP) and artificial intelligence (AI). Leveraging cutting-edge technologies and advanced models, Azure OpenAI empowers developers, researchers, and businesses to solve complex problems, create innovative applications, and unlock new possibilities in the realm of language understanding and generation.

Unleashing the Potential of NLP with Generative AI: Azure OpenAI provides access to a wide range of models that are specifically designed to understand and generate natural language. Its flagship GPT-3 and GPT-4 models are renowned for their capabilities in comprehending and generating human-like text. These models can be employed in diverse applications, including chatbots, content generation, language translation, summarisation, sentiment analysis, and much more. With GPT-4’s enhanced accuracy and the chat-optimised GPT-3.5 Turbo model (gpt-35-turbo), Azure OpenAI continues to extend what is possible with NLP.

Revolutionising Code Generation: In addition to its expertise in NLP, Azure OpenAI also offers Codex models that excel in understanding and generating code. Trained on a vast dataset of public code repositories, these models possess a profound understanding of programming languages such as Python, JavaScript, C#, and SQL. By leveraging Codex, developers can expedite their coding tasks, generate code snippets, perform code completion, and even facilitate code search and relevance. This integration of NLP and code generation empowers developers to streamline their coding workflows and accelerate software development processes.

Powerful Embeddings: Azure OpenAI’s Embeddings models play a pivotal role in extracting and representing the semantic meaning of text. These models offer functionality for similarity, text search, and code search. By utilising embeddings, developers can cluster similar documents, perform regression analysis, detect anomalies, and enhance search relevance. Furthermore, the recently introduced text-embedding-ada-002 model provides improved weights and an updated token limit, allowing for even more accurate and efficient embedding operations.
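As a rough illustration of how embeddings support similarity and search, the sketch below ranks documents by cosine similarity to a query vector. The vectors, document names, and helper functions are hypothetical and chosen for readability. Real embeddings returned by a model such as text-embedding-ada-002 have far more dimensions, and obtaining them requires a call to the service that is not shown here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float], documents: dict[str, list[float]]):
    """Return (document id, score) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in documents.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical 3-dimensional vectors standing in for real embeddings.
documents = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-faq": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]
ranking = rank_by_similarity(query, documents)
```

The same scoring function can back clustering or anomaly detection: documents whose best similarity to every cluster centre is low are candidates for outliers.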

Azure OpenAI Model Families Available for Developing Custom Generative AI-based Solutions:

GPT-4: A set of models that improve on GPT-3.5 and can understand as well as generate natural language and code.

GPT-3: A series of models that can understand and generate natural language. This includes the new ChatGPT model.

Codex: A series of models that can understand and generate code, including translating natural language to code.

Embeddings: A set of models that can understand and use embeddings, which are information-dense representations of the semantic meaning of text. There are three families of Embeddings models: similarity, text search, and code search.

Model Capabilities:

GPT-4 models: Currently available models include gpt-4 and gpt-4-32k. The latter supports a higher maximum input token limit.

GPT-3 models: The models in this family, in order of capability from highest to lowest, are text-davinci-003, text-curie-001, text-babbage-001, and text-ada-001.

ChatGPT (gpt-35-turbo): This model is designed for conversational interfaces and is used differently than previous GPT-3 models.

Codex models: The models in this family are code-davinci-002 and code-cushman-001.

Embeddings models: There are models available for similarity, text search, and code search. The capabilities and dimensions of these models vary.

Naming Convention: Model names follow the convention: {capability}-{family}[-{input-type}]-{identifier}, where capability represents the model’s capability, family indicates the relative family of the model, input-type (for Embeddings models only) indicates the type of embedding supported, and identifier represents the version of the model.
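The convention can be made concrete with a small parser. This is a sketch under two assumptions that are not stated in the convention itself: the set of family names is taken from the models listed in this article, and everything before the family is treated as the capability. Names that do not contain a known family, such as gpt-35-turbo, are reported as not matching.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: family names collected from the models named in this article.
KNOWN_FAMILIES = {"ada", "babbage", "curie", "davinci", "cushman"}


@dataclass
class ModelName:
    capability: str
    family: str
    input_type: Optional[str]  # only present for some Embeddings models
    identifier: str


def parse_model_name(name: str) -> Optional[ModelName]:
    """Split a name shaped like {capability}-{family}[-{input-type}]-{identifier}."""
    parts = name.split("-")
    family_positions = [i for i, part in enumerate(parts) if part in KNOWN_FAMILIES]
    if not family_positions:
        return None  # no known family, so the template does not apply
    i = family_positions[0]
    # A capability must precede the family and an identifier must follow it.
    if i == 0 or i >= len(parts) - 1:
        return None
    capability = "-".join(parts[:i])
    middle = parts[i + 1:-1]
    input_type = "-".join(middle) if middle else None
    return ModelName(capability, parts[i], input_type, parts[-1])


davinci = parse_model_name("text-davinci-003")
search_doc = parse_model_name("text-search-davinci-doc-001")
chat = parse_model_name("gpt-35-turbo")  # does not follow the template
```

Here text-davinci-003 splits into capability text, family davinci, and identifier 003, while a search embedding name such as text-search-davinci-doc-001 additionally yields the input type doc.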

Model Summary and Region Availability:

GPT-3 Models: Various GPT-3 models are available in different regions, with different maximum request tokens and training data availability.

GPT-4 Models: Currently, access to gpt-4 and gpt-4-32k is only available by request in specific regions.

Codex Models: Code-cushman-001 is currently unavailable for fine-tuning. The other available model is code-davinci-002.

Embeddings Models: Different models are available for similarity, text search, and code search, with variations in capability, maximum request tokens, and training data availability.

Several key parameters can be adjusted to tune the behaviour of your generative AI model in Azure OpenAI Studio. They control aspects such as randomness, response length, stopping criteria, and repetition, and they help guide the model’s behaviour. Here is an overview of these parameters:

Temperature: The temperature parameter controls the randomness of the model’s responses. A lower temperature value, such as 0.2, makes the model more focused and deterministic, resulting in more repetitive responses. Conversely, a higher temperature value, like 0.8, increases the randomness, leading to more diverse and creative responses.
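To see why a lower temperature makes output more deterministic, the sketch below applies the commonly used temperature-scaled softmax to a few made-up token scores. The scores are hypothetical, and the article does not describe how the service implements temperature internally, so treat this as an illustration of the general technique rather than of Azure OpenAI itself.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("this sketch requires a positive temperature")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
focused = softmax_with_temperature(logits, 0.2)  # mass concentrates on the top token
diverse = softmax_with_temperature(logits, 0.8)  # mass spreads across the candidates
```

With these scores, the top token receives over 99% of the probability at 0.2 but roughly 69% at 0.8, which is why higher values produce more varied text.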

Max Length (Tokens): To limit the length of the model’s response, you can set a maximum number of tokens. The API supports a maximum of 4000 tokens, shared between the prompt (system message, examples, message history, and user query) and the model’s response. One token is roughly four characters of typical English text.
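Because the prompt and the response share one limit, it can help to estimate how much room is left for the response. The sketch below uses the four-characters-per-token rule of thumb and the 4000-token figure from the paragraph above. The function names are hypothetical, and a real tokenizer will give different counts, so this is only a planning estimate.

```python
CHARS_PER_TOKEN = 4  # rough rule of thumb for typical English text
TOKEN_LIMIT = 4000   # shared by prompt and response, per the paragraph above


def estimate_tokens(text: str) -> int:
    """Rough estimate: length divided by four, rounded up. Not a real tokenizer."""
    return -(-len(text) // CHARS_PER_TOKEN)


def response_budget(prompt_parts: list[str], limit: int = TOKEN_LIMIT) -> int:
    """Estimated tokens left for the response after all prompt parts are counted."""
    used = sum(estimate_tokens(part) for part in prompt_parts)
    return max(0, limit - used)


system_message = "You are an assistant helping a user with their question."
user_query = "Summarise the attached meeting notes in three bullet points."
remaining = response_budget([system_message, user_query])
```

If the estimate approaches zero, older message history is usually the first thing to trim.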

Stop Sequences: By specifying stop sequences, you can control where the model should stop generating tokens in its response. For example, you can set a stop sequence to end the response at the completion of a sentence or a specific list. Up to four stop sequences can be defined, and the generated text will not include these stop sequences.

Top Probabilities (Top P): Top P, also known as nucleus sampling, is an alternative method to control randomness in the model’s responses. By adjusting the Top P value, you can control the diversity of token selection. Lowering the Top P narrows the model’s choices to more likely tokens, while increasing it allows the model to consider tokens with both high and low likelihood.
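Nucleus sampling can be sketched as a filter over the next-token distribution: keep the most likely tokens until their cumulative probability reaches Top P, then renormalise. The token probabilities and the function name below are hypothetical, and the snippet illustrates the general technique rather than the service’s internal implementation.

```python
def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the smallest set of most likely tokens whose total probability reaches top_p."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in the range (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    # Renormalise so the surviving probabilities sum to 1 again.
    return {token: p / total for token, p in kept}


# Hypothetical next-token distribution.
probs = {"the": 0.5, "a": 0.3, "this": 0.15, "zebra": 0.05}
narrow = top_p_filter(probs, 0.4)  # only the most likely token survives
wide = top_p_filter(probs, 0.9)    # the unlikely "zebra" is still excluded
```

Sampling then draws from the filtered distribution, so a low Top P removes unlikely tokens entirely instead of merely making them rarer.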

Frequency Penalty: The frequency penalty parameter reduces the chance of the model repeating tokens that have appeared frequently in the text generated so far. By applying a frequency penalty, you can decrease the likelihood of the model producing repetitive text in its responses.

Presence Penalty: Similar to the frequency penalty, the presence penalty parameter reduces the chance of the model repeating any token that has appeared in the generated text at all. Applying a presence penalty encourages the model to introduce new topics or information in its responses.
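The difference between the two penalties is easier to see in code. OpenAI’s public API documentation describes the adjustment as subtracting the frequency penalty once per previous occurrence of a token and the presence penalty once if the token has appeared at all. The sketch below follows that description; whether an Azure deployment applies exactly this formula is an assumption, and the scores and function name are hypothetical.

```python
from collections import Counter


def apply_penalties(
    logits: dict[str, float],
    generated: list[str],
    frequency_penalty: float = 0.0,
    presence_penalty: float = 0.0,
) -> dict[str, float]:
    """Lower the score of tokens that already appear in the generated text."""
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        count = counts.get(token, 0)
        seen = 1.0 if count > 0 else 0.0
        # Frequency penalty grows with each repeat; presence penalty is a flat, one-off cost.
        adjusted[token] = logit - count * frequency_penalty - seen * presence_penalty
    return adjusted


logits = {"cat": 2.0, "dog": 2.0, "fish": 2.0}  # hypothetical, equally likely tokens
generated = ["cat", "cat", "cat", "dog"]
adjusted = apply_penalties(logits, generated, frequency_penalty=0.5, presence_penalty=0.25)
```

In this example the thrice-used token is penalised most, the once-used token less, and the unused token not at all, so the model is nudged toward new words.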

Pre-response Text: To provide additional context or guide the model’s behaviour, you can insert pre-response text after the user’s input but before the model’s response. This allows you to set the stage or provide specific instructions before the model generates its response.

Post-response Text: Similarly, post-response text can be added after the model’s generated response. This text can encourage further user input or direct the conversation in a specific direction. By providing post-response text, you can guide the user’s next query or prompt them to continue the conversation.

By adjusting these parameters effectively, you can tune the behaviour of your generative AI model in Azure OpenAI Studio. Experimenting with different settings and finding the right balance allows you to generate more coherent, contextually relevant, and engaging responses from the model.

Prompt engineering is a crucial aspect of working with language models like GPT-3.5 in Azure OpenAI Studio. By carefully crafting prompts, developers can influence the output and behaviour of the model. Here are some techniques for effective prompt engineering:

Clear and Specific Prompts: When formulating prompts, it’s important to be clear and specific about the desired task or context. Providing explicit instructions or examples helps guide the model’s generation. By clearly defining the input, you can ensure that the output aligns with your intentions.

Contextual Prompts: To leverage the contextual understanding of the model, include relevant information in the prompt. By providing context, such as a few preceding sentences, the model can generate responses that are more coherent and relevant to the given context. This technique is particularly useful for generating continuations of text or engaging in conversational interactions.

Controlling Output Length: Controlling the length of the generated output is essential to ensure concise and meaningful responses. You can achieve this by setting a maximum token limit on the request (the Max Length parameter) or by stating the desired length explicitly in the prompt, for example “answer in two sentences”. This helps avoid excessively long or verbose responses, especially when working with limited text input fields or character constraints.

Temperature and Top-K Sampling: GPT-3.5 models use a temperature parameter during sampling, which affects the randomness of the generated output. Higher temperature values (e.g., 0.8) produce more diverse and creative responses, while lower values (e.g., 0.2) result in more focused and deterministic outputs. Similarly, top-k sampling limits the selection of each next token to the k most likely options, promoting more controlled and coherent responses. Note that the parameters listed earlier for Azure OpenAI Studio include Top P (nucleus sampling) rather than a top-k setting, so Top P is the control to reach for there.

System Prompts and User Instructions: In certain scenarios, you can benefit from using system-level prompts that guide the model’s behaviour. For example, you can include a system instruction at the beginning of the prompt, such as “You are an assistant helping a user with their question.” This instruction can set the context for the model and provide guidance on how it should approach the task.

Answering Questions Effectively: When using the model to answer questions, it can be helpful to include important details in the prompt, such as specifying the domain or asking for a concise response. Additionally, using system-level instructions, like “Please answer the following question,” can guide the model to provide more focused and relevant answers.

Conditioning and Bias Mitigation: To address potential biases in generative AI outputs, you can condition the model by explicitly specifying desired attributes. For example, if generating a news article, you can mention the importance of neutrality and avoiding controversial opinions. Conditioning prompts can help mitigate biases and ensure more objective and unbiased responses.

Iterative Refinement: Prompt engineering often involves an iterative process of refinement and experimentation. By testing different prompts, instructions, and techniques, you can observe the model’s behaviour and make adjustments accordingly. Iterative refinement allows for optimising the output quality and aligning it with the desired results.

Remember, prompt engineering is an ongoing process that requires experimentation and refinement to achieve the desired outputs. By understanding the nuances of prompt construction and employing these techniques effectively, developers can harness the full potential of generative AI models like GPT-3.5 in Azure OpenAI.

Azure OpenAI models offer a wide range of business applications, streamlining processes and enhancing productivity. Here are some of the applications where Azure OpenAI models can be leveraged:

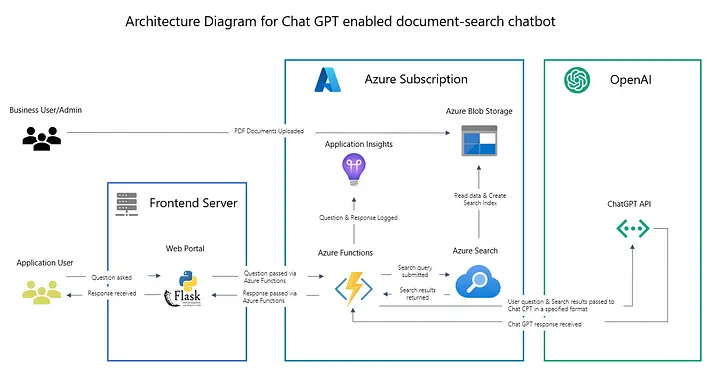

Customer Support Chatbots: Deploy chatbots powered by GPT-3 to provide instant and accurate responses to customer queries, improving customer satisfaction and reducing response times.

Content Generation: Use GPT-3 or GPT-4 to automatically generate engaging blog posts, social media content, product descriptions, and other textual content, saving time and effort for content creators.

Language Translation: Employ Azure OpenAI models to develop advanced language translation systems that can translate text between multiple languages with high accuracy.

Sentiment Analysis: Analyse customer feedback, social media posts, and online reviews using sentiment analysis models to gain insights into customer sentiment and make data-driven business decisions.

Virtual Assistants: Build intelligent virtual assistants that can understand natural language and perform tasks such as scheduling appointments, managing calendars, and providing personalised recommendations.

Resume Screening: Use OpenAI models to automate the screening of resumes, matching job requirements with candidate qualifications and selecting the most suitable candidates for further evaluation.

Fraud Detection: Develop fraud detection systems that can analyze large volumes of transactional data, identify patterns, and flag potential fraudulent activities in real-time.

Market Research: Utilize OpenAI models to automate market research tasks, such as collecting and analyzing data from various sources, providing valuable insights for decision-making.

Data Summarization: Use OpenAI models to automatically summarize large volumes of text, saving time and effort in reading and extracting key information from lengthy documents.

Personalized Recommendations: Leverage Azure OpenAI models to develop recommendation systems that provide personalized product recommendations based on customer preferences and behavior.

Chat-based Sales Support: Enable chat-based sales support systems that can understand customer requirements and provide real-time product recommendations, increasing sales conversion rates.

Content Curation: Develop intelligent content curation systems that can analyze user preferences, search trends, and social media discussions to curate and recommend relevant content to users.

Conversational Interfaces for E-commerce: Create conversational interfaces that allow customers to interact with e-commerce platforms using natural language, assisting with product search, recommendations, and purchases.

Compliance Monitoring: Utilize OpenAI models to monitor and analyze compliance-related documents, identifying potential risks and ensuring adherence to regulatory requirements.

Voice Assistants for Call Centers: Develop voice-based assistants powered by OpenAI models to handle customer calls, understand inquiries, and provide accurate responses, reducing call waiting times.

Conclusion: Azure OpenAI has revolutionised the field of natural language processing, enabling businesses and developers to harness the power of AI in understanding and generating human-like text. With advanced models like GPT-3, GPT-4, and Codex, coupled with powerful embeddings, Azure OpenAI provides a comprehensive ecosystem for solving complex language-related challenges and creating innovative applications. By leveraging the capabilities of Azure OpenAI, organisations can unlock new opportunities, streamline processes, and provide exceptional experiences through the power of natural language understanding and generation.
