Improving Retrieval Augmented Generation: Self Querying Retrieval

Note: For more articles on Generative AI, LLMs, RAG, and related topics, see the “Generative AI Series.”

For my full library of such articles on Medium, see: <GENERATIVE AI READLIST>

I have been trying to dig a little deeper into what LangChain does behind the scenes. For enterprise-level chatbots, we will need much more sophisticated ways of working with LangChain components, including our own customizations. The following is a shallow dig into one important retrieval technique, Self Querying Retrieval, which can be used to analyze Excel documents, financial data, and similar structured sources.

First things first. What is the issue?

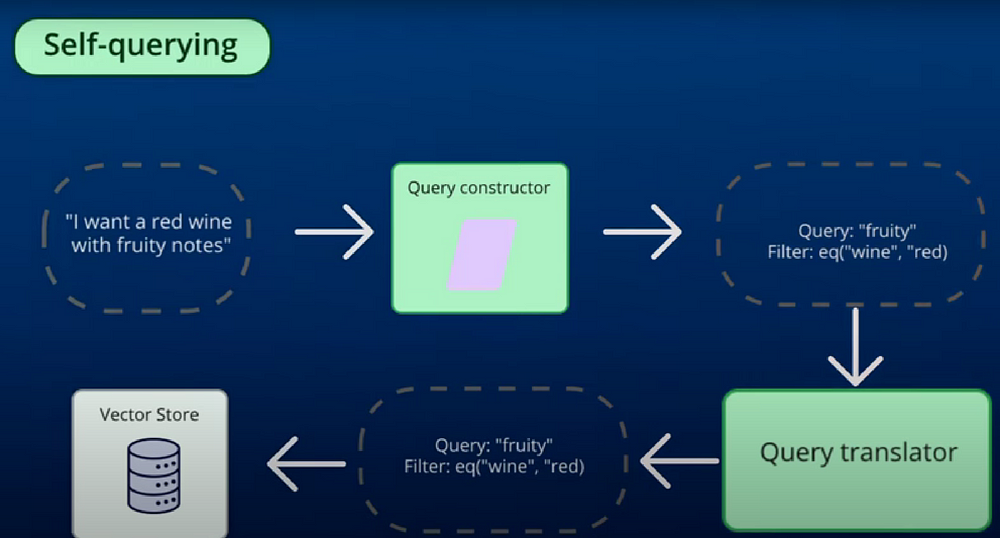

It’s simple. We cannot depend on semantic search for every retrieval task. Semantic search makes sense only when we are after the meaning and intent of words.

But when we are searching for things that are stored explicitly in the database, for example when we just want to perform a lookup, using semantic search is illogical. It can even give inaccurate results, and it uses unnecessary computing power.

This issue is generally seen when dealing with Excel files or financial data. For those cases, LangChain provides a utility called Self Querying Retrieval.

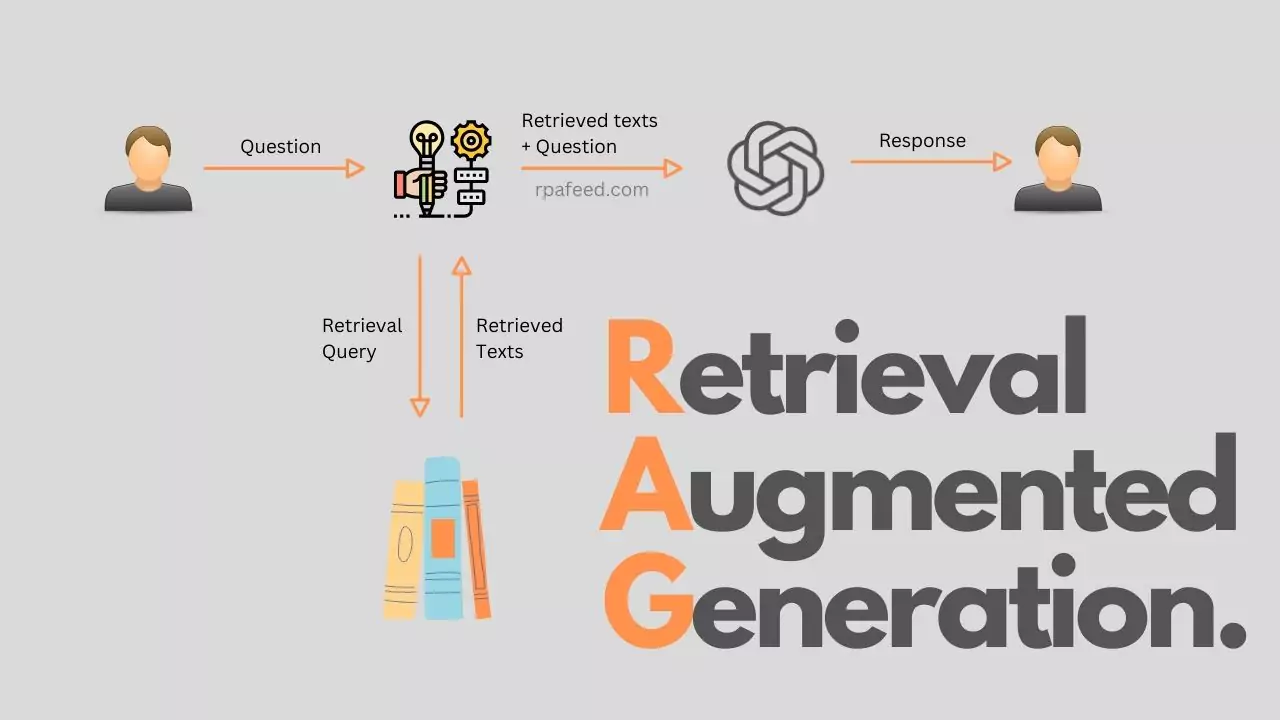

What we do is simple. We insert a ‘step’ between the retrieval and the input. So,

- the person types in the query

- we use a language model to reformat the query to get semantic elements of the query

- we also convert the query so we can do searches on metadata.

To reiterate, the idea is rather simple. If we are looking for a movie and we want to specify the year, we do not need semantic search for the year. We go to the vector store and do a lookup on the year metadata, filtering the results down to that year.
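The movie example above can be sketched in plain Python. This is only an illustration of the idea, not LangChain code: the `Movie` and `StructuredQuery` classes, the `retrieve` function, and the sample data are all made up for this sketch, and a simple word-overlap score stands in for real embedding similarity.

```python
from dataclasses import dataclass, field


@dataclass
class Movie:
    title: str
    summary: str
    year: int


@dataclass
class StructuredQuery:
    """Hypothetical output of the extra step: free text plus an exact metadata filter."""
    query: str
    filter: dict = field(default_factory=dict)


def keyword_score(text, query):
    """Crude stand-in for embedding similarity: count shared lowercase words."""
    return len(set(text.lower().split()) & set(query.lower().split()))


def retrieve(movies, sq):
    # Exact lookup first: keep only movies whose metadata matches every filter key.
    candidates = [
        m for m in movies
        if all(getattr(m, key) == value for key, value in sq.filter.items())
    ]
    # Then rank the survivors by the semantic part of the query.
    return sorted(candidates, key=lambda m: keyword_score(m.summary, sq.query), reverse=True)


# Made-up sample data for the illustration.
movies = [
    Movie("Toy Story", "toys come alive when humans leave", 1995),
    Movie("Heat", "a detective hunts a crew of thieves", 1995),
    Movie("Small Soldiers", "toys come alive and fight", 1998),
]

# "movies about toys coming alive, from 1995" split into its two parts.
sq = StructuredQuery(query="toys come alive", filter={"year": 1995})
results = retrieve(movies, sq)
print([m.title for m in results])
```

Note that "Small Soldiers" matches the text well but never reaches the ranking step, because the year filter removes it first. In the real retriever, a language model produces the split that is hard-coded here.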

Now that our fundamentals are clear, let’s understand how LangChain helps us implement self-querying.

We use the “Self Query Retriever”, which allows us to use LangChain to query a vector database. Let’s take a look at how this retriever is implemented, which is what I was after.

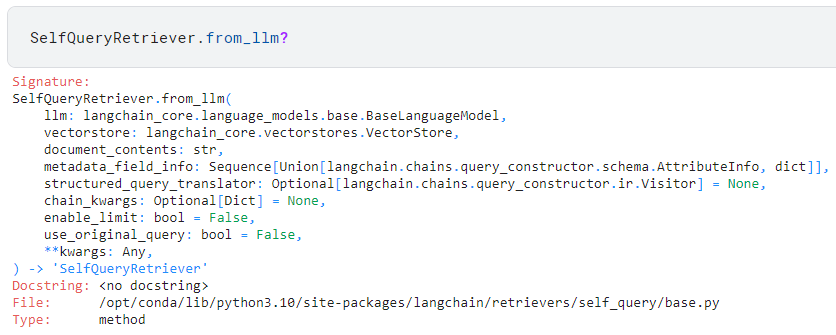

The only class method of the self-query retriever class is `from_llm`. It takes four required parameters to create a self-query retriever: `llm`, `vectorstore`, `document_contents`, and `metadata_field_info`.

- `llm` is for passing a language model.

- `vectorstore` is used to pass a vector store.

- The name of the `document_contents` parameter is a bit misleading, and it is the one I found most confusing. It doesn’t refer to the actual contents of the stored documents but rather to a short description of them. `document_content_description` would be a better name.

- `metadata_field_info` is a sequence of `AttributeInfo` objects or dictionaries containing information about the data in the vector database. We define a list of `AttributeInfo` objects that states what each attribute is and its datatype, so that the language model knows what it is dealing with.

I am not going into the optional parameters.
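To make the last two parameters more concrete, here is a minimal sketch of the kind of information they carry. It deliberately avoids importing LangChain: `FieldInfo` is a hypothetical stand-in for `AttributeInfo`, `describe_schema` is a made-up helper, and the field names are sample data. The JSON layout is only an assumption about how such a description could be shown to a language model.

```python
import json
from dataclasses import dataclass


@dataclass
class FieldInfo:
    """Hypothetical stand-in for AttributeInfo: what a field is and its datatype."""
    name: str
    description: str
    type: str


# A short description of what the documents are, not the documents themselves.
document_contents = "Brief summary of a movie"

# One entry per metadata field the language model is allowed to filter on.
metadata_field_info = [
    FieldInfo("year", "The year the movie was released", "integer"),
    FieldInfo("genre", "The genre of the movie", "string"),
    FieldInfo("rating", "A 1-10 rating for the movie", "float"),
]


def describe_schema(contents, fields):
    """Render the description and fields as JSON text that could go into a prompt."""
    schema = {
        "content": contents,
        "attributes": {
            f.name: {"description": f.description, "type": f.type} for f in fields
        },
    }
    return json.dumps(schema, indent=2)


schema_text = describe_schema(document_contents, metadata_field_info)
print(schema_text)
```

The point of the sketch is that both parameters are descriptions for the model: one says what the documents are about, the other says which fields exist and what type each one holds.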

Now, let us see how we handle the parameters. Based on the parameters passed in, we use a series of if statements to determine what to do. First, we check whether a structured query translator has already been defined. If not, we use the built-in translator for the given vector store. Next, we check the chain keyword arguments: we either use the values passed in or fall back to an empty dictionary. The next two if statements then inspect these keyword arguments.

The two keys we look for are the allowed comparators and operators. These keys determine how we can write the filter expressions.
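The checks described above can be sketched as follows. This follows the description in this article rather than reproducing the library source: `ToyTranslator`, `builtin_translator_for`, `resolve_settings`, and the `"toy_store"` name are hypothetical, and the rule that a key is filled in only when the caller has not set it is my reading of those if statements.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ToyTranslator:
    """Hypothetical translator: knows which filter syntax its vector store supports."""
    allowed_comparators: Optional[Sequence[str]] = None
    allowed_operators: Optional[Sequence[str]] = None


def builtin_translator_for(vectorstore_name):
    """Hypothetical lookup of the default translator for a vector store."""
    defaults = {
        "toy_store": ToyTranslator(["eq", "gt", "lt"], ["and", "or"]),
    }
    return defaults[vectorstore_name]


def resolve_settings(vectorstore_name, translator=None, chain_kwargs=None):
    # 1. No translator passed in: fall back to the built-in one for this store.
    if translator is None:
        translator = builtin_translator_for(vectorstore_name)
    # 2. Use the caller's keyword arguments, or start from an empty dictionary.
    #    The copy keeps the caller's own dictionary unchanged.
    chain_kwargs = dict(chain_kwargs or {})
    # 3. Fill in each key only if the caller left it out and the translator declares it.
    if "allowed_comparators" not in chain_kwargs and translator.allowed_comparators is not None:
        chain_kwargs["allowed_comparators"] = translator.allowed_comparators
    if "allowed_operators" not in chain_kwargs and translator.allowed_operators is not None:
        chain_kwargs["allowed_operators"] = translator.allowed_operators
    return translator, chain_kwargs


_, defaults_used = resolve_settings("toy_store")
_, caller_wins = resolve_settings("toy_store", chain_kwargs={"allowed_operators": ["and"]})
print(defaults_used)
print(caller_wins)
```

In the second call the caller restricts the operators to `and`, so only the comparators are taken from the translator.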

With everything defined, we can now create our query constructor. We need to pass in the LLM, the document content description, the metadata fields, whether or not we want to enable the limit, and the keyword arguments to pass to the chain. Given these elements, the function returns a Runnable object, which we can invoke to turn a user query into a structured query.

```python
query_constructor = load_query_constructor_runnable(
    llm,  # language model
    document_contents,  # description of the documents
    metadata_field_info,  # metadata information
    enable_limit=enable_limit,
    **chain_kwargs,
)
```

At the end of this class method, we need to return the self-query retriever. This method returns an instance of the self-query retriever class. We pass in the query constructor we just defined, along with the passed-in vector store, whether or not to use the original query, the translator, and any remaining keyword arguments.

```python
return cls(
    query_constructor=query_constructor,
    vectorstore=vectorstore,  # vector database
    use_original_query=use_original_query,
    structured_query_translator=structured_query_translator,
    **kwargs,
)
```

Thus, this method allows us to create a RAG app by passing only four required fields: the LLM, the vector database, a description of the documents, and the metadata information.