Test Smarter: LLMs in Automation Made Easy

The demand for faster software delivery without compromising quality has driven steady innovation in test automation. One of the most significant recent advances is the use of Large Language Models (LLMs) such as OpenAI’s GPT series. Many teams already use LLMs to generate test scripts, but their potential in test automation extends well beyond code generation. This post explores how LLMs are changing test automation pipelines, test data management, failure analysis, and reporting.

What Are LLMs and Why Do They Matter in QA?

Large Language Models (LLMs) are deep learning models trained on vast corpora of text and code, capable of understanding and generating human-like text. Tools like ChatGPT, Claude, and Code Llama have already started influencing coding workflows, and their role in Quality Assurance (QA) is becoming increasingly significant. For QA teams, three strengths stand out:

- Speed: Automate test-related documentation, planning, and communication.

- Intelligence: Analyze patterns, predict failure points, and generate edge cases.

- Adaptability: Tailor test suggestions and improvements based on project-specific context.

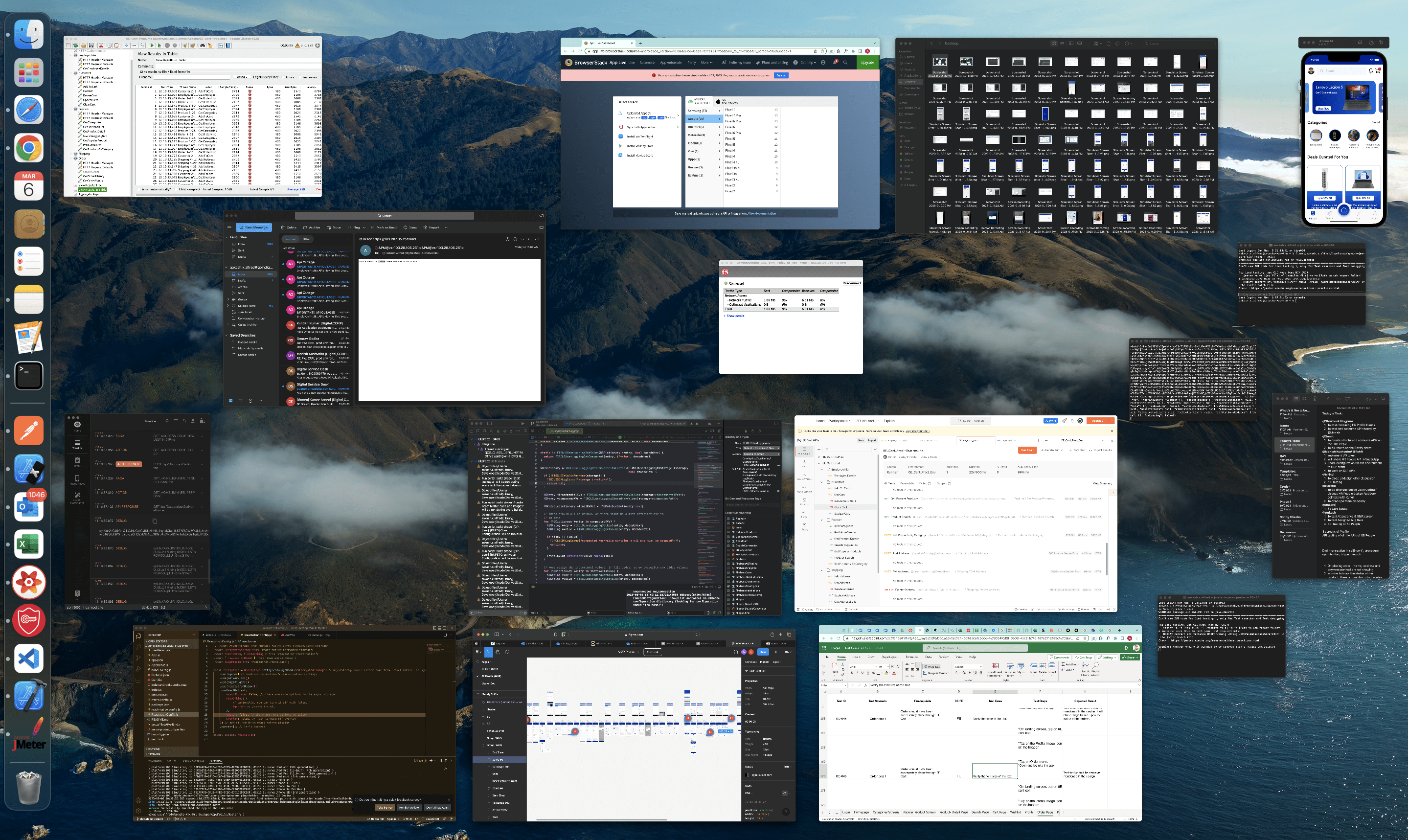

Key Use Cases of LLMs in Test Automation

1. Intelligent Test Case Generation: While basic test case generation is now commonplace, LLMs can:

- Generate context-aware test cases from requirements or user stories.

- Suggest missing edge cases or negative test scenarios.

- Convert manual test cases into automated scripts in Selenium, Robot Framework, etc.
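The points above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `generate_test_cases`, the prompt wording, and the JSON shape are all assumptions made for this example, and `complete` stands in for whatever function sends a prompt to your LLM and returns its text reply.

```python
import json
from typing import Callable

# Hypothetical prompt; adjust the wording and keys to your own conventions.
PROMPT_TEMPLATE = """You are a QA engineer. Read the user story below and return
a JSON array of test cases. Each item must have the keys "title", "steps"
(a list of strings), "expected", and "type" ("positive", "negative", or "edge").

User story:
{story}
"""

REQUIRED_KEYS = {"title", "steps", "expected", "type"}


def generate_test_cases(story: str, complete: Callable[[str], str]) -> list[dict]:
    """Ask the model for test cases and keep only the well-formed ones."""
    raw = complete(PROMPT_TEMPLATE.format(story=story))
    try:
        cases = json.loads(raw)
    except json.JSONDecodeError:
        return []  # reply was not valid JSON; the caller can decide to retry
    if not isinstance(cases, list):
        return []
    # Drop anything that is not an object carrying every required key.
    return [c for c in cases if isinstance(c, dict) and REQUIRED_KEYS.issubset(c)]
```

Passing the model call in as a parameter keeps the sketch independent of any particular provider and makes it easy to test with a stub. The filtering step matters because model output is not guaranteed to follow the requested format.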

2. Automated Test Data Creation: Data creation often becomes a bottleneck. LLMs can:

- Generate realistic, anonymized test data for different domains.

- Create complex data structures (e.g., nested JSON, XML) with validations.

- Assist in mocking APIs or simulating service responses.
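Generated data is only useful if it passes the same validations the application enforces, so it helps to check it before loading it as fixtures. The sketch below assumes a hypothetical "order" record with a nested `customer` object and an `items` list; the field names and rules are illustrative, not taken from any real schema.

```python
import json
import re

# Deliberately loose email check, good enough for screening test fixtures.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_order(record: dict) -> list[str]:
    """Return a list of problems found in one generated order record."""
    problems = []
    customer = record.get("customer")
    if not isinstance(customer, dict):
        problems.append("customer must be an object")
    elif not EMAIL_RE.match(str(customer.get("email", ""))):
        problems.append("customer.email is not a valid address")

    items = record.get("items")
    if not isinstance(items, list) or not items:
        problems.append("items must be a non-empty list")
    else:
        for i, item in enumerate(items):
            if not isinstance(item, dict):
                problems.append(f"items[{i}] must be an object")
                continue
            qty = item.get("quantity")
            # bool is a subclass of int in Python, so exclude it explicitly.
            if not isinstance(qty, int) or isinstance(qty, bool) or qty <= 0:
                problems.append(f"items[{i}].quantity must be a positive integer")
    return problems


def load_valid_records(raw_json: str) -> list[dict]:
    """Parse an LLM reply (a JSON array) and keep only records with no problems."""
    records = json.loads(raw_json)
    return [r for r in records if isinstance(r, dict) and not validate_order(r)]
```

Returning a list of problems instead of a boolean makes it possible to feed the rejected records and their errors back into a follow-up prompt.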

3. Failure Root Cause Analysis: Every failed test needs a root cause before it can be fixed. To speed that up, LLMs can:

- Analyze logs and tracebacks to suggest probable failure points.

- Recommend potential fixes or retry strategies.

- Summarize test reports in human-readable formats.
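Before a log is handed to a model, it usually helps to cut it down to the part that matters. The sketch below assumes the failing test was written in Python and that its log contains a standard `Traceback (most recent call last):` block; the function names and the prompt wording are hypothetical, and chained exceptions are not handled.

```python
TRACEBACK_HEADER = "Traceback (most recent call last):"


def extract_last_traceback(log_text: str, max_lines: int = 40) -> str:
    """Return the last Python traceback in the log, capped at max_lines lines."""
    lines = log_text.splitlines()
    start = None
    for i, line in enumerate(lines):
        if line.startswith(TRACEBACK_HEADER):
            start = i  # keep overwriting so we end up with the last one
    if start is None:
        return ""
    block = [lines[start]]
    for line in lines[start + 1:]:
        block.append(line)
        # Frame lines are indented; the first unindented line is the exception.
        if line and not line[0].isspace():
            break
    # If the block is too long, keep its tail, where the exception line sits.
    return "\n".join(block[-max_lines:])


def build_triage_prompt(test_name: str, log_text: str) -> str:
    """Build a short root-cause prompt from a failing test's log."""
    tb = extract_last_traceback(log_text) or "(no traceback found)"
    return (
        f"Test `{test_name}` failed. Based on the traceback below, list the most "
        "probable root cause, one suggested fix, and whether a retry is likely "
        "to help.\n\n"
        f"{tb}"
    )
```

Sending only the traceback keeps the prompt small and avoids shipping unrelated log lines to the model.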

4. Test Documentation and Reporting: LLMs excel at:

- Creating and maintaining test plans, test strategies, and traceability matrices.

- Auto-generating executive summaries and QA reports from raw results.
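A summary is easier to generate reliably when the model receives compact facts instead of raw output. As one possible approach, the sketch below reduces a JUnit-style XML report (the `testsuite` / `testcase` layout that many runners emit) to a small dictionary that can be pasted into a prompt. The function name and the dictionary keys are assumptions for this example.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Reduce a JUnit-style XML report to counts plus a list of failures."""
    root = ET.fromstring(xml_text)
    summary = {"total": 0, "failed": 0, "skipped": 0, "failures": []}
    for case in root.iter("testcase"):
        summary["total"] += 1
        if case.find("skipped") is not None:
            summary["skipped"] += 1
            continue
        # Compare against None explicitly: an Element without children is falsy.
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is not None:
            summary["failed"] += 1
            name = f'{case.get("classname", "")}.{case.get("name", "")}'
            summary["failures"].append(
                {"test": name, "message": problem.get("message", "")}
            )
    summary["passed"] = summary["total"] - summary["failed"] - summary["skipped"]
    return summary
```

The counts come from the report itself, so the model is only asked to phrase the summary, not to do the arithmetic.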

Challenges and Considerations

While the benefits are promising, a few challenges persist:

- Data Privacy: Prompts, logs, and test data sent to an LLM must not expose sensitive project data.

- Accuracy: Generated scripts and analyses can be wrong, so they need thorough validation before they are trusted.

- Context Limitation: LLMs have a finite context window, so some still struggle with complex or lengthy inputs such as long logs or large requirement documents.
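Two of these challenges can be partly addressed in code before a prompt ever leaves your network. The sketch below masks a few obvious kinds of sensitive values and trims oversized text to a character budget. The patterns, placeholder strings, and function names are assumptions for illustration; they are a starting point and not a complete privacy control.

```python
import re

# Illustrative patterns only; extend them for your own data.
PATTERNS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (
        re.compile(r"(?i)\b(password|token|api[_-]?key|secret)\b(\s*[=:]\s*)\S+"),
        r"\1\2<REDACTED>",
    ),
]


def redact(text: str) -> str:
    """Mask emails, IPv4-looking addresses, and key=value style secrets."""
    for pattern, replacement in PATTERNS:
        text = pattern.sub(replacement, text)
    return text


def clip_middle(text: str, max_chars: int = 4000,
                marker: str = "\n...[truncated]...\n") -> str:
    """Keep the start and end of an oversized text and drop the middle."""
    if len(text) <= max_chars:
        return text
    keep = max_chars - len(marker)
    if keep <= 0:
        return text[:max_chars]
    head = keep // 2
    tail = keep - head
    return text[:head] + marker + text[len(text) - tail:]
```

For example, `redact("login user=jane@corp.example from 10.0.0.12 password=hunter2")` returns `"login user=<EMAIL> from <IP> password=<REDACTED>"`. A character budget is only a rough stand-in for a token limit, which depends on the model's tokenizer.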

The Future of LLMs in QA

As LLMs are fine-tuned for QA work and integrated more tightly into dev toolchains, expect:

- Custom LLMs trained on internal test data.

- More tools that embed LLMs directly into CI/CD pipelines and IDEs.

- A rise in collaborative AI agents that aid test planning, maintenance, and debugging.

Conclusion

The adoption of LLMs in test automation is more than a productivity hack; it is a paradigm shift. By moving beyond code generation, LLMs give QA teams an opportunity to rethink how they work within the modern software development life cycle (SDLC). Early adopters who learn to use these capabilities fully will lead the next wave of intelligent software testing.